
Feat: parseJson #2293



mattsse left a comment


I think that should work

> If I understand the ABI spec correctly, structs are encoded as tuples.

Exactly.

For objects, we should be able to convert them into a Vec<ParamType>, which encodes/decodes as a tuple. That means value_to_type has to be recursive over Value::Object.
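A minimal sketch of that recursive mapping, in plain Rust with std only. The `Json` and `AbiType` enums and the `value_to_type` body here are hypothetical stand-ins, not serde_json's `Value` or ethers' `ParamType`, and numbers are assumed to map to `uint256`. Objects become tuples whose members follow the map's key order, so a `BTreeMap` yields them alphabetically.

```rust
use std::collections::BTreeMap;

/// Hypothetical std-only stand-in for a parsed JSON value (not serde_json::Value).
#[allow(dead_code)]
#[derive(Debug, Clone)]
enum Json {
    Bool(bool),
    Number(u64),
    Str(String),
    Array(Vec<Json>),
    // BTreeMap keeps keys sorted, like serde_json's default Map.
    Object(BTreeMap<String, Json>),
}

/// Hypothetical ABI type descriptor (not ethers' ParamType).
#[derive(Debug, Clone, PartialEq)]
enum AbiType {
    Bool,
    Uint256,
    String,
    Array(Box<AbiType>),
    Tuple(Vec<AbiType>),
}

impl AbiType {
    /// Solidity-style type string, e.g. "(bool,uint256[])".
    fn canonical(&self) -> String {
        match self {
            AbiType::Bool => "bool".to_string(),
            AbiType::Uint256 => "uint256".to_string(),
            AbiType::String => "string".to_string(),
            AbiType::Array(inner) => format!("{}[]", inner.canonical()),
            AbiType::Tuple(fields) => {
                let parts: Vec<String> = fields.iter().map(|f| f.canonical()).collect();
                format!("({})", parts.join(","))
            }
        }
    }
}

/// Recursively infer an ABI type. Objects become tuples (members in key order).
/// Returns None for an empty array, whose element type cannot be inferred.
fn value_to_type(v: &Json) -> Option<AbiType> {
    match v {
        Json::Bool(_) => Some(AbiType::Bool),
        Json::Number(_) => Some(AbiType::Uint256),
        Json::Str(_) => Some(AbiType::String),
        // Assumes homogeneous arrays: the element type comes from the first element.
        Json::Array(items) => {
            let first = value_to_type(items.first()?)?;
            Some(AbiType::Array(Box::new(first)))
        }
        // Recurse into every member; any uninferable member makes the whole object fail.
        Json::Object(map) => {
            let fields: Option<Vec<AbiType>> = map.values().map(value_to_type).collect();
            Some(AbiType::Tuple(fields?))
        }
    }
}

fn main() {
    // {"b": true, "a": {"y": "hi", "x": [1, 2]}}
    let mut inner = BTreeMap::new();
    inner.insert("y".to_string(), Json::Str("hi".to_string()));
    inner.insert("x".to_string(), Json::Array(vec![Json::Number(1), Json::Number(2)]));
    let mut outer = BTreeMap::new();
    outer.insert("b".to_string(), Json::Bool(true));
    outer.insert("a".to_string(), Json::Object(inner));
    let ty = value_to_type(&Json::Object(outer)).unwrap();
    println!("{}", ty.canonical()); // ((uint256[],string),bool)
}
```

Note how the nested object `a` comes first and its own members are reordered to `x, y`, which is exactly the ordering question raised further down in this thread.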

```rust
}
fn parse_json(state: &mut Cheatcodes, json: &str, key: &str) -> Result<Bytes, Bytes> {
    // let v: Value = serde_json::from_str(json).map_err(util::encode_error)?;
    let values: Value = jsonpath_rust::JsonPathFinder::from_str(json, key)?.find();
```


What's the benefit of JsonPathFinder here?


The crate, you mean? It saves me from having to implement the JSONPath syntax myself.

@mattsse What do you think of the fact that

```solidity
function test_uintArray() public {
    bytes memory data = cheats.parseJson(json, ".uintArray");
    uint[] memory decodedData = abi.decode(data, (uint[]));
    assertEq(42, decodedData[0]);
    assertEq(43, decodedData[1]);
}
```
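For reference, `abi.decode(data, (uint[]))` in the test above expects the standard ABI layout for a single dynamic array argument: a head word holding the offset to the array data (0x20), then the length, then one 32-byte word per element. The hand-rolled encoder below is a hypothetical illustration of that layout, limited to values that fit in a `u64`; it is not the cheatcode's implementation.

```rust
/// Left-pad a u64 into a 32-byte big-endian ABI word.
fn word(v: u64) -> [u8; 32] {
    let mut w = [0u8; 32];
    w[24..].copy_from_slice(&v.to_be_bytes());
    w
}

/// Hypothetical hand-rolled `abi.encode(uint256[])` for a single dynamic array argument:
/// head = offset to the array data, tail = length followed by each element.
fn encode_uint_array(values: &[u64]) -> Vec<u8> {
    let mut out = Vec::with_capacity(32 * (2 + values.len()));
    out.extend_from_slice(&word(0x20)); // offset of the tail (right after the one head word)
    out.extend_from_slice(&word(values.len() as u64)); // array length
    for &v in values {
        out.extend_from_slice(&word(v)); // each element as its own 32-byte word
    }
    out
}

fn main() {
    // The values from test_uintArray: four 32-byte words in total.
    let data = encode_uint_array(&[42, 43]);
    for chunk in data.chunks(32) {
        let hex: String = chunk.iter().map(|b| format!("{:02x}", b)).collect();
        println!("{}", hex);
    }
}
```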


This is nice. What if I want to query a JSON object multiple times? For example, say I'd like to do `bytes memory data = cheats.parseJson(json)` and then `data.getUintArray(".uintArray")` followed by `data.getString(".str")`. I guess we'd want a parseJson variant that does not take a second argument and reads the entire thing, plus a JSON library (which we discussed before, probably in forge-std) with nice utils that handle the ABI decoding.


> parseJson variant which does not take a second argument and reads the entire thing

Reads it into what? We already have the JSON as a string via readFile. So what we could do is basically a library that reads the JSON via readFile, stores the string locally, and then calls parseJson(key) on the stored value.

Agreed on the library for forge-std.

```rust
use crate::{
    abi::HEVMCalls,
    executor::inspector::{cheatcodes::util, Cheatcodes},
    executor::inspector::{
```


(arbitrary comment location to start a thread)

I haven't taken a close look at this yet, but just wanted to comment on this:

Address inference from a string is not great. Could it misinterpret a hex-encoded bytes string of the same length? Is this an assumption we are willing to make?

I think ethers.js has a good convention for distinguishing hex strings that are numbers from hex strings that are bytes. From their docs:

> For example, the string "0x1234" is 6 characters long (and in this case 6 bytes long). This is not equivalent to the array [ 0x12, 0x34 ], which is 2 bytes long.

So we could use the same convention for JSON parsing and script output writing. I did a quick sanity check against that portion of their docs, but would love someone to double-check it and confirm it's a good approach here.
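To make the ethers.js distinction concrete: treated as text, "0x1234" is 6 bytes, while decoded as hex data it is 2 bytes. The same check shows why address inference is ambiguous: any "0x" string with 40 hex digits decodes to 20 bytes, which fits an `address` just as well as a `bytes20` or `bytes` value. `hex_to_bytes` and `could_be_address` are hypothetical helpers that only illustrate this; they are not part of the PR.

```rust
/// Decode a "0x"-prefixed hex string into raw bytes (the "hex string as data" view).
/// Returns None for a missing prefix, an odd number of digits, or non-hex characters.
fn hex_to_bytes(s: &str) -> Option<Vec<u8>> {
    let digits = s.strip_prefix("0x")?;
    // Require ASCII hex digits only (this also rejects '+', which from_str_radix would accept).
    if digits.len() % 2 != 0 || !digits.bytes().all(|b| b.is_ascii_hexdigit()) {
        return None;
    }
    (0..digits.len())
        .step_by(2)
        .map(|i| u8::from_str_radix(&digits[i..i + 2], 16).ok())
        .collect()
}

/// Shape-based guess: 20 decoded bytes *could* be an address, but could equally be bytes20/bytes.
fn could_be_address(s: &str) -> bool {
    hex_to_bytes(s).map_or(false, |b| b.len() == 20)
}

fn main() {
    let s = "0x1234";
    let bytes = hex_to_bytes(s).unwrap();
    println!("{} chars as text, {} bytes as data", s.len(), bytes.len()); // 6 chars as text, 2 bytes as data
}
```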

IIRC, serde_json::Value::Object is a sorted BTreeMap. I think we want sorted keys, and hence sorted encoded tuples, so the order is consistent?

Yup, that sounds reasonable. Just underlining it because it affects the order in which users have to define the attributes in the struct. While they would intuitively order them by some logical grouping or for tight packing, they need to know that they have to list the attributes in alphabetical order for the tuple to be decoded into the struct correctly.

I think this is reasonable; otherwise it will cause problems if you want to parse e.g. a "transaction" JSON, since different nodes may return the keys in a different order in the RPC response.
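A quick illustration of why sorted keys give a stable order: a `BTreeMap` iterates in key order regardless of insertion order (serde_json's `Map` is backed by a `BTreeMap` by default, unless its `preserve_order` feature is enabled). `tuple_field_order` is a hypothetical helper, assuming tuple members are emitted in map iteration order as discussed above; the field names are only an example.

```rust
use std::collections::BTreeMap;

/// Hypothetical helper: the order in which a BTreeMap-backed object yields its keys.
/// If tuple members follow this order, the Solidity struct must declare its fields alphabetically.
fn tuple_field_order(keys_as_written: &[&str]) -> Vec<String> {
    let map: BTreeMap<String, usize> = keys_as_written
        .iter()
        .enumerate()
        .map(|(i, k)| (k.to_string(), i))
        .collect();
    map.into_keys().collect()
}

fn main() {
    // Same keys in two different orders, as two nodes might return them.
    let node_a = ["to", "from", "value", "nonce"];
    let node_b = ["nonce", "value", "from", "to"];
    println!("{:?}", tuple_field_order(&node_a)); // ["from", "nonce", "to", "value"]
    assert_eq!(tuple_field_order(&node_a), tuple_field_order(&node_b));
}
```

The trade-off is the one raised above: the encoding becomes independent of the JSON's key order, at the cost of forcing alphabetical field order on the struct.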


@mattsse I finally made it work with arbitrarily nested objects. I switched to handling them via Tokens and it became much simpler. I would like your suggestion on:


gakonst left a comment


There's a commented-out test, and writeJSON needs a test; I'm not sure I fully get how it's supposed to be used!

The parseJSON flow looks good.


@gakonst I added a new comment on the issue with a proposed API for writeJson. I haven't found something that I love, so I want to get some alignment before going full steam ahead with the implementation. The one I have implemented right now is only good for writing simple values (not arrays, not structs) at the first "level" of a JSON (so it can't write into a nested object). I proposed a new API, but it's meh. Any idea is more than welcome.

mattsse left a comment


A couple of nits; otherwise, looks good.


Hey, I've been testing this out locally and haven't had any issues parsing JSON files yet. I merged master in and it was fine, apart from one compile issue, fixed here: proximacapital@e6867e1. Is there an ETA? I'll continue building out my JSON usage locally, but I'm struggling to see a practical way (i.e. not compiling from source) to get access to this feature in CI before it merges.


The build is currently failing, but I'll definitely use that flag in the future; for now I'm running off a fork.

mattsse left a comment


General usage is limited by some assumptions and limitations regarding encoding, but it is definitely useful and we should add it.

Needs a rebase, though.

What limitations are these? Also, @odyslam, should this be taken out of draft now?


Tomorrow I will do the following:

Then, I will create a new branch to start working on @mattsse's suggestion for

Sorry for delaying the feature for so long.


SGTM. Let's get this over the line and iterate on the

The branch was force-pushed from b99283e to 2573fc7.

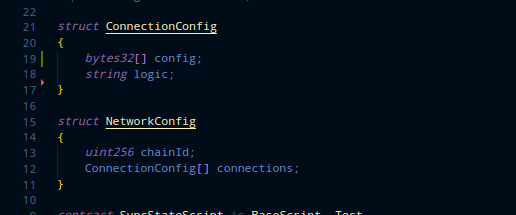

For structs, we currently have no way of knowing what the target struct looks like, so the current assumption is that the Solidity type matches the JSON object 1:1, right @odyslam?


Not only that, but also that the attributes of the struct are defined in alphabetical order.


@mattsse will proceed with

Thanks for the JSON file test fix.


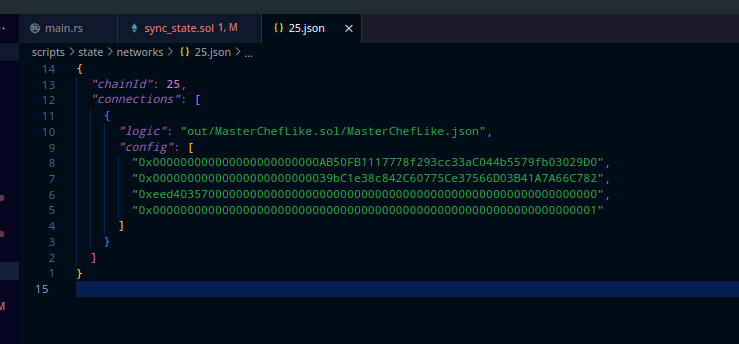

Does this work with


@0xPhaze I am able to parse this:


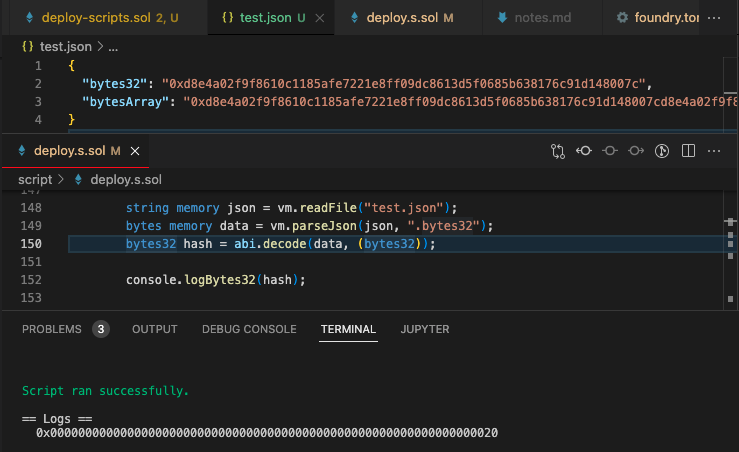

Hey, so currently the above will be returned as type string. I have added a fix for that and will open a PR now; with the fix, they will be returned as type bytes.

* refactor get_env
* feat: test all possible types
* chore: add jsonpath
* feat: parse JSON paths to abi-encoded bytes
* feat: flat nested json into a vec of <Value, abi_type>
* fix: support nested json objects as tuples
* chore: add test for nested object
* feat: function overload to load entire json
* fix: minor improvements
* chore: add comments
* chore: forge fmt
* feat: writeJson(), without tests
* fix: remove commented-out test
* fix: improve error handling
* fix: address Matt's comments
* fix: bool
* chore: remove writeJson code
* fix: cherry pick shenanigan
* chore: format, lint, remove old tests
* fix: cargo clippy
* fix: json file test

Co-authored-by: Matthias Seitz

Motivation

I am opening a draft PR to gather feedback and improve code quality.

Checklist