
tensorboard dev export: If an error occurs, keep exporting the next experiment #3219


base: master



Hi @shawwn! Glad to see you here, and thanks for the PR. :-) If you still have it handy, could you attach the reference code that you


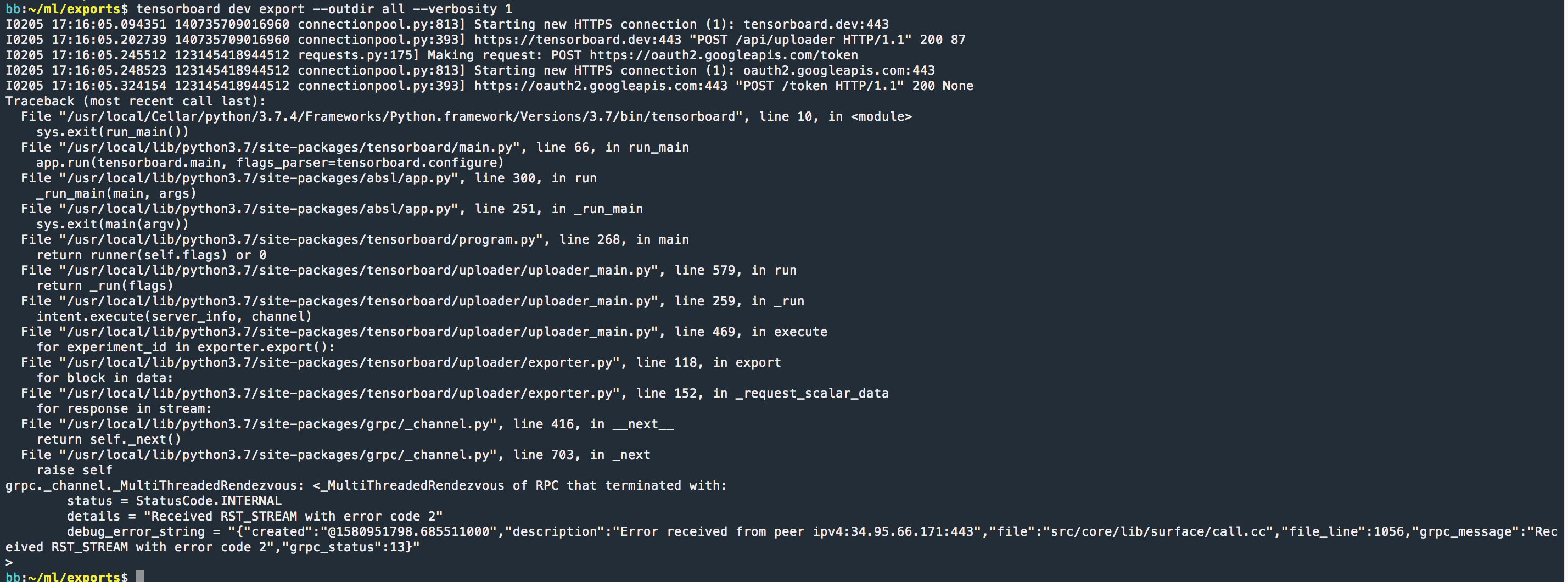

It actually doesn't generate a reference code. The error is:


Interesting. Thanks for the reference. We haven’t seen this before, and

```python
import traceback

# Inside the per-experiment `except` block: print the error instead of aborting.
traceback.print_exc()
```


I’m a little wary of just printing the exception and continuing, because (if I’m reading this correctly) this causes the exporter to emit a partially completed experiment file. Once the export “completes”, the user won’t have any indication of which of their experiments are incomplete, which looks like a data loss bug even though it isn’t one.

One way to handle this would be to collect a list of failed/partial exports and surface failures both immediately and at the end of the export session; I’ll get some input from the team about how we want to surface this.
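The approach suggested above (keep going past a failure, report it immediately, and report all failures again at the end) might look roughly like this minimal sketch. `export_all` and `export_one` are hypothetical names, not TensorBoard's actual exporter API; `export_one` stands in for whatever writes a single experiment's output file.

```python
import sys
import traceback
from typing import Callable, Iterable, List, Tuple


def export_all(
    experiment_ids: Iterable[str],
    export_one: Callable[[str], None],  # hypothetical per-experiment exporter
) -> List[Tuple[str, str]]:
    """Export each experiment, continuing past failures.

    Returns (experiment_id, error summary) for every experiment whose
    export raised; the output files for those may be partial.
    """
    failures: List[Tuple[str, str]] = []
    for experiment_id in experiment_ids:
        try:
            export_one(experiment_id)
        except Exception as e:
            # Surface the failure immediately, with the full traceback on stderr.
            print(
                f"Export of {experiment_id} failed; its output may be incomplete.",
                file=sys.stderr,
            )
            traceback.print_exc()
            failures.append((experiment_id, f"{type(e).__name__}: {e}"))
    # Surface every failure again at the end of the session, so the user
    # knows exactly which experiments are incomplete.
    if failures:
        print(f"{len(failures)} experiment(s) failed to export completely:", file=sys.stderr)
        for experiment_id, summary in failures:
            print(f"  {experiment_id}: {summary}", file=sys.stderr)
    return failures
```

A caller could use a non-empty return value to exit with a nonzero status, so scripts can also tell that the export was only partial.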


Fair! I didn't think it was a very good solution either.

The trouble is, an error when exporting one experiment causes the export process to fail for all experiments. And I seem to have many experiments that cause this error.

One hypothesis: When I interrupt a tensorboard.dev upload, perhaps it generates an experiment which then fails to export.

(If you have access to my account, feel free to try to export the experiments associated with [email protected]. It'll reproduce the error, I think.)


> (If you have access to my account, feel free to try to export the experiments associated with [email protected]. It'll reproduce the error, I think.)

Thanks. I’ve just done so and can indeed reproduce the error. I can also reproduce this when exporting large experiments not written by you, so I don’t think that it’s anything particular to your account. These experiments are different shapes and sizes (lots of runs, long time series, etc.), but one thing that they have in common is that the RST_STREAM failure occurs about 31–32 seconds after process start, so I suspect that there’s simply a 30-second timeout somewhere in the stack. (My understanding was that gRPC was supposed to send RST_STREAM with payload CANCEL (0x08) rather than INTERNAL (0x02) on streaming request timeout, so it’s not clear to me exactly why this is manifesting the way that it is.)
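For reference when reading RST_STREAM failures like this one, the error codes an RST_STREAM frame can carry are defined in RFC 7540, Section 7. The sketch below decodes them; the enum and helper names are illustrative only and are not part of gRPC's API.

```python
from enum import IntEnum


class Http2ErrorCode(IntEnum):
    """HTTP/2 error codes carried in RST_STREAM and GOAWAY frames (RFC 7540, Section 7)."""
    NO_ERROR = 0x0
    PROTOCOL_ERROR = 0x1
    INTERNAL_ERROR = 0x2
    FLOW_CONTROL_ERROR = 0x3
    SETTINGS_TIMEOUT = 0x4
    STREAM_CLOSED = 0x5
    FRAME_SIZE_ERROR = 0x6
    REFUSED_STREAM = 0x7
    CANCEL = 0x8
    COMPRESSION_ERROR = 0x9
    CONNECT_ERROR = 0xA
    ENHANCE_YOUR_CALM = 0xB
    INADEQUATE_SECURITY = 0xC
    HTTP_1_1_REQUIRED = 0xD


def describe_rst_stream(code: int) -> str:
    """Return the RFC 7540 name for an RST_STREAM error code (illustrative helper)."""
    try:
        return Http2ErrorCode(code).name
    except ValueError:
        # Codes outside the table are not defined by RFC 7540.
        return f"UNKNOWN(0x{code:x})"
```

For example, `describe_rst_stream(0x08)` gives `"CANCEL"` and `describe_rst_stream(0x02)` gives `"INTERNAL_ERROR"`, the two payloads contrasted above.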

We’ll keep looking into this next week. We may be able to deploy a short-term server-side patch to increase the timeouts to unblock you, and work on a longer-term solution such that these aren’t limited to a single streaming RPC and thus aren’t subject to timeouts at all.
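One common pattern for not depending on a single long-lived streaming RPC is pagination: the client issues many short requests, each returning one page of data plus a continuation token. The sketch below assumes a hypothetical `fetch_page` callable; it is not the TensorBoard.dev API, only an illustration of the general pattern.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# Hypothetical page fetcher: (experiment_id, page_token) -> (points, next_page_token).
# Each call is a short, independent request, so no single call needs to run
# long enough to hit a server-side deadline such as the suspected 30 seconds.
FetchPage = Callable[[str, Optional[str]], Tuple[List[float], Optional[str]]]


def iter_experiment_points(experiment_id: str, fetch_page: FetchPage) -> Iterator[float]:
    """Yield all points of an experiment by following continuation tokens."""
    page_token: Optional[str] = None
    while True:
        points, page_token = fetch_page(experiment_id, page_token)
        yield from points
        if page_token is None:  # no more pages
            return
```

A side benefit of this design is that a failed page can be retried on its own, from its token, instead of restarting the whole export.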

I’m assuming that you’re able to run this patch locally and partially export your experiments; is that correct? If not (e.g., if you’re having trouble building), let me know, and I can send you a modified wheel with this patch.

(Googlers, see http://b/149120509.)

I was running into an issue where `tensorboard dev export` was failing to export a certain experiment. (It threw an internal error.) This prevented me from exporting most of my experiment data. This PR solves the problem by printing the error rather than aborting.