Closed

Description

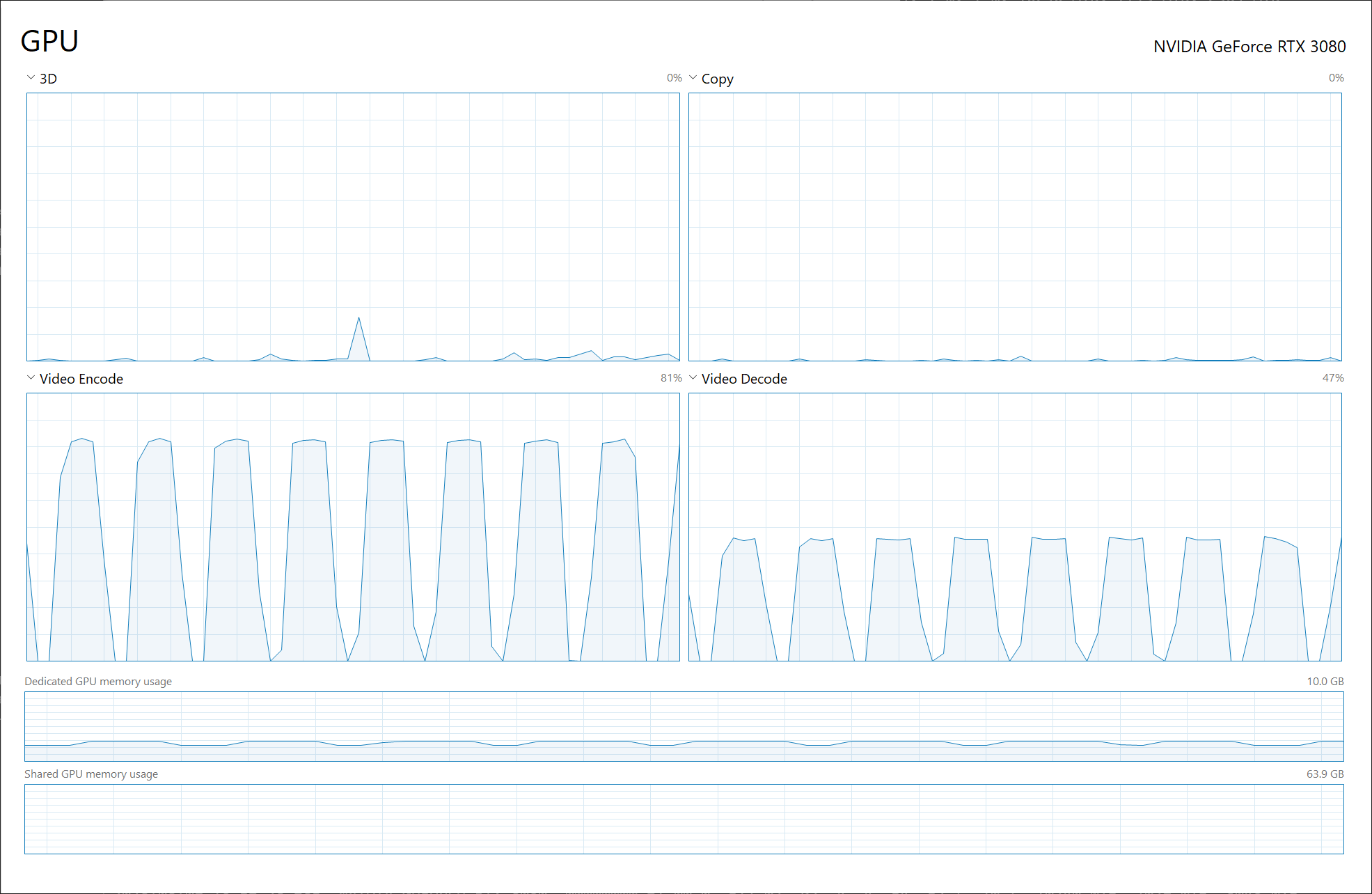

Currently, when performing HW decoding/encoding, the HW contexts are recreated on every invocation.

Looking at the design of AVBufferRef and how it is used for AVCodecContext::hw_device_ctx in hw_decode.c, the contexts should be reusable across different invocations (and perhaps shared between encoding and decoding).
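
As a rough illustration of why reuse looks feasible, here is a toy Python model of reference-counted sharing in the style of AVBufferRef. The class names `SharedBuffer` and `BufferRef` are hypothetical and exist only for this sketch; they only mimic the semantics of FFmpeg's `av_buffer_ref` / `av_buffer_unref` (each reference bumps a counter, and the underlying data is released when the last reference is dropped).

```python
from typing import Optional


class SharedBuffer:
    """Hypothetical stand-in for the data shared behind an AVBufferRef."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.refcount = 0
        self.freed = False


class BufferRef:
    """Hypothetical handle; mimics av_buffer_ref / av_buffer_unref semantics."""

    def __init__(self, buf: SharedBuffer) -> None:
        self.buf: Optional[SharedBuffer] = buf
        buf.refcount += 1

    def ref(self) -> "BufferRef":
        # Create another handle to the same underlying buffer.
        if self.buf is None:
            raise ValueError("reference was already released")
        return BufferRef(self.buf)

    def unref(self) -> None:
        # Drop this handle; the buffer is released with the last handle.
        if self.buf is None:
            return
        self.buf.refcount -= 1
        if self.buf.refcount == 0:
            self.buf.freed = True
        self.buf = None


# One device context kept alive by a long-lived reference,
# while a decoder and an encoder each borrow their own reference.
device = SharedBuffer("cuda:0")
cached_ref = BufferRef(device)
decoder_ref = cached_ref.ref()
encoder_ref = cached_ref.ref()
decoder_ref.unref()
encoder_ref.unref()
```

After the decoder and encoder release their references, the device context is still alive because `cached_ref` holds it. It is only released once that last reference is dropped as well.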

Reusing should reduce the GPU memory usage and initialization time.

Note: Currently, running a GPU decoder and a GPU encoder at the same time consumes about 600 MB of GPU memory.

code

```python
import time
from datetime import datetime

import torchaudio
from torchaudio.io import StreamReader, StreamWriter

# torchaudio.utils.ffmpeg_utils.set_log_level(36)

input = "NASAs_Most_Scientifically_Complex_Space_Observatory_Requires_Precision-MP4.mp4"
output = "foo.mp4"


def test():
    r = StreamReader(input)
    i = r.get_src_stream_info(r.default_video_stream)
    r.add_video_stream(10, decoder="h264_cuvid", hw_accel="cuda:0")

    w = StreamWriter(output)
    w.add_video_stream(
        height=i.height,
        width=i.width,
        frame_rate=i.frame_rate,
        format="yuv444p",
        encoder_format="yuv444p",
        encoder="h264_nvenc",
        hw_accel="cuda:0",
    )

    with w.open():
        num_frames = 0
        for (chunk,) in r.stream():
            num_frames += chunk.size(0)
            w.write_video_chunk(0, chunk)
    return num_frames


total_num_frames = 0
while True:
    t0 = time.monotonic()
    num_frames = test()
    elapsed = time.monotonic() - t0
    total_num_frames += num_frames
    time.sleep(10)
    print(f"{datetime.now()}: {elapsed} [sec], {num_frames} frames ({total_num_frames})")
```

Implementation Idea

- When GPU decoding/encoding is first launched, store a reference to the HW device/frames context in a cache.

- Subsequent calls reuse the cached context.

- There should be a utility to clear the cache (and perhaps one to disable reuse?).
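
The three points above could be sketched roughly as follows. This is only a minimal Python illustration of the caching logic, not the actual torchaudio implementation: the class `HWContextCache`, its methods, and the `create` callback are all hypothetical names, and the real cache would hold references to FFmpeg HW contexts on the C++ side.

```python
import threading
from typing import Callable, Dict, Optional


class HWContextCache:
    """Hypothetical cache of HW contexts, keyed by device string."""

    def __init__(self) -> None:
        self._contexts: Dict[str, object] = {}
        self._lock = threading.Lock()
        self.enabled = True  # set to False to disable reuse

    def get(self, device: str, create: Callable[[str], object]) -> object:
        # With reuse disabled, always build a fresh context.
        if not self.enabled:
            return create(device)
        with self._lock:
            # First launch on this device: create and remember the context.
            if device not in self._contexts:
                self._contexts[device] = create(device)
            # Subsequent calls reuse the cached context.
            return self._contexts[device]

    def clear(self, device: Optional[str] = None) -> None:
        # Drop one cached context, or all of them when no device is given.
        with self._lock:
            if device is None:
                self._contexts.clear()
            else:
                self._contexts.pop(device, None)


# Usage with a fake factory that records how often a context is created.
created = []


def fake_create(device: str) -> object:
    created.append(device)
    return object()


cache = HWContextCache()
first = cache.get("cuda:0", fake_create)
second = cache.get("cuda:0", fake_create)  # served from the cache
```

In this sketch the second call does not invoke `fake_create` again, which is where the saving in initialization time and GPU memory would come from. Whether a single cache can be shared between decoder and encoder is left open here, as it is in the description.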