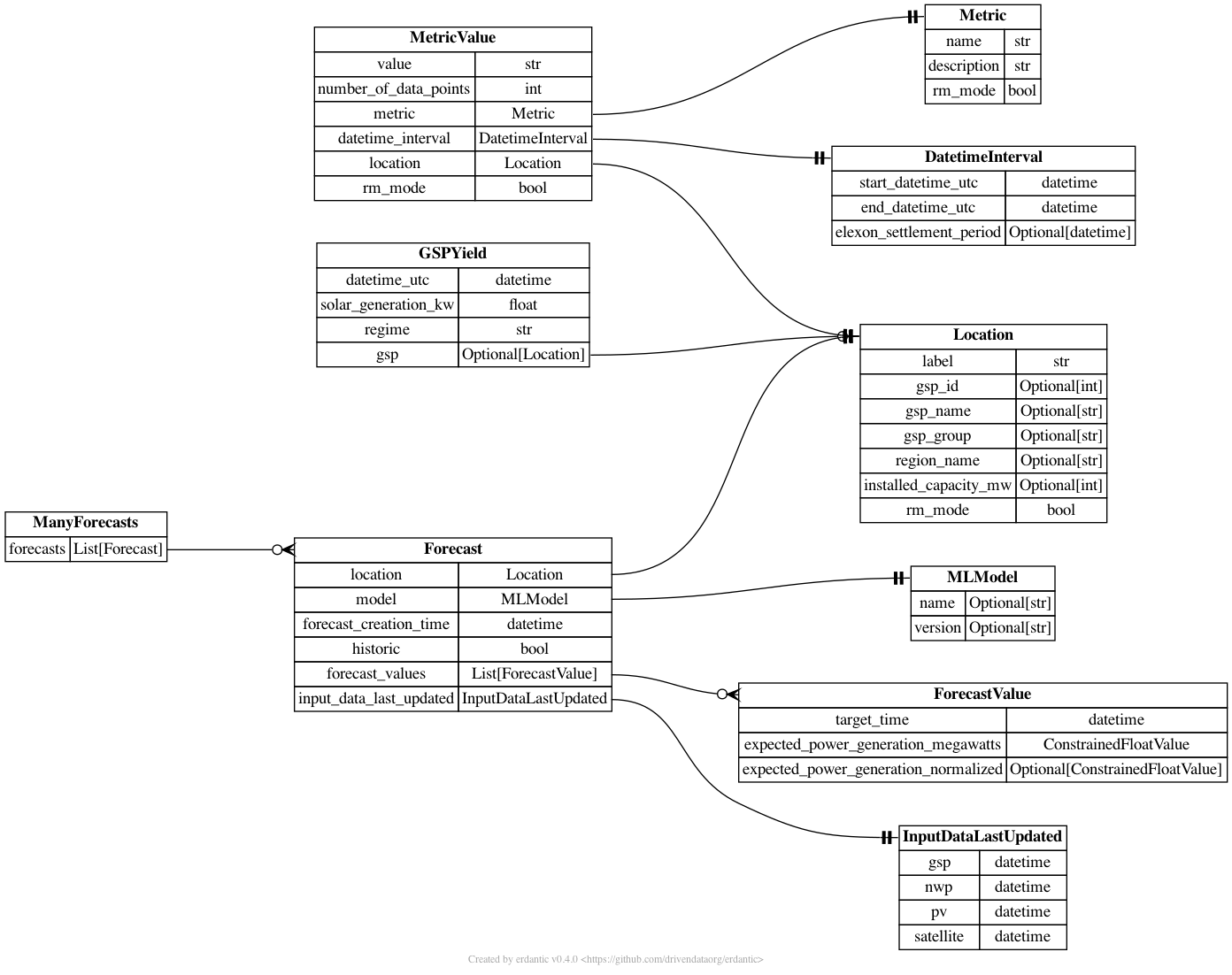

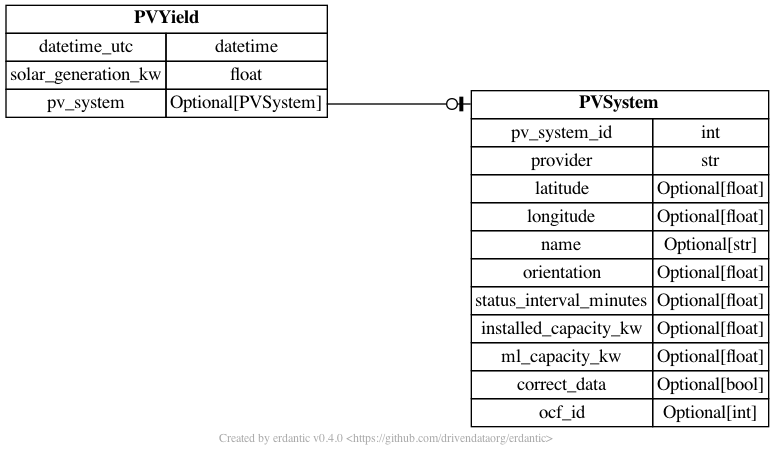

Datamodel for the nowcasting project

The data model is built with SQLAlchemy, with a mirrored model in pydantic.

Future: The data model could be moved to make it a more modular solution.

All models are in nowcasting_datamodel.models.py.

The diagram below shows how the different tables are connected.

nowcasting_datamodel.connection.py contains a connection class which can be used to make a sqlalchemy session.

    from nowcasting_datamodel.connection import DatabaseConnection

    # make connection object
    db_connection = DatabaseConnection(url='sqlite:///test.db')

    # make sessions
    with db_connection.get_session() as session:
        # do something with the database
        pass

nowcasting_datamodel.read.py contains functions to read the database.

These are intended to be easy-to-use functions that query the database efficiently.

- get_latest_forecast: Gets the latest Forecast for a specific GSP.

- get_all_gsp_ids_latest_forecast: Gets the latest Forecast for all GSPs.

- get_forecast_values: Gets the latest ForecastValue for a specific GSP.

- get_latest_national_forecast: Returns the latest national forecast.

- get_location: Gets a Location object.

    from nowcasting_datamodel.connection import DatabaseConnection
    from nowcasting_datamodel.read import get_latest_forecast

    # make connection object
    db_connection = DatabaseConnection(url='sqlite:///test.db')

    # make sessions
    with db_connection.get_session() as session:
        # read the latest forecast for GSP 1
        f = get_latest_forecast(session=session, gsp_id=1)

nowcasting_datamodel.save.py has one function to save a list of Forecast objects to the database.

nowcasting_datamodel.fake.py has a useful function for adding up forecasts for all GSPs into a national Forecast.
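As a rough illustration of what adding up GSP forecasts into a national forecast involves, the sketch below sums per-GSP values by target time using only the standard library. The `GspForecastValue` class, its `power_mw` field, and `sum_to_national` are hypothetical names chosen for this example; they are not the package's API, and the real function may handle more detail.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class GspForecastValue:
    """Hypothetical stand-in for one forecast value of one GSP."""

    gsp_id: int
    target_time: datetime
    power_mw: float


def sum_to_national(values):
    """Sum per-GSP values into one national total per target time."""
    totals = defaultdict(float)
    for value in values:
        totals[value.target_time] += value.power_mw
    # Return the totals ordered by target time
    return dict(sorted(totals.items()))


t0 = datetime(2022, 1, 1, 12, 0)
t1 = datetime(2022, 1, 1, 12, 30)
gsp_values = [
    GspForecastValue(gsp_id=1, target_time=t0, power_mw=1.5),
    GspForecastValue(gsp_id=2, target_time=t0, power_mw=2.5),
    GspForecastValue(gsp_id=1, target_time=t1, power_mw=3.0),
]
national = sum_to_national(gsp_values)
```

Here `national` maps each target time to the sum over all GSPs that have a value for that time.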

nowcasting_datamodel.fake.py contains functions used to make fake model data.

Tests are run using the following commands:

    docker stop $(docker ps -a -q)
    docker-compose -f test-docker-compose.yml build
    docker-compose -f test-docker-compose.yml run tests

This sets up Postgres in one Docker container and runs the tests in another. This slightly more complicated testing framework is needed (compared to running pytest directly) because some queries cannot be fully tested on a SQLite database.

An upstream build issue with libpq may cause the following error:

    sqlalchemy.exc.OperationalError: (psycopg2.OperationalError) SCRAM authentication requires libpq version 10 or above

As suggested in this thread, a temporary fix is to set the environment variable DOCKER_DEFAULT_PLATFORM=linux/amd64 before building the test images, although this reportedly comes with performance penalties.

.github/workflows contains a number of CI actions:

- linters.yaml: Runs linting checks on the code

- release.yaml: Makes and pushes docker files on a new code release

- test-docker.yaml: Runs tests on every push

The docker file is in the folder infrastructure/docker/.

The version is bumped automatically for any push to main.

- DB_URL: The database URL to which the forecasts will be saved
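A minimal sketch of how a script might pick up this setting, assuming it is read from the process environment. `read_db_url` is a hypothetical helper written for this example, not part of the package.

```python
import os


def read_db_url(environ=os.environ):
    """Return DB_URL from the given environment, failing early if it is missing."""
    db_url = environ.get("DB_URL")
    if not db_url:
        raise RuntimeError("DB_URL is not set")
    return db_url


# Example with an explicit mapping instead of the real environment
db_url = read_db_url({"DB_URL": "sqlite:///test.db"})
```

The resulting string can then be passed as the `url` argument of `DatabaseConnection`.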

Thanks goes to these wonderful people (emoji key):

- Brandon Ly 💻

- Chris Lucas 💻

- James Fulton 💻

- Rosheen Naeem 💻

- Henri Dewilde 💻

- Sahil Chhoker 💻

- Abdallah salah 💻

- tmi 💻

- Database Missing no1 💻

This project follows the all-contributors specification. Contributions of any kind welcome!