Make img2img strength 1 behave the same as txt2img #2895

(Note that txt2img technically mixes in all-zeros for its initial latent, which is what we now do for img2img and inpainting when strength is 1.)

damian0815 left a comment

looks good

This is where I have to eat my words about "Inpaint Replace," as you've disproven my hypothesis that adding noise at full strength is effectively equivalent to overwriting the input entirely. This is because no matter how much noise we're adding, it's still a Gaussian distribution we are adding to what was there before, so if the input image is significantly far away from the default case (latent

The proposal you have here is to abruptly change the behavior of the function as soon as the strength hits 1.0. I'm not a fan of the way that introduces a big discontinuity between 0.999 and 1.0.

[Oops, this has been merged before I finished my thoughts. Oh well. Now it's my turn to tell JP "omg something just changed the mechanics of

I'm assuming the use case here is "I want to inpaint the masked area and use only the prompt without any consideration of what was underneath at all." I think the most straightforward way to do that in terms of the tools the canvas already has would be to use the eraser, not the mask layer, setting

In fact, if that works, I think that's an argument against this (now already-merged) proposal, as it makes the two tools equivalent but only under a specific condition, yet not under things that are nearly-but-not-quite that condition.

If we need more finesse over this control, I can imagine experimenting with alternatives like nudging the noise distribution so that when do

(There would be some difference, because the eraser tool operates in pixel-space and the img2img noising is in latent space, but I don't know if that would be an important difference.)

@keturn I appreciate your comments, so first thanks for taking the time to write all of that. I operated under the assumption (based on what we all discussed) that in UI-land 1.0 would replace inpaint replace, i.e. a complete replacement of the erased region. That's why 0.99 was removed as the upper limit and we simplified the interface to no longer have inpaint replace as an option. I think this is a great UI adjustment, but the problem (as you identified) is that we didn't actually implement inpaint replace at 1.0. That's what I set out to do.

With the new code, img2img with strength 1.0 completely replaces the underlying image with latent noise based on the seed you choose. This now works for img2img, inpainting, masking, you name it. It is a true inpaint replace. Extracting this, or reverting it, and moving it to a "noise" infill method would probably leave people pretty confused. "Why would I want to replace the selected region with noise?" A bigger issue at the moment is that all of the infill methods operate in image space, not latent space. There is no real way to get an infill method to do a true replacement today.

So, if (as you're suggesting) 1.0 is not a complete replacement of the image (img2img) or mask/erased region (inpainting), what does 1.0 actually mean? (And while we're here, I would also argue that we're working with discrete steps and it's a bad UX to offer up strength that isn't directly tied to steps. Why can I set it to 0.98 when I have 30 steps? Do I expect that to look different than 0.97? This is precisely why I didn't want a floating point value as the symmetry point in the UI even if the CLI supports it.)

As for the partial transparency eraser, at least as I understand what you wrote, would that not be equivalent to masking with a reduced strength? If not, would you blend the actual pixels in that region with the selected infill method at the desired transparency level and then use strength to set the schedule and noise? This seems very complicated and I'm not sure how beneficial it would be.

I agree with the intention of this PR. But, can we please not have magic values? If I (as a UI) want to do a true

Let's have a flag to enable this code path, which uses true noise. Don't make the end user have to remember that 1 isn't actually 1. Provide an explicit API and let the UI expose it in a human-friendly way to the end user.

Also, this PR includes a change that causes the bug in #2931

* Fix img2img and inpainting code so a strength of 1 behaves the same as txt2img.
* Make generated images identical to their txt2img counterparts when strength is 1.

So what does "1" mean? If you want a separate UI element, then we really shouldn't allow "1" at all as it holds no meaning, and setting it to "1" should throw an exception as it's not valid.

Once I get some clarification about what "1" means, I can look into that. I suspect we were relying on special behavior from

It doesn't matter what it means (and I won't pretend to really understand what it means on a deep technical level). There are two contexts in which I am disagreeing with this PR.

The first context is general and perhaps philosophical. The change in this PR means a change in a particular dimension (the strength parameter) has a direct effect in some other very different dimension (the logic and code path used). That's a "magic value". It is inherently counterintuitive. This PR is going deep enough that no consumer of the InvokeAI API can reasonably bypass the change. That is exactly the level at which we should not be using magic values. Let the user-facing parts of the code handle that - that is what UI is for. Provide an explicit API so the UI can wrap that up in a bow for the user.

The second context is that of the specific change at hand. Based on the discussion in this thread, it sounds like If

I think of this as the same as linear interpolation, where a value of 0 means starting with "all initial image" and a value of 1 means "all noise". Perhaps that interpretation is wrong. I understand that you are philosophically upset about the discontinuity at 1, and you feel that mathematically it's dishonest to have a value of 1 that no longer includes the underlying latents derived from the image.

Regardless of the math, my interpretation of the user's intent is that they want to replace the image/inpainting region completely with noise and not preserve any characteristics of the underlying image. We removed inpaint replace and at that time set the maximum from 0.99... to 1. Whatever the reason, when we did that, we left no alternative for pure noise generation to replace an area. So that has to go in, IMO, if the particular change to generate pure noise here is undone.

I also think we should think about whether 0.9999 makes sense mathematically when we're using 50, 100, 200 discrete steps. Does the scheduler take that into account? Should we quantize strength based on the number of steps? I don't have a good answer and I didn't dig deeply enough.

The strength is the portion of the diffusion process that runs on the image. time=0 is when the image is pristine, time=1000 is when it's as noisy as it gets. A linear schedule (which is what we usually use) for inference steps=8 looks like this:

There are two ways of talking about this: one is the length of time the image has been diffusing for, the other puts a number on how noisy it is and calls it 𝜎. Those two things are directly correlated and you may see a scheduler talking about one or the other, depending on how the author formulated the problem.

The "strength" is applied in a straightforward manner by taking that fraction of the timesteps: strength=1/4 takes the last quarter of those, steps 7 and 8. strength=7/8 would be steps 2–8. strength=8/8 (aka

The steps at higher time values can be very important to determining the broad features of the composition, so making sure you're including that first step is important if you're using the input to set the average tone of the image but need the composition to come from the prompt.

I agree that this is a pretty crummy interface, as the caller can't be certain if different strength values will produce different results unless they are also attending to the step count and reverse-engineering the math. It also severely limits the precision based on step count. I'd prefer setting
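The step selection described above can be sketched in a few lines. This is only an illustration under assumptions: the function name `steps_for_strength` is hypothetical, steps are numbered 1 (noisiest) through `num_steps` as in the 8-step example, and the step count is truncated toward zero. The actual scheduler code may differ.

```python
def steps_for_strength(num_steps: int, strength: float) -> list[int]:
    """Return the 1-based step numbers that run for a given strength.

    Hypothetical helper: step 1 is the noisiest step, step `num_steps`
    is the last (cleanest) one, and img2img keeps the final
    int(num_steps * strength) steps.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    kept = int(num_steps * strength)  # assumed truncation toward zero
    return list(range(num_steps - kept + 1, num_steps + 1))


print(steps_for_strength(8, 1 / 4))  # the last quarter: steps 7 and 8
print(steps_for_strength(8, 7 / 8))  # steps 2 through 8
print(steps_for_strength(8, 1.0))    # every step, including the first
```

Only at strength 8/8 is step 1, the highest-noise step, included.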

To clarify, we allow a floating point value of 0.9 or 0.99, but that ultimately gets quantized/mapped onto a specific number of steps. 0.9999 is the same as 0.9 is the same as 0.875 in your example above with 8 steps, which means that 7/8 of the steps will be noised. 0.874 gets 6/8. Quick testing shows that to be the case, with no difference in output images for those first 3 values and a big jump with 0.874. This means that we're already presenting it to the user as something it isn't, and I don't like that, either. A good compromise that doesn't affect the UX at all would be to have some kind of interface hint that does the quick calculation to tell the user that their run will be for
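The quantization described here, and the kind of interface hint being suggested, might look roughly like this. It is a sketch, not the project's code: `effective_steps` and `strength_hint` are hypothetical names, and the truncating `int(...)` is an assumption chosen because it reproduces the numbers quoted above.

```python
def effective_steps(strength: float, num_steps: int) -> int:
    """Number of steps that actually run (assumed: truncate toward zero)."""
    return min(int(num_steps * strength), num_steps)


def strength_hint(strength: float, num_steps: int) -> str:
    """Hypothetical UI hint showing what a strength value really maps to."""
    kept = effective_steps(strength, num_steps)
    return f"{kept} of {num_steps} steps (effective strength {kept / num_steps:.3f})"


for s in (0.9999, 0.9, 0.875, 0.874):
    print(s, "->", strength_hint(s, 8))
```

With 8 steps, the first three strengths all land on 7 steps and 0.874 drops to 6, which matches the jump in output images reported in the quick test.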

So, does that mean that we should use pure noise or let it be influenced in some way by the init_image?

Thanks for the explanation y'all. If I understand correctly, here is what I would like to see:

(The internals of the UI need to know how many true steps are used (because some samplers do not use the number of steps selected directly) in order to dynamically change the scale of the img2img steps.)

(I would also love to expose more of the scheduling to the user but that's a different topic)

Let's not think of "strength" as the "traditional" or "established" way to interface with step selection. This is a brand new, rapidly evolving technology, and the current API or parameter set was decided by a bunch of mathematicians who made some debatable decisions on how to allow people to use the system. We can improve this and help to set a better precedent.

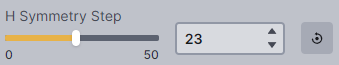

Sure, that could work to replace what's there now - like what the UI currently does for symmetry: I don't know if the UI supports it, but it might be a good transitional interface to have both steps and strength labeled.

Correct. If 8 of 8 steps is pure noise (and thus a strength of 1.0 is pure noise), then that should yield the same image as txt2img. That's what set me off down this path in the first place. If it's not pure noise, then a value of 1.0 or

@keturn I'm not sure that the underlying math syncs up with your explanation. Maybe it does. Take a look at this line:

In the case of

If we set a strength of 0.01 (the lowest I can set in the UI), we get

Any guidance?

The UI can support anything; we have full control. Let's not let the UI direct the backend. Very little of SD translates easily to non-technical language. Clearly, "strength" is not a good translation, and sounds to me like an ultimately unsuccessful attempt to abstract some complicated math to something a normal human can understand. But again this is a job for a UI, not a technical API. Admittedly there are a few levels below this PR, but this is the lowest-level API we offer, so IMO it should not abstract things like that.

When the user picks a strength of 1 for img2img or inpainting, the current code slightly mixes in the initial image with random noise. Strength 1 should be a complete replacement and should yield the same results as txt2img in the img2img case.
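To see why noising at full strength can still leave a trace of the initial image, here is a rough sketch. It assumes a DDPM-style forward process, x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * noise, and a "scaled linear" beta schedule with 1000 training timesteps, `beta_start=0.00085` and `beta_end=0.012`. Those schedule values and the helper name `final_step_coefficients` are assumptions for illustration; they are not stated in this thread, and samplers formulated in terms of 𝜎 scale things differently.

```python
import math


def final_step_coefficients(
    num_train_timesteps: int = 1000,
    beta_start: float = 0.00085,  # assumed schedule value
    beta_end: float = 0.012,      # assumed schedule value
) -> tuple[float, float]:
    """Return (signal, noise) weights at the last (noisiest) timestep."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    alpha_bar = 1.0
    for i in range(num_train_timesteps):
        # "Scaled linear": interpolate linearly in sqrt(beta), then square.
        beta = (lo + (hi - lo) * i / (num_train_timesteps - 1)) ** 2
        alpha_bar *= 1.0 - beta
    return math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)


signal, noise = final_step_coefficients()
print(f"weight on init latents: {signal:.4f}")
print(f"weight on random noise: {noise:.4f}")
```

Under these assumptions the weight on the initial latents at the last timestep is small but not zero, which is consistent with the "slightly mixes in" behavior described above. Using all-zero initial latents at strength 1 removes that residual term.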