-

Notifications

You must be signed in to change notification settings - Fork 18.4k

Description

What version of Go are you using (go version)?

$ go version

go version go1.14.2 darwin/amd64

Does this issue reproduce with the latest release?

Yes.

What operating system and processor architecture are you using?

- macOS 10.14.6 (Darwin Kernel Version 18.7.0 x86_64) with 2.8 GHz Intel Core i7 (4 cores w/ Hyper-Threading for 8 CPUs)

- CentOS Linux release 7.4.1708 (Core) (Linux 3.10.0-693.21.1.el7.x86_64) with Intel(R) Xeon(R) CPU E5-2660 v4 @ 2.00GHz (28 cores w/ Hyper-Threading for 56 CPUs)

What did you do?

Run the following test built with Go 1.13.9 and Go 1.14.2:

package timertest

import (

"context"

"fmt"

"runtime"

"runtime/trace"

"strconv"

"sync"

"testing"

"time"

)

func TestTimerScheduling(t *testing.T) {

const (

highFreq = time.Millisecond

highDur = highFreq / 10

highGrace = highFreq * 4

lowFreq = time.Second

lowDur = lowFreq / 50

lowGrace = lowFreq * 2

)

gmp := runtime.GOMAXPROCS(0)

testCases := []struct {

name string

doHighFreq bool

doLowFreq bool

}{

{"lowFreq", false, true},

{"highFreq", true, false},

{"mixedFreq", true, true},

}

for _, tc := range testCases {

t.Run(tc.name, func(t *testing.T) {

ctx, cancel := context.WithTimeout(context.Background(), 5*time.Second)

defer cancel()

var wg sync.WaitGroup

for i := 0; i < gmp; i++ {

if tc.doHighFreq {

wg.Add(1)

go func(i int) {

defer wg.Done()

timedWork(ctx, t, fmt.Sprintf("highFreq-%d", i), highFreq, highDur, highGrace)

}(i)

}

if tc.doLowFreq && i < gmp/4 {

wg.Add(1)

go func(i int) {

defer wg.Done()

timedWork(ctx, t, fmt.Sprintf("lowFreq-%d", 0), lowFreq, lowDur, lowGrace)

}(i)

}

}

wg.Wait()

})

}

}

func timedWork(ctx context.Context, t *testing.T, name string, interval, dur, grace time.Duration) {

timer := time.NewTimer(interval)

defer timer.Stop()

now := time.Now()

expectedWakeup := now.Add(interval)

deadline := now.Add(grace)

overdueCount := 0

var latest time.Duration

done := ctx.Done()

for {

runCtx, task := trace.NewTask(ctx, name)

trace.Log(runCtx, "expectedWakeup", expectedWakeup.String())

trace.Log(runCtx, "deadline", deadline.String())

sleepRgn := trace.StartRegion(runCtx, "sleep")

select {

case <-timer.C:

sleepRgn.End()

if overdue := time.Since(deadline); overdue > 0 {

trace.Log(runCtx, "sleepOverdueMicros", strconv.FormatInt(int64(overdue/time.Microsecond), 10))

overdueCount++

if overdue > latest {

latest = overdue

}

}

sleepDur := time.Until(expectedWakeup)

for sleepDur <= 0 {

workRgn := trace.StartRegion(runCtx, "doWork")

doWork(dur)

workRgn.End()

expectedWakeup = expectedWakeup.Add(interval)

deadline = deadline.Add(interval)

sleepDur = time.Until(expectedWakeup)

}

timer.Reset(sleepDur)

task.End()

case <-done:

sleepRgn.End()

if overdueCount > 0 {

t.Errorf("%s: wakeup overdue %v times, latest: %v", name, overdueCount, latest)

}

task.End()

return

}

}

}

func doWork(dur time.Duration) {

start := time.Now()

for time.Since(start) < dur {

}

}What did you expect to see?

All tests pass on both versions of Go.

What did you see instead?

On the 56 CPU Linux server with nothing else of significance running:

$ GOMAXPROCS=8 ./go1.13-late-timer-test.test -test.v

=== RUN TestTimerScheduling

=== RUN TestTimerScheduling/lowFreq

=== RUN TestTimerScheduling/highFreq

=== RUN TestTimerScheduling/mixedFreq

--- PASS: TestTimerScheduling (15.02s)

--- PASS: TestTimerScheduling/lowFreq (5.00s)

--- PASS: TestTimerScheduling/highFreq (5.00s)

--- PASS: TestTimerScheduling/mixedFreq (5.02s)

PASS

$ GOMAXPROCS=8 ./go1.14-late-timer-test.test -test.v

=== RUN TestTimerScheduling

=== RUN TestTimerScheduling/lowFreq

=== RUN TestTimerScheduling/highFreq

=== RUN TestTimerScheduling/mixedFreq

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-5: wakeup overdue 1 times, latest: 5.560066ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-7: wakeup overdue 1 times, latest: 10.296257ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-0: wakeup overdue 1 times, latest: 7.205679ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-6: wakeup overdue 1 times, latest: 10.195891ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-1: wakeup overdue 2 times, latest: 5.554398ms

--- FAIL: TestTimerScheduling (15.02s)

--- PASS: TestTimerScheduling/lowFreq (5.02s)

--- PASS: TestTimerScheduling/highFreq (5.00s)

--- FAIL: TestTimerScheduling/mixedFreq (5.00s)

FAIL

I get similar results on macOS although we don't deploy the application on that platform.

$ go1.13.9 test -v

=== RUN TestTimerScheduling

=== RUN TestTimerScheduling/lowFreq

=== RUN TestTimerScheduling/highFreq

=== RUN TestTimerScheduling/mixedFreq

--- PASS: TestTimerScheduling (15.05s)

--- PASS: TestTimerScheduling/lowFreq (5.03s)

--- PASS: TestTimerScheduling/highFreq (5.00s)

--- PASS: TestTimerScheduling/mixedFreq (5.02s)

PASS

ok late-timer-test 15.052s

$ go test -v

=== RUN TestTimerScheduling

=== RUN TestTimerScheduling/lowFreq

=== RUN TestTimerScheduling/highFreq

=== RUN TestTimerScheduling/mixedFreq

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-5: wakeup overdue 1 times, latest: 3.310788ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-7: wakeup overdue 2 times, latest: 9.31654ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-4: wakeup overdue 3 times, latest: 9.350977ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-6: wakeup overdue 3 times, latest: 10.29899ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-2: wakeup overdue 1 times, latest: 10.362095ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-1: wakeup overdue 2 times, latest: 9.594361ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-0: wakeup overdue 2 times, latest: 9.607532ms

--- FAIL: TestTimerScheduling (15.04s)

--- PASS: TestTimerScheduling/lowFreq (5.02s)

--- PASS: TestTimerScheduling/highFreq (5.00s)

--- FAIL: TestTimerScheduling/mixedFreq (5.02s)

FAIL

Additional Background

I initially discovered this problem while testing a Go 1.14 build of our application to see if we could upgrade to the newest version of Go on the project. This is the same application described in #28808 and in my Gophercon 2019 talk: Death by 3,000 Timers. Unfortunately I discovered that this regression in Go 1.14 forces us to stay with Go 1.13 for that application.

Note that our application can tolerate late wake ups as long as they are not too late. The grace period of four intervals on the high frequency timer implements that idea. I derived the constants in the test from our real world requirements, rounded off to keep the numbers simple.

The mixedFreq test case above best models our application's work load. It spends most of its time sending UDP packets on the network at a target bitrate (the high frequency work) but must also perform some heavier tasks to prepare each new stream of UDP packets (the low frequency work).

I added the test cases with homogenous workloads to verify that the issue isn't a fundamental performance issue, but rather an emergent behavior of the scheduler.

Analysis

As you can see, the test code above uses runtime/trace annotations. We have similar annotations in our production code. Analyzing execution traces from either source leads to the same conclusion. The runtime scheduler in Go 1.14 introduces unnecessary timer latency for this kind of application by not stealing timers from non-preemptable P's as described in the following code comment.

Lines 2164 to 2171 in 96745b9

| // Consider stealing timers from p2. | |

| // This call to checkTimers is the only place where | |

| // we hold a lock on a different P's timers. | |

| // Lock contention can be a problem here, so avoid | |

| // grabbing the lock if p2 is running and not marked | |

| // for preemption. If p2 is running and not being | |

| // preempted we assume it will handle its own timers. | |

| if i > 2 && shouldStealTimers(p2) { |

Evidence

Here is an execution trace from the Linux host:

$ GOMAXPROCS=8 ./go1.14-late-timer-test.test -test.v -test.trace go1.14.trace -test.run=/mixedFreq

=== RUN TestTimerScheduling

=== RUN TestTimerScheduling/mixedFreq

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-5: wakeup overdue 2 times, latest: 8.667704ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-7: wakeup overdue 1 times, latest: 2.17004ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-6: wakeup overdue 1 times, latest: 1.411159ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-2: wakeup overdue 2 times, latest: 2.403263ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-1: wakeup overdue 1 times, latest: 6.429298ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-0: wakeup overdue 2 times, latest: 11.728868ms

TestTimerScheduling/mixedFreq: late-timer-test_test.go:114: highFreq-4: wakeup overdue 1 times, latest: 11.563101ms

--- FAIL: TestTimerScheduling (5.00s)

--- FAIL: TestTimerScheduling/mixedFreq (5.00s)

FAIL

If you open the tracer UI for the trace and look at the "User-defined tasks" page you see that most of the timings for the highFreq work fall in the two buckets straddling the 1ms frequency it runs at, but there are a small number of outliers on the high side as seen below.

If we drill into the outlier in the 15ms bucket we see this trace log that shows the task did not wake up until more than 11ms after it's deadline, which is more than 14ms past when the timer was set to fire. After it finally woke up it had to do a lot of work to make up for the missed events. In our real application we cannot afford to miss more than four events like this. Go 1.13 achieves that goal far more consistently, as we will see later.

When Elapsed Goroutine ID Events

4.006214439s 16.86265ms Task 31866 (complete)

4.006214439 . 9 task highFreq-0 (id 31866, parent 0) created

4.006225415 . 10976 9 expectedWakeup=2020-05-04 18:46:13.026124086 +0000 UTC m=+4.009840522

4.006231304 . 5889 9 deadline=2020-05-04 18:46:13.029124086 +0000 UTC m=+4.012840522

4.006234152 . 2848 9 region sleep started (duration: 15.045051ms)

4.021282883 . 15048731 9 sleepOverdueMicros=11728

4.021285795 . 2912 9 region doWork started (duration: 103.362µs)

4.021391909 . 106114 9 region doWork started (duration: 102.722µs)

4.021497255 . 105346 9 region doWork started (duration: 102.753µs)

4.021602600 . 105345 9 region doWork started (duration: 102.658µs)

4.021707818 . 105218 9 region doWork started (duration: 102.69µs)

4.021813100 . 105282 9 region doWork started (duration: 102.722µs)

4.021918446 . 105346 9 region doWork started (duration: 102.593µs)

4.022023663 . 105217 9 region doWork started (duration: 102.69µs)

4.022128913 . 105250 9 region doWork started (duration: 102.626µs)

4.022234291 . 105378 9 region doWork started (duration: 102.722µs)

4.022339733 . 105442 9 region doWork started (duration: 103.041µs)

4.022445398 . 105665 9 region doWork started (duration: 102.818µs)

4.022550840 . 105442 9 region doWork started (duration: 102.658µs)

4.022656090 . 105250 9 region doWork started (duration: 102.786µs)

4.022761500 . 105410 9 region doWork started (duration: 102.657µs)

4.022866717 . 105217 9 region doWork started (duration: 102.498µs)

4.022971551 . 104834 9 region doWork started (duration: 102.658µs)

4.023077089 . 105538 9 task end

GC:0s

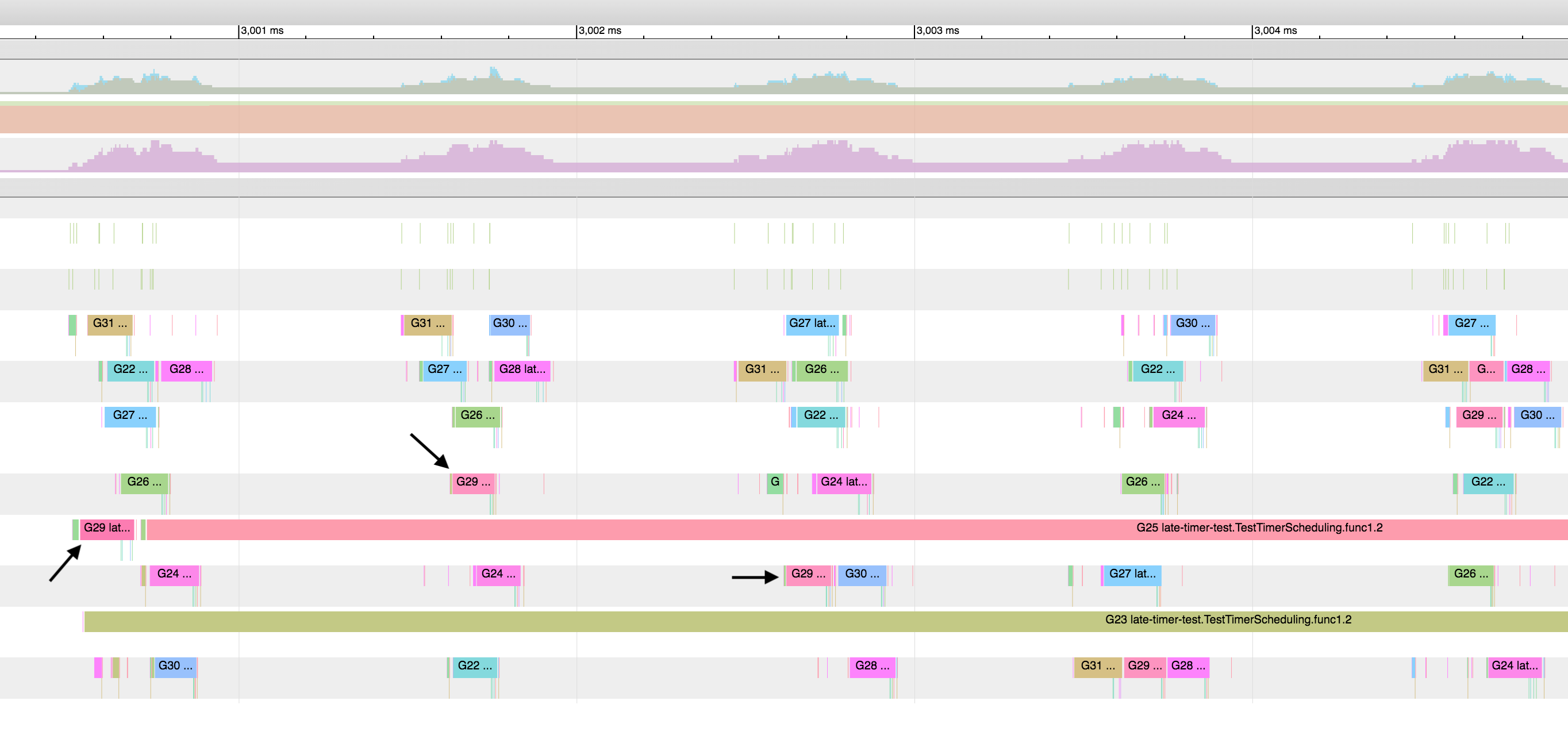

From the above trace it is useful to take note of the goroutine ID (9) and that the sleep started at 4.006234152 seconds into the trace. Opening the "View trace" page from the trace UI home page and navigating to that time we can see what happened in the program to cause the timer latency and that the scheduler missed many opportunities to do a better job.

(click the image for full resolution)

In the above screen shot G9 goes to sleep just before G10 is scheduled on the same P. G10 monopolizes the P for several milliseconds before yielding and only then does the scheduler check the timers and schedule G9 again, albeit very late.

The arrow labeled "another late wakeup" pointing to G11 shows another example. In this case G12 runs long enough to get preempted by the sysmon thread and allow G11 time to run, also late. But it also shows that another P steals G12 almost immediately to complete its processing.

Throughout this time there are plenty of idle P's available to do work, but the scheduler rules prevent them them from stealing the available expired timers.

Comparison to Go 1.13

Here is a trace file using the Go 1.13 build and collected the same way.

The task histograms do not have the same outliers. It is also noteworthy that all of the highFreq goroutines performed exactly 5,000 sleeps during the 5 second trace, an indication that the inner loop never had perform any catch up iterations.

The trace timeline shows goroutines sleeping just before a longer task but getting picked up by a different P on schedule.

(click the image for full resolution)

Summary

The new timer implementation in Go 1.14 does a great job reducing the overhead of servicing the timer queues, but the decision not to steal timers from P's not marked for preemption introduces opportunities for timer latencies of several milliseconds even when there are idle P's available to do the work.

We would like the runtime to give goroutines waiting on an expired timer the same priority for work stealing as runnable goroutines already in the run queue. Prior versions of Go exhibited that property because the timer queue was serviced by a goroutine on par with all other goroutines.

Also, could this behavior be part of what's going on in #36298?