Description

I built llama.cpp using CMake and then Visual Studio. I'm pretty new to this, so it took many trials and tribulations, but I finally got it working.

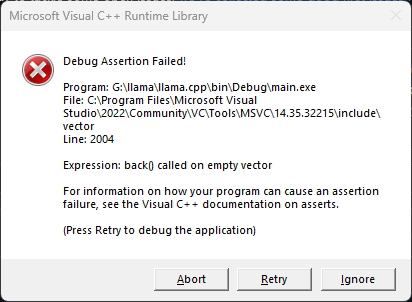

Using the 7B model, the outputs are reasonable. But when I add the -i flag, it runs, I hit Ctrl+C, and it lets me enter text; when I then hit Enter, an error pops up in a window (see the screenshot).

I'm running this on my Windows machine, though I have been using WSL to get some things to work.

Here's an example of it failing:

`(base) PS G:\llama\llama.cpp> .\bin\Debug\main.exe -m ..\LLaMA\7B\ggml-model-q4_0.bin -i -n 124 -t 24

main: seed = 1680110536

llama_model_load: loading model from '..\LLaMA\7B\ggml-model-q4_0.bin' - please wait ...

llama_model_load: n_vocab = 32000

llama_model_load: n_ctx = 512

llama_model_load: n_embd = 4096

llama_model_load: n_mult = 256

llama_model_load: n_head = 32

llama_model_load: n_layer = 32

llama_model_load: n_rot = 128

llama_model_load: f16 = 2

llama_model_load: n_ff = 11008

llama_model_load: n_parts = 1

llama_model_load: type = 1

llama_model_load: ggml ctx size = 4273.34 MB

llama_model_load: mem required = 6065.34 MB (+ 1026.00 MB per state)

llama_model_load: loading model part 1/1 from '..\LLaMA\7B\ggml-model-q4_0.bin'

llama_model_load: .................................... done

llama_model_load: model size = 4017.27 MB / num tensors = 291

llama_init_from_file: kv self size = 256.00 MB

system_info: n_threads = 24 / 24 | AVX = 1 | AVX2 = 1 | AVX512 = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | VSX = 0 |

main: interactive mode on.

sampling: temp = 0.800000, top_k = 40, top_p = 0.950000, repeat_last_n = 64, repeat_penalty = 1.100000

generate: n_ctx = 512, n_batch = 8, n_predict = 124, n_keep = 0

== Running in interactive mode. ==

- Press Ctrl+C to interject at any time.

- Press Return to return control to LLaMa.

- If you want to submit another line, end your input in '\'.

2016 has been a big year for Uber. The ride-sharing app has

Some inputted text

(base) PS G:\llama\llama.cpp>`