

Description

System information

Geth version: v1.10.1

OS & Version: Linux

Expected behaviour

After the geth node has synced, it should be able to serve incoming eth_calls without any issues.

Actual behaviour

The geth node frequently OOMs as soon as it starts serving eth_calls: execution takes a very long time and eventually times out. The node is killed immediately when it OOMs, with memory usage spiking from 1 GB to 10-12 GB within seconds. This behaviour was not observed in earlier releases (1.9.25 and earlier).

Steps to reproduce the behaviour

Run geth with the following flags:

```
--v5disc
--http
--http.addr=0.0.0.0
--http.corsdomain=*
--http.vhosts=*
--ws
--ws.addr=0.0.0.0
--ws.origins=*
--datadir=/ethereum
--keystore=/keystore
--verbosity=3
--light.serve=50
--metrics
--ethstats=/ethstats_secret
--goerli
--snapshot=false
--txlookuplimit=0
--cache.preimages
--rpc.allow-unprotected-txs
--pprof
```

We have reverted all the major changes introduced in v1.10, but it hasn't made a difference.

Backtrace

```
INFO [03-24|11:39:23.079] Loaded local transaction journal transactions=3130 dropped=0
INFO [03-24|11:39:23.092] Regenerated local transaction journal transactions=3130 accounts=536
WARN [03-24|11:39:23.092] Switch sync mode from fast sync to full sync
INFO [03-24|11:39:23.140] Unprotected transactions allowed
WARN [03-24|11:39:23.141] Old unclean shutdowns found count=141
WARN [03-24|11:39:23.141] Unclean shutdown detected booted=2021-03-24T10:59:08+0000 age=40m15s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:04:52+0000 age=34m31s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:10:32+0000 age=28m51s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:14:56+0000 age=24m27s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:18:12+0000 age=21m11s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:21:23+0000 age=18m
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:23:03+0000 age=16m20s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:24:49+0000 age=14m34s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:27:13+0000 age=12m10s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:31:47+0000 age=7m36s
WARN [03-24|11:39:23.142] Unclean shutdown detected booted=2021-03-24T11:35:22+0000 age=4m1s
INFO [03-24|11:39:23.142] Allocated cache and file handles database=/ethereum/geth/les.server cache=16.00MiB handles=16
INFO [03-24|11:39:23.174] Configured checkpoint oracle address=0x18CA0E045F0D772a851BC7e48357Bcaab0a0795D signers=5 threshold=2
INFO [03-24|11:39:23.185] Loaded latest checkpoint section=136 head="cb1485…e62327" chtroot="4fb6c4…a2b521" bloomroot="443066…099a79"
INFO [03-24|11:39:23.185] Starting peer-to-peer node instance=Geth/v1.10.1-stable-c2d2f4ed/linux-amd64/go1.16
INFO [03-24|11:39:23.276] New local node record seq=470 id=xxxxxxxxxxxx ip=127.0.0.1 udp=30303 tcp=30303
INFO [03-24|11:39:23.305] Started P2P networking self=enode://[email protected]:30303
INFO [03-24|11:39:23.306] IPC endpoint opened url=/ethereum/geth.ipc
INFO [03-24|11:39:23.308] HTTP server started endpoint=[::]:8545 prefix= cors=* vhosts=*
INFO [03-24|11:39:23.308] WebSocket enabled url=ws://[::]:8546
INFO [03-24|11:39:23.308] Stats daemon started
INFO [03-24|11:39:33.721] Looking for peers peercount=1 tried=35 static=0
INFO [03-24|11:39:37.922] Block synchronisation started
INFO [03-24|11:39:38.849] New local node record seq=471 id=xxxxxxx ip=xxxxxxxxx udp=xxxxx tcp=30303
WARN [03-24|11:39:39.683] Served eth_call conn=127.0.0.1:49070 reqid=107580 t=6.568050388s err="execution aborted (timeout = 5s)"
WARN [03-24|11:39:43.317] Served eth_call conn=127.0.0.1:53254 reqid=1 t=10.147648933s err="execution aborted (timeout = 5s)"
INFO [03-24|11:39:43.987] Looking for peers peercount=1 tried=24 static=0
WARN [03-24|11:39:46.515] Served eth_call conn=127.0.0.1:35870 reqid=1 t="559.32µs" err="execution reverted"
WARN [03-24|11:39:46.622] Served eth_call conn=127.0.0.1:60854 reqid=1 t="479.825µs" err="execution reverted"
INFO [03-24|11:39:46.683] Submitted transaction hash=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx from=xxxxxxxxxxxxxxxxxxxxx nonce=xxxx recipient=xxxxxxxxxxxxxxxxxx value=0
WARN [03-24|11:39:47.966] Served eth_call conn=127.0.0.1:42870 reqid=290669 t=10.325244195s err="execution aborted (timeout = 5s)"
WARN [03-24|11:39:48.460] Failed to retrieve stats server message err="websocket: close 1006 (abnormal closure): unexpected EOF"
WARN [03-24|11:39:51.966] Served eth_estimateGas conn=127.0.0.1:60412 reqid=3971546 t=5.387361441s err="execution aborted (timeout = 0s)"
WARN [03-24|11:39:54.181] Full stats report failed err="use of closed network connection"
```

These are the last log lines before the node is killed due to an OOM. Restarting doesn't help: the node goes through the same process again and is killed within a few minutes while serving an eth_call. We expect our geth node to use more memory than usual because it serves public RPC requests, but we have not run into this before, which is why we opened this issue.

This is the heap profile of geth right before it gets killed.

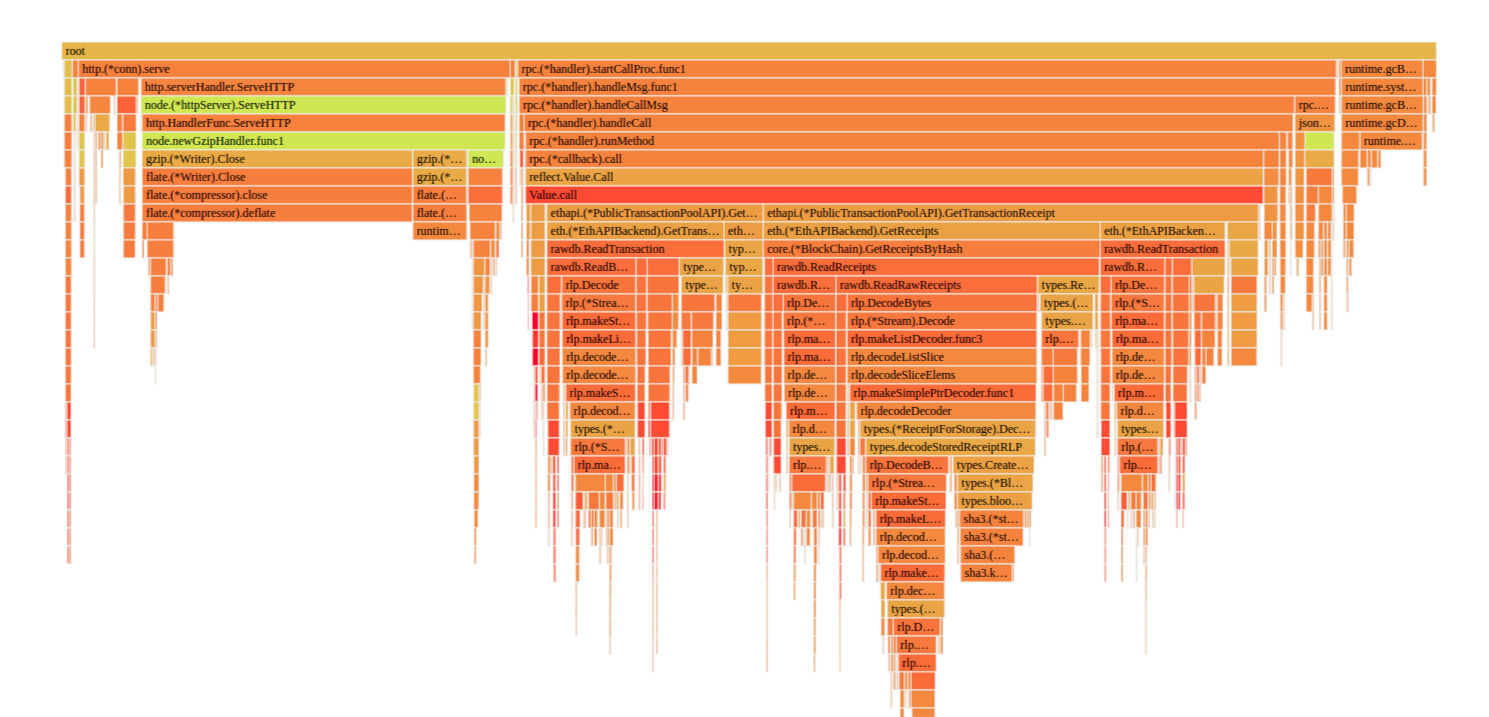

This is the CPU profile right before it gets killed.

Both profiles suggest that serving these RPC requests puts the node under heavy load, and that the large increase in memory usage comes from encoding the responses to those requests.

Here are the raw profiles, in case they help you debug this further: