-

Notifications

You must be signed in to change notification settings - Fork 29k

[SPARK-26601][SQL] Make broadcast-exchange thread pool configurable #23670

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test build #101752 has finished for PR 23670 at commit

|

|

@viirya @maropu @HyukjinKwon |

| .createWithDefault(1000) | ||

|

|

||

| val BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD = | ||

| buildStaticConf("spark.sql.broadcastExchange.maxThreadNumber") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

spark.sql.broadcastExchange.maxThreadThreshold

| // for other test | ||

| SparkSession.getActiveSession.get.sparkContext.conf. | ||

| set(StaticSQLConf.BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD, previousNumber) | ||

| } |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We need this test? In the other similar prs (e.g., https://github.com/apache/spark/pull/22847/files), we added no test. If necessary, we can add tests in ExecutorSideSQLConfSuite to check if executors can reference this static value...

|

Test build #101755 has finished for PR 23670 at commit

|

|

Hi, @caneGuy . |

|

Thanks @dongjoon-hyun @maropu i remove the suite.since i think it's no need to test that. |

|

Test build #102387 has finished for PR 23670 at commit

|

|

|

||

| val BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD = | ||

| buildStaticConf("spark.sql.broadcastExchange.maxThreadThreshold") | ||

| .doc("The maximum degree of parallelism to fetch and broadcast the table." + |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: add a single space: ...table." ->...table. "

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done

| buildStaticConf("spark.sql.broadcastExchange.maxThreadThreshold") | ||

| .doc("The maximum degree of parallelism to fetch and broadcast the table." + | ||

| "If we encounter memory issue like frequently full GC or OOM when broadcast table " + | ||

| "we can decrease this number in order to reduce memory usage." + |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ditto

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done @maropu

|

Test build #102439 has finished for PR 23670 at commit

|

|

Could you help retest this please? @maropu @dongjoon-hyun |

|

Test build #102573 has finished for PR 23670 at commit

|

|

The failed unit tests is below which i think is not related with the pr @dongjoon-hyun @maropu |

|

retest this please. |

|

Test build #102908 has finished for PR 23670 at commit

|

|

Sorry for bothering @viirya but the failed case i think is still not related with this pr. |

|

retest this please. |

|

Test build #103120 has finished for PR 23670 at commit

|

|

retest this please |

|

Test build #103129 has finished for PR 23670 at commit

|

|

@dilipbiswal I have resolve the conflict,please help check this review,thanks |

|

Test build #103973 has finished for PR 23670 at commit

|

done @dongjoon-hyun thanks! |

|

Test build #104495 has finished for PR 23670 at commit

|

|

failed case is not caused by this case |

|

retest this please |

| "cause longer waiting for other broadcasting. Also, increasing parallelism may " + | ||

| "cause memory problem.") | ||

| .intConf | ||

| .checkValue(thres => thres > 0, "The threshold should be positive.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If this number cannot be more than 128, let's add that condition.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1 for checking thres <= 128, too.

|

retest this please |

| "cause longer waiting for other broadcasting. Also, increasing parallelism may " + | ||

| "cause memory problem.") | ||

| .intConf | ||

| .checkValue(thres => thres > 0, "The threshold should be positive.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Let's make it .internal() so that we can remove this away if there's anything wrong found with this configuration later.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, I just want to comment like that. I also think this is advanced and should not be exposed to users in general. +1

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

+1.

|

LGTM otheriwse. @cloud-fan, I will get this in. Please let me know if you have other concerns. |

|

Test build #104686 has finished for PR 23670 at commit

|

|

Test build #104687 has finished for PR 23670 at commit

|

|

Retest this please. |

|

Test build #104787 has finished for PR 23670 at commit

|

|

ping @caneGuy |

|

Sorry for late reply, i will fix today @HyukjinKwon |

|

@HyukjinKwon refine!Thanks too much |

|

Test build #105309 has finished for PR 23670 at commit

|

|

@HyukjinKwon ping thanks! |

| "cause longer waiting for other broadcasting. Also, increasing parallelism may " + | ||

| "cause memory problem.") | ||

| .intConf | ||

| .checkValue(thres => thres > 0 && thres <= 128, "The threshold should be positive.") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There is a maximum limit (128) for this config. The error message doesn't reflect that. Once an invalid value like 200 is set, it can't figure out what goes wrong with it, if not reading this code.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yea let's improve this one before getting this in

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done @HyukjinKwon @viirya Thanks!

| .intConf | ||

| .createWithDefault(1000) | ||

|

|

||

| val BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD = |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD -> BROADCAST_EXCHANGE_MAX_THREAD_THRESHOLD

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

oh sorry for this error,i have fixed that

| private[execution] val executionContext = ExecutionContext.fromExecutorService( | ||

| ThreadUtils.newDaemonCachedThreadPool("broadcast-exchange", 128)) | ||

| ThreadUtils.newDaemonCachedThreadPool("broadcast-exchange", | ||

| SQLConf.get.getConf(StaticSQLConf.BROADCAST_EXCHANGE_MAX_THREAD_THREASHOLD))) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ditto

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ping @caneGuy seems like the last nit

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done @HyukjinKwon @kiszk thanks!

|

Test build #105337 has finished for PR 23670 at commit

|

|

Merged to master. Related tests and build passed |

| object BroadcastExchangeExec { | ||

| private[execution] val executionContext = ExecutionContext.fromExecutorService( | ||

| ThreadUtils.newDaemonCachedThreadPool("broadcast-exchange", 128)) | ||

| ThreadUtils.newDaemonCachedThreadPool("broadcast-exchange", |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: the previous indentation was correct.

|

Test build #105351 has finished for PR 23670 at commit

|

What changes were proposed in this pull request?

Currently,thread number of broadcast-exchange thread pool is fixed and keepAliveSeconds is also fixed as 60s.

But some times, if the Thead object do not GC quickly it may caused server(driver) OOM. In such case,we need to make this thread pool configurable.

A case has described in https://issues.apache.org/jira/browse/SPARK-26601

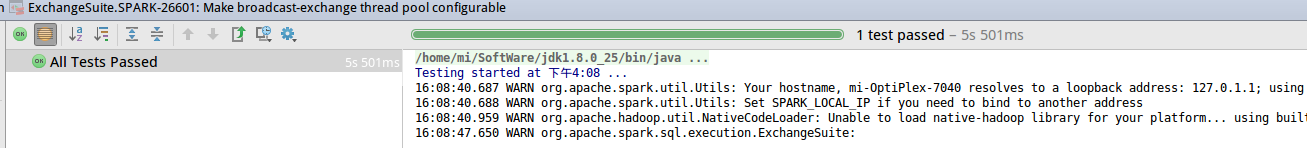

How was this patch tested?

UT