feat(project): Initialize UR16e pick and place project #6258

Proposed change(s)

Description

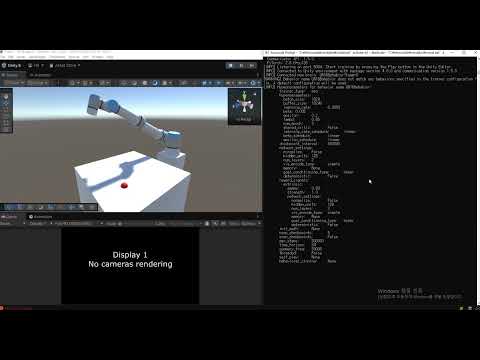

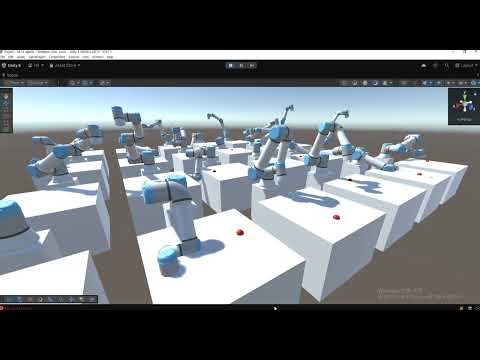

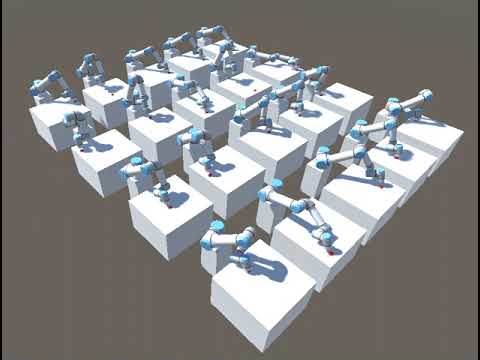

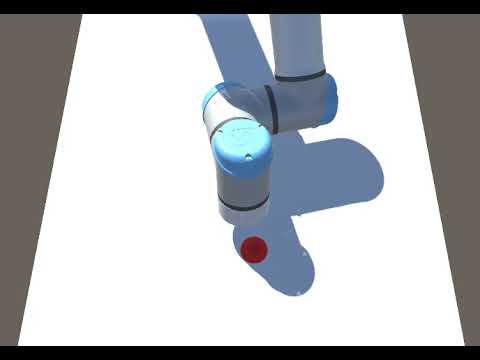

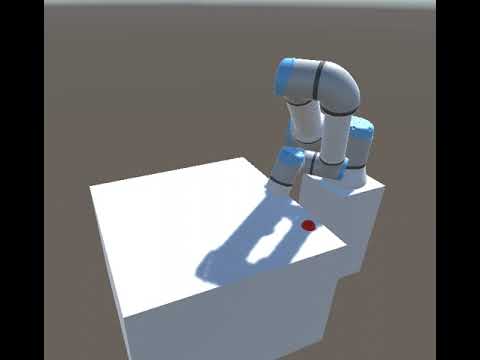

This PR establishes the initial framework for the UR16e Pick and Place project within the ML-Agents repository. It introduces the core components required to train a UR16e robotic arm for a pick-and-place task using reinforcement learning.

Key Changes

`UR16Agent.cs`: A new agent script has been added to manage the robot's learning process. It defines the state observations, action space, and reward function tailored for the pick-and-place task.

`UR16 agents.unity`: A dedicated Unity scene is included, featuring the UR16e robot, the target object, and the necessary ML-Agents components for training and inference.

`README.md`, `README.ko.md`: Documentation has been added in both English and Korean. The READMEs explain the project's purpose and structure and provide instructions on how to get started and run the simulation.

Purpose

The goal of this PR is to formally initialize the project and provide a solid foundation for future development and experimentation with the UR16e robot. By adding the core logic and documentation upfront, it makes the project accessible and understandable for other contributors.

How to Test

compatible).

the new README files.

Videos

Useful links (Github issues, JIRA tickets, ML-Agents forum threads etc.)

Types of change(s)

Checklist

Other comments