
Add token completion #324


It looks reasonable to me. But I also think this feature is implemented in many editors via word-based completion, though I'm not sure about VS Code.


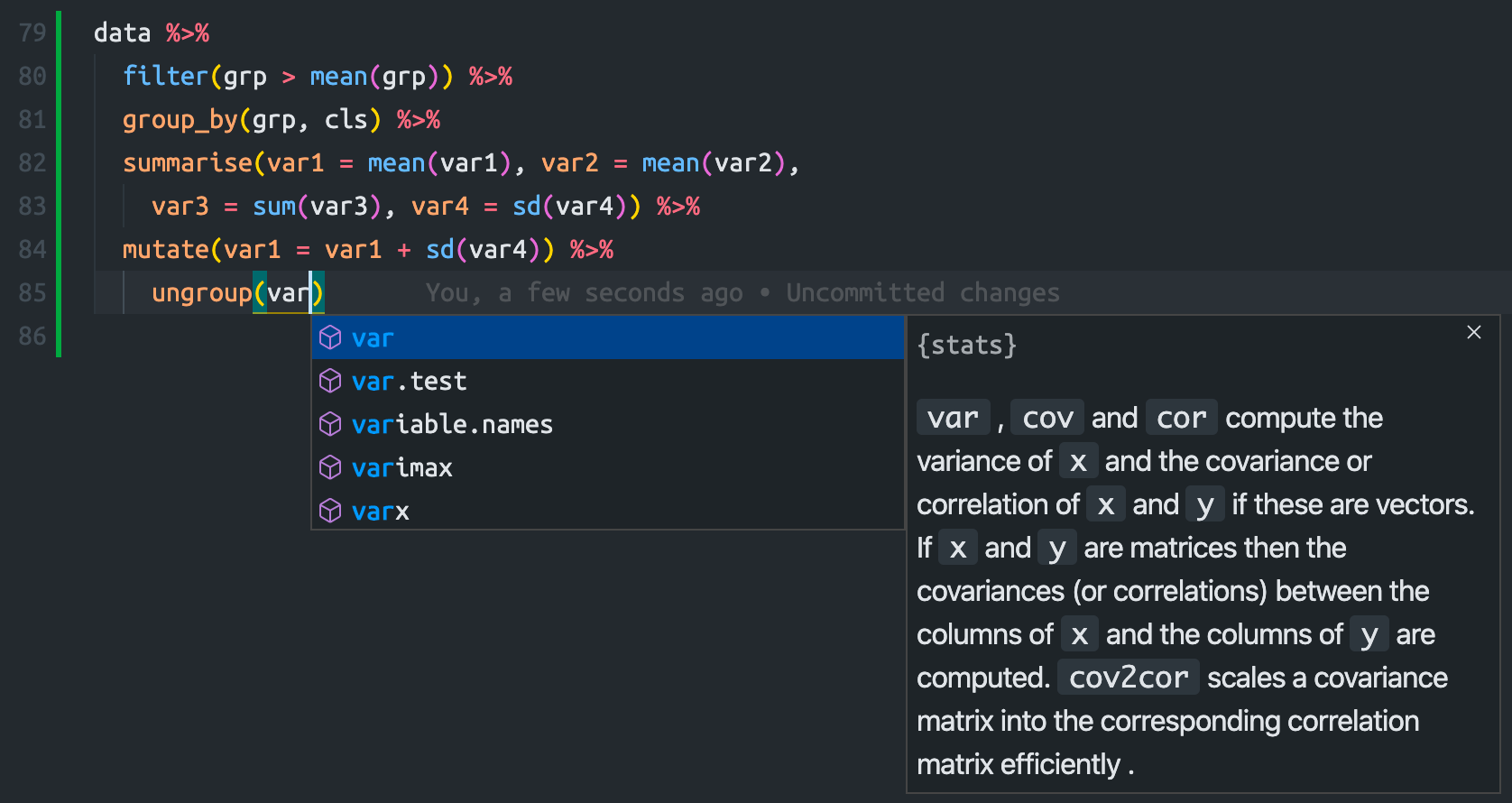

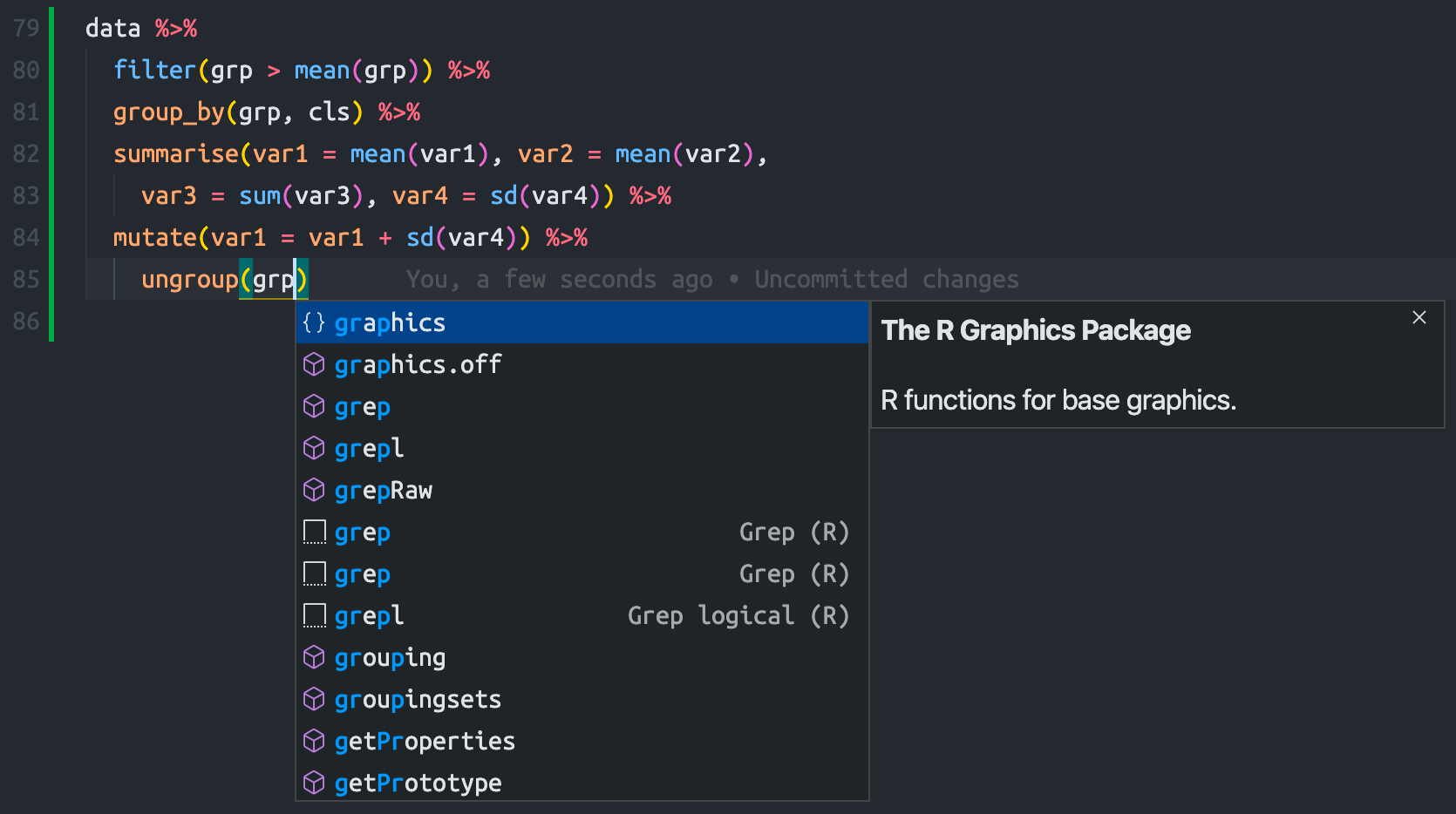

Yes, word completion is provided by many editors. In my experience with VS Code, word completion is only triggered when no other completion is available for a token, and the editor then falls back to suggesting words from the document. As a result, it is noticeably laggier than real completions, seems to match mostly relatively long, unique words, and can behave quite unpredictably. For example, when editing the following code:

```r
data %>%
  filter(grp > mean(grp)) %>%
  group_by(grp, cls) %>%
  summarise(var1 = mean(var1), var2 = mean(var2),
            var3 = sum(var3), var4 = sd(var4)) %>%
  mutate(var1 = var1 + sd(var4)) %>%
  ungroup()
```

the editor offers no word completion for any of its symbols. In R, a short symbol will probably match some valid completions, since the default packages (e.g. base, stats) export so many functions, and in that case word completion is not triggered at all.


I've been trying this for several days in my work, and the completion works well for me as a good supplement in cases where static analysis does not yield useful results.


Thank you for testing it. It seems to break some of the tests, though.


The Windows tests always seem to get stuck at …

It is hard to provide accurate completion based on static code analysis in the following cases:

This PR partially addresses these cases by offering completions drawn from the symbols that appear in the current document, so that these symbols are treated as text that is likely to be reused elsewhere in the document, as demonstrated in the screenshots.
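A minimal sketch of the idea in R, assuming a simple regular expression is good enough to find identifier-like tokens. It does not skip strings or comments, and it is not necessarily how this PR extracts tokens; `token_completions()` and its `exclude` argument are hypothetical names used only for illustration.

```r
# Hypothetical sketch: collect identifier-like tokens from a document and
# return those that start with the prefix currently being typed.
token_completions <- function(text, prefix, exclude = character()) {
  # Simplified R identifier pattern (assumption); strings and comments
  # are not skipped.
  pattern <- "[.A-Za-z][.A-Za-z0-9_]*"
  tokens <- unique(unlist(regmatches(text, gregexpr(pattern, text))))
  # Keep tokens matching the prefix, then drop the word being typed itself
  # and anything that normal completion already offers.
  matches <- tokens[startsWith(tokens, prefix)]
  setdiff(matches, c(prefix, exclude))
}

doc <- c(
  "data %>%",
  "  group_by(grp, cls) %>%",
  "  summarise(var1 = mean(var1), var2 = mean(var2))"
)
token_completions(doc, "va")
# "var1" "var2"
```

Symbols such as `var1` and `var2` come from no package, so static analysis has nothing to offer for them, yet they can still be completed because they already appear in the document.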

Since token completion items have the lowest ranking, they do not interfere with normal completions, but they still help when code analysis provides nothing.
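One way to give token items the lowest ranking is through the LSP `sortText` field, which clients use instead of the label when comparing items. The sketch below assumes that approach; it is not confirmed to be how this PR ranks items, and `make_items()` plus the `"0-"`/`"9-"` prefixes are hypothetical. Real clients such as VS Code also apply their own fuzzy-match scoring against the typed word.

```r
# Hypothetical sketch: assign sortText values so that token-based items
# always sort after items produced by normal code analysis.
# LSP CompletionItemKind values used here: 1 = Text, 3 = Function.
make_items <- function(normal, tokens) {
  normal_items <- lapply(normal, function(label) {
    list(label = label, kind = 3L, sortText = paste0("0-", label))
  })
  token_items <- lapply(tokens, function(label) {
    list(label = label, kind = 1L, sortText = paste0("9-", label))
  })
  items <- c(normal_items, token_items)
  # Mimic a client ordering items by sortText (C-locale comparison).
  sort_keys <- vapply(items, function(item) item$sortText, character(1))
  items[order(sort_keys, method = "radix")]
}

items <- make_items(normal = c("mean", "median"), tokens = c("var1", "avg_x"))
vapply(items, function(item) item$label, character(1))
# "mean" "median" "avg_x" "var1"
```

Although `avg_x` sorts before `mean` alphabetically, the prefix on its `sortText` keeps it below every normal completion.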