

docs: improve llama.cpp install instructions. #720



Nice, thank you!

cc: @gary149, can I get your blessing to merge this PR too, please? 🙏


lgtm with my suggestion, wdyt?

Co-authored-by: Julien Chaumond <[email protected]>

Sounds good to me. I will wait for @pcuenca to confirm that it looks good to him too (since it deviates slightly from his original idea) before merging.

I don't know why the E2E test fails; I will wait for @coyotte508's guidance.


Nice! The tests pass after re-running.

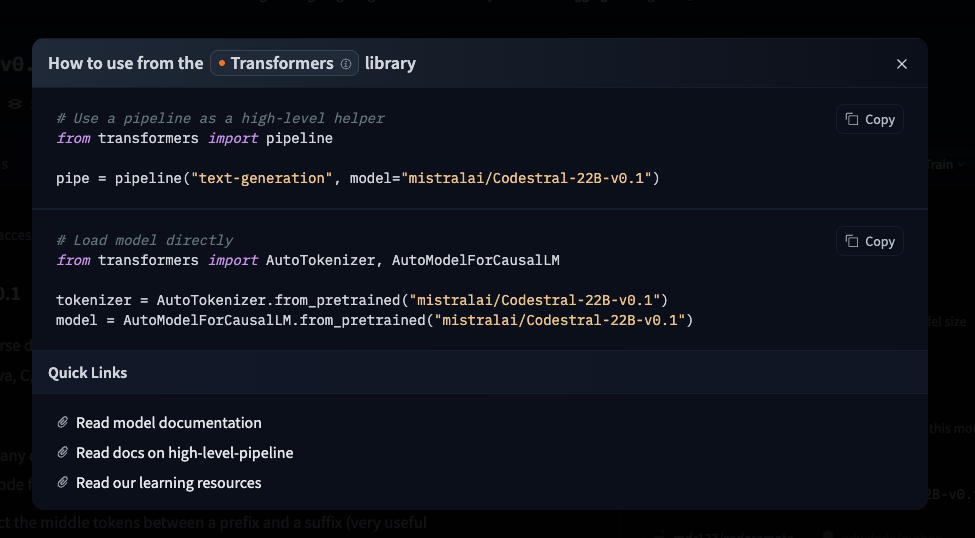

Follow-up to #720: just as we show two snippets for transformers (pipeline and AutoModel; example [here](https://huggingface.co/mistralai/Codestral-22B-v0.1?library=transformers)), we should clearly show the two options for llama.cpp as well. The two options are installing from brew and installing from source.

Co-authored-by: Victor Muštar <[email protected]>
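A minimal TypeScript sketch of the two llama.cpp options described above, assuming each option is rendered as a title, a setup command, and a run command. The `LlamaCppSnippet` type, the `llamaCppSnippets` function, the example repo and file names, the prompt, and the from-source build steps are illustrative assumptions, not the project's actual code; `brew install llama.cpp`, the `llama-cli` binary, and the `--hf-repo`/`--hf-file` flags come from Homebrew and llama.cpp themselves.

```typescript
// Hypothetical shape of one install/run option shown on a model page.
interface LlamaCppSnippet {
  title: string;   // e.g. "Install from brew"
  setup: string;   // command(s) that install or build llama.cpp
  command: string; // command that runs the model
}

// Sketch: build the two llama.cpp options (brew vs. from source) for one GGUF file.
// Name and object shape are illustrative only, not the library's real API.
function llamaCppSnippets(repoId: string, ggufFile: string): LlamaCppSnippet[] {
  // --hf-repo / --hf-file ask llama.cpp to fetch the file from the Hub.
  // Lines are joined with shell line continuations (" \" + newline).
  const run = (binary: string): string =>
    [
      binary,
      `--hf-repo ${repoId}`,
      `--hf-file ${ggufFile}`,
      `-p "You are a helpful assistant"`, // hypothetical prompt
    ].join(" \\\n  ");

  return [
    {
      title: "Install from brew",
      setup: "brew install llama.cpp",
      command: run("llama-cli"),
    },
    {
      title: "Build from source",
      // Assumed build steps; the llama.cpp README has the current ones.
      setup: [
        "git clone https://github.com/ggerganov/llama.cpp",
        "cd llama.cpp",
        "LLAMA_CURL=1 make llama-cli",
      ].join(" && "),
      command: run("./llama-cli"),
    },
  ];
}

// Example: print both options for a hypothetical repo and file.
for (const s of llamaCppSnippets("example-org/Example-GGUF", "example.Q4_K_M.gguf")) {
  console.log(`## ${s.title}\n${s.setup}\n${s.command}\n`);
}
```

Keeping the run command identical apart from the binary path means the two options differ only in how llama.cpp gets installed.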

Does two things:

- `-m` to `--hf-file`: this makes sure that the models are cached