-

Notifications

You must be signed in to change notification settings - Fork 2k

Cleanup Enums #1201

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Cleanup Enums #1201

Conversation

|

A couple thoughts: First, do you have any performance metrics for this change? This replaces a local const with a dictionary lookup for Second, could you explain the impact of this change on the use of the library? Aside from some extra verbosity cost of replacing |

|

I've also heard reports that |

I look at different parts of

And if I see inconsistency I can’t understand if it it intentional or for historical reasons. In this case, I noticed recently added directiveLocation.js and was wondering why other enums are defined differently. You are absolutely right that this PR doesn't change anything for library consumers but I think such changes improve quality of "reference implementation".

The only thing I could find is reports that applying

Not initially 😞

I profiled it both with #1167 and node profiler and haven’t noticed any changes.

I haven’t measured any difference between using const int or a string length. But when I moved So it looks like V8 inlined both build-in for So my speculation is that |

Enum values are best left as representative opaque values, so we should avoid `value.length`. Minor variable name changes to preserve existing formatting

|

Thanks for the analysis, @IvanGoncharov - this looks great |

Agree 👍 |

I converted

Kindinto an object and createdKindEnumandTokenKindEnumtypes.To do this I wrapped enums into

Object.freezeas required by Flow.It's safe to use

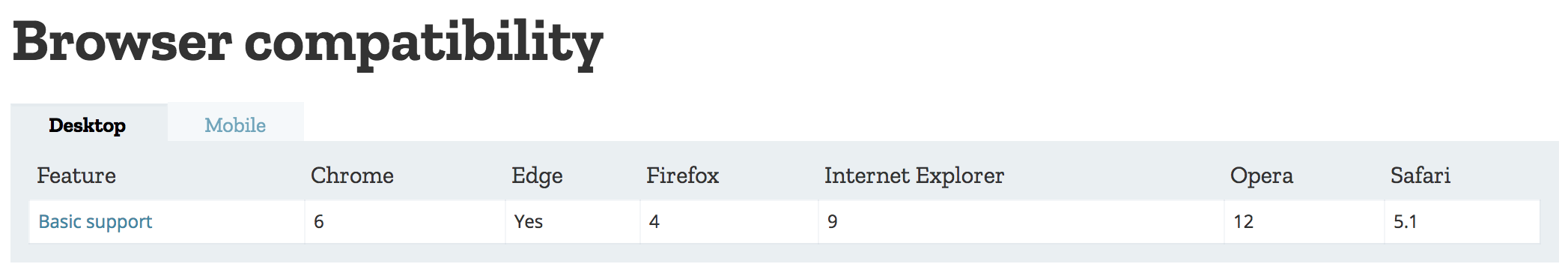

Object.freezesince it supported by all browsers supported by this lib:If for some reason

Object.freezecan't be used in this lib I can wrap it into code comment as suggested here: facebook/flow#5465 (comment)