
Closed

dotnet/coreclr

#24099

Description

The regression is actually in CoreFX, but I'm opening the issue in aspnet until I have more information.

Updated

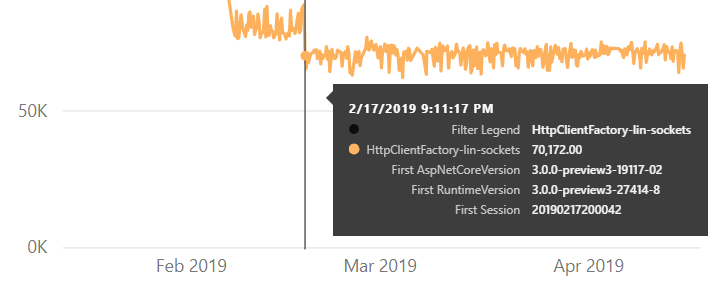

3.0.0-preview-27408-1 -> 3.0.0-preview-27409-1

Microsoft.NetCore.App / Core FX

dotnet/corefx@c2bbb6a...e1c0e1d

Microsoft.NetCore.App / Core CLR

dotnet/coreclr@a51af08...4e5df11

Command lines:

```powershell
dotnet run -- --server http://10.10.10.2:5001 --client http://10.10.10.4:5002 `
  -j ..\Benchmarks\benchmarks.httpclient.json -n HttpClientFactory --sdk Latest --self-contained --aspnetcoreversion 3.0.0-preview3-19116-01 --runtimeversion 3.0.0-preview-27408-1

dotnet run -- --server http://10.10.10.2:5001 --client http://10.10.10.4:5002 `
  -j ..\Benchmarks\benchmarks.httpclient.json -n HttpClientFactory --sdk Latest --self-contained --aspnetcoreversion 3.0.0-preview3-19116-01 --runtimeversion 3.0.0-preview-27409-1
```
