diff --git a/.github/workflows/build-and-test-callable.yml b/.github/workflows/build-and-test-callable.yml

new file mode 100644

index 000000000..aac512186

--- /dev/null

+++ b/.github/workflows/build-and-test-callable.yml

@@ -0,0 +1,188 @@

+# build-and-test-callable.yml

+#

+# - Builds PyMFEM in three stages (a single step when `phases` is false):
+#   - MFEM dependencies + MFEM
+#   - SWIG bindings
+#   - PyMFEM
+# - Runs tests with `test/run_examples.py`
+# - On failure, uploads the test outputs as an artifact

+

+name: Build and Test

+

+on:

+ workflow_call:

+ inputs:

+ os:

+ description: 'Operating system'

+ type: string

+ default: 'ubuntu-latest'

+ python-version:

+ description: 'Python version'

+ type: string

+ default: '3.9'

+ mfem-branch:

+ description: 'MFEM branch to checkout'

+ type: string

+ default: 'default' # 'default' uses a specific commit hash defined in setup.py:repos_sha

+ parallel:

+ description: 'Build parallel version'

+ type: boolean

+ default: false

+ cuda:

+ description: 'Build with CUDA'

+ type: boolean

+ default: false

+ cuda-toolkit-version:

+ type: string

+ default: '12.6.0'

+ cuda-driver-version:

+ type: string

+ default: '560.28.03'

+ libceed:

+ description: 'Build with libCEED'

+ type: boolean

+ default: false

+ gslib:

+ description: 'Build with GSlib'

+ type: boolean

+ default: false

+ phases:

+ description: 'When true, run each build step individually (mfem, swig, pymfem)'

+ type: boolean

+ default: true

+

+jobs:

+ build-and-test:

+ runs-on: ${{ inputs.os }}

+

+ # Reference for ${{ x && y || z }} syntax: https://7tonshark.com/posts/github-actions-ternary-operator/

+ name: >-

+ ${{ inputs.os }} |

+ ${{ inputs.mfem-branch }} |

+ ${{ inputs.python-version }} |

+ ${{ inputs.parallel && 'parallel' || 'serial' }}

+ ${{ inputs.cuda && '| cuda' || '' }}${{ inputs.libceed && '| libceed' || '' }}${{ inputs.gslib && '| gslib' || '' }}

+

+ env:

+ CUDA_HOME: '/usr/local/cuda'

+ # These are all passed to setup.py as one concatenated string

+ build-flags: >-

+ ${{ inputs.parallel && '--with-parallel' || '' }}

+ ${{ inputs.cuda && '--with-cuda' || '' }}

+ ${{ inputs.libceed && '--with-libceed' || '' }}

+ ${{ inputs.gslib && '--with-gslib' || '' }}

+ ${{ (!(inputs.mfem-branch == 'default') && format('--mfem-branch=''{0}''', inputs.mfem-branch)) || '' }}

+

+ # -------------------------------------------------------------------------------------------------

+ # Begin workflow

+ # -------------------------------------------------------------------------------------------------

+ steps:

+ - name: Checkout repo

+ uses: actions/checkout@v4

+

+ - name: Set up Python ${{ inputs.python-version }}

+ uses: actions/setup-python@v5

+ with:

+ python-version: ${{ inputs.python-version }}

+

+ # -------------------------------------------------------------------------------------------------

+ # Download/install dependencies

+ # -------------------------------------------------------------------------------------------------

+ - name: Install core dependencies via requirements.txt

+ run: |

+ pip install setuptools

+ pip install -r requirements.txt --verbose

+

+ - name: Install MPI

+ if: inputs.parallel

+ run: |

+ sudo apt-get install openmpi-bin libopenmpi-dev

+ export OMPI_MCA_rmaps_base_oversubscribe=1
+ echo "OMPI_MCA_rmaps_base_oversubscribe=1" >> $GITHUB_ENV

+ # (broken?) sudo apt-get install mpich libmpich-dev

+ pip install mpi4py

+ mpirun -np 2 python -c "from mpi4py import MPI;print(MPI.COMM_WORLD.rank)"

+

+ - name: Purge pip cache

+ run: pip cache purge

+

+ - name: Cache CUDA

+ if: inputs.cuda

+ id: cache-cuda

+ uses: actions/cache@v4

+ with:

+ path: ~/cache

+ key: cuda-installer-${{ inputs.cuda-toolkit-version }}-${{ inputs.cuda-driver-version }}

+

+ - name: Download CUDA

+ if: inputs.cuda && steps.cache-cuda.outputs.cache-hit != 'true'

+ run: |

+ CUDA_URL="https://developer.download.nvidia.com/compute/cuda/${{ inputs.cuda-toolkit-version }}/local_installers/cuda_${{ inputs.cuda-toolkit-version }}_${{ inputs.cuda-driver-version }}_linux.run"

+ curl -o ~/cache/cuda.run --create-dirs $CUDA_URL

+

+ - name: Install CUDA

+ if: inputs.cuda

+ run: |

+ # The --silent flag is necessary to bypass user-input, e.g. accepting the EULA

+ sudo sh ~/cache/cuda.run --silent --toolkit

+ echo "/usr/local/cuda/bin" >> $GITHUB_PATH

+

+ - name: Print dependency information

+ run: |

+ pip list

+ printf "\n\n---------- MPI ----------\n"

+ mpiexec --version || printf "MPI not installed"

+ printf "\n\n---------- CUDA ----------\n"

+ nvcc --version || printf "CUDA not installed"

+

+ # -------------------------------------------------------------------------------------------------

+ # Build MFEM + SWIG Bindings + PyMFEM

+ # -------------------------------------------------------------------------------------------------

+ - name: Build MFEM (step 1)

+ if: inputs.phases

+ run: python setup.py install --ext-only --vv ${{ env.build-flags }}

+

+ - name: Build SWIG wrappers (step 2)

+ if: inputs.phases

+ run: python setup.py install --swig --vv ${{ env.build-flags }}

+

+ - name: Build PyMFEM (step 3)

+ if: inputs.phases

+ run: python setup.py install --skip-ext --skip-swig --vv ${{ env.build-flags }}

+

+ - name: Build all (steps 1-3)

+ if: inputs.phases == false

+ run: python setup.py install --vv ${{ env.build-flags }}

+

+ # -------------------------------------------------------------------------------------------------

+ # Run tests

+ # -------------------------------------------------------------------------------------------------

+ - name: Run tests (serial)

+ if: inputs.parallel == false

+ run: |

+ cd test

+ python run_examples.py -serial -verbose

+

+ - name: Run tests (parallel)

+ if: inputs.parallel

+ run: |

+ cd test

+ python run_examples.py -parallel -verbose -np 2

+

+ # -------------------------------------------------------------------------------------------------

+ # Generate an artifact (output of tests) on failure

+ # -------------------------------------------------------------------------------------------------

+ - name: Generate test results artifact
+ if: failure()
+ id: generate-artifact

+ run: |

+ tar -cvzf sandbox.tar.gz test/sandbox

+ # generate a name for the artifact

+ txt=$(python -c "import datetime;print(datetime.datetime.now().strftime('%H_%M_%S_%f'))")

+ echo name="test_results_"${txt}"_"${{ github.run_id }}".tar.gz" >> $GITHUB_OUTPUT

+

+ - name: Upload Artifact

+ uses: actions/upload-artifact@v4

+ if: failure()

+ with:

+ name: ${{ steps.generate-artifact.outputs.name }}

+ path: sandbox.tar.gz

+ retention-days: 1

diff --git a/.github/workflows/build-and-test-dispatch.yml b/.github/workflows/build-and-test-dispatch.yml

new file mode 100644

index 000000000..6f0ff98db

--- /dev/null

+++ b/.github/workflows/build-and-test-dispatch.yml

@@ -0,0 +1,119 @@

+# build-and-test-dispatch.yml

+#

+# Dispatch workflow for build-and-test-callable.yml

+name: Build and Test (dispatch)

+

+on:

+ workflow_dispatch:

+ inputs:

+ test_options:

+ type: choice

+ required: true

+ description: 'Which set of tests to run ("defaults" runs only the default configuration, "all" runs the full matrix)'

+ default: 'defaults'

+ options:

+ - 'defaults'

+ - 'fast'

+ - 'cuda'

+ - 'libceed'

+ - 'gslib'

+ - 'all'

+ pull_request:

+

+jobs:

+ # -------------------------------------------------------------------------------------------------

+ # Build and test whole matrix of options on linux

+ # -------------------------------------------------------------------------------------------------

+ test-linux-serial:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' }}

+ strategy:

+ fail-fast: false

+ matrix:

+ mfem-branch: [master, default] # 'default' uses a specific commit hash defined in setup.py:repos_sha

+ python-version: ['3.8', '3.9', '3.10', '3.11', '3.12'] # 3.12 is not supported by scipy

+ parallel: [false]

+ name: test-linux | ${{ matrix.mfem-branch }} | ${{ matrix.python-version }} | ${{ matrix.parallel && 'parallel' || 'serial' }}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ os: ubuntu-latest

+ mfem-branch: ${{ matrix.mfem-branch }}

+ python-version: ${{ matrix.python-version }}

+ parallel: ${{ matrix.parallel }}

+

+ test-linux-parallel:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' }}

+ strategy:

+ fail-fast: false

+ matrix:

+ mfem-branch: [master, default] # 'default' uses a specific commit hash defined in setup.py:repos_sha

+ python-version: ['3.9', '3.10', '3.11', '3.12'] # 3.12 is not supported by scipy

+ parallel: [true]

+ name: test-linux | ${{ matrix.mfem-branch }} | ${{ matrix.python-version }} | ${{ matrix.parallel && 'parallel' || 'serial' }}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ os: ubuntu-latest

+ mfem-branch: ${{ matrix.mfem-branch }}

+ python-version: ${{ matrix.python-version }}

+ parallel: ${{ matrix.parallel }}

+

+ # -------------------------------------------------------------------------------------------------

+ # Fast test

+ # -------------------------------------------------------------------------------------------------

+ test-fast:

+ if: ${{ inputs.test_options == 'fast' }}

+ strategy:

+ fail-fast: false

+ matrix:

+ mfem-branch: [master, default] # 'default' uses a specific commit hash defined in setup.py:repos_sha

+ parallel: [false, true]

+ name: test-fast | ${{ matrix.mfem-branch }} | ${{ matrix.parallel && 'parallel' || 'serial' }}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ os: ubuntu-latest

+ mfem-branch: ${{ matrix.mfem-branch }}

+ python-version: '3.9'

+ parallel: ${{ matrix.parallel }}

+

+ # -------------------------------------------------------------------------------------------------

+ # Specific cases (we want these to use defaults, and not expand the dimensions of the matrix)

+ # -------------------------------------------------------------------------------------------------

+ test-macos:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' }}

+ strategy:

+ fail-fast: false

+ matrix:

+ mfem-branch: [master, default]

+ name: test-macos | ${{ matrix.mfem-branch }}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ os: macos-latest

+ mfem-branch: ${{ matrix.mfem-branch }}

+

+ test-cuda:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' || inputs.test_options == 'cuda'}}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ cuda: true

+ name: test-cuda

+

+ test-libceed:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' || inputs.test_options == 'libceed'}}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ libceed: true

+ name: test-libceed

+

+ test-gslib:

+ if: ${{ github.event_name == 'pull_request' && !contains(github.event.pull_request.labels.*.name, 'skip-ci') || inputs.test_options == 'all' || inputs.test_options == 'gslib'}}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ with:

+ gslib: true

+ name: test-gslib

+

+ # -------------------------------------------------------------------------------------------------

+ # Build and test defaults

+ # -------------------------------------------------------------------------------------------------

+ test-defaults:

+ if: ${{ github.event_name == 'workflow_dispatch' && inputs.test_options == 'defaults' }}

+ uses: ./.github/workflows/build-and-test-callable.yml

+ name: test-defaults

diff --git a/.github/workflows/release_binary.yml b/.github/workflows/release_binary.yml

index 0437a2473..df79b5e92 100644

--- a/.github/workflows/release_binary.yml

+++ b/.github/workflows/release_binary.yml

@@ -36,11 +36,11 @@ jobs:

ls -l dist/

#python3 -m twine upload --repository-url https://test.pypi.org/legacy/ --password ${{ secrets.TEST_PYPI_TOKEN }} --username __token__ --verbose dist/*

python3 -m twine upload --password ${{ secrets.PYPI_TOKEN }} --username __token__ --verbose dist/*

- make_binary_3_7_8_9_10:

+ make_binary_3_8_9_10_11:

needs: make_sdist

strategy:

matrix:

- pythonpath: ["cp37-cp37m", "cp38-cp38", "cp39-cp39", "cp310-cp310"]

+ pythonpath: ["cp38-cp38", "cp39-cp39", "cp310-cp310", "cp311-cp311"]

runs-on: ubuntu-latest

container: quay.io/pypa/manylinux2014_x86_64

@@ -59,14 +59,10 @@ jobs:

#

ls -l /opt/python/

export PATH=/opt/python/${{ matrix.pythonpath }}/bin:$PATH

- pip3 install six auditwheel twine

+ pip3 install auditwheel twine

pip3 install urllib3==1.26.6 # use older version to avoid OpenSSL vesion issue

- pip3 install numpy==1.21.6

- pip3 install numba-scipy

- if [ -f requirements.txt ]; then

- pip3 install -r requirements.txt

- fi

+ pip3 install -r requirements.txt

CWD=$PWD

yum install -y zlib-devel

diff --git a/.github/workflows/test_with_MFEM_master.yml b/.github/workflows/test_with_MFEM_master.yml

deleted file mode 100644

index a2c3ca0c0..000000000

--- a/.github/workflows/test_with_MFEM_master.yml

+++ /dev/null

@@ -1,157 +0,0 @@

-name: Test_with_MFEM_master

-

-on:

- pull_request:

- types:

- - labeled

- #push:

- # branches: [ test ]

- #pull_request:

- # branches: [ test ]

- # types: [synchronize]

-

-jobs:

- build:

- if: contains(github.event.pull_request.labels.*.name, 'in-test-with-mfem-master')

- strategy:

- fail-fast: false

- matrix:

- python-version: ["3.7", "3.8", "3.9", "3.10"]

- #python-version: ["3.10"]

- os: [ubuntu-20.04]

- # USE_FLAGS : cuda, parallel, libceed

- env:

- - { USE_FLAGS: "000"}

- - { USE_FLAGS: "100"}

- - { USE_FLAGS: "010"}

- - { USE_FLAGS: "110"}

-

- include:

- - os: macos-latest

- python-version: 3.9

- env: {USE_FLAGS: "000"}

-# - os: [ubuntu-20.04]

-# python-version: 3.9

-# env: {USE_FLAGS: "001"}

-#

- runs-on: ${{ matrix.os }}

- #env: ${{ matrix.env }}

- env:

- USE_FLAGS: ${{ matrix.env.USE_FLAGS }}

- CUDA: "11.5"

-

- steps:

- - uses: actions/checkout@v3

-# with:

-# ref: master_tracking_branch

-

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v4

- with:

- python-version: ${{ matrix.python-version }}

-

- - name: Install dependencies

- run: |

- #python -m pip install --upgrade pip setuptools wheel

- python -c "import setuptools;print(setuptools.__version__)"

-

- PYTHONLIB=${HOME}/sandbox/lib/python${{ matrix.python-version }}/site-packages

- mkdir -p $PYTHONLIB

-

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo "PYTHONPATH:"$PYTHONPATH

-

- pip install six numpy --verbose

- if [ "${{matrix.python-version}}" = "3.10" ] ; then

- pip install numba numba-scipy --verbose

- #pip install scipy numba --verbose

- else

- pip install numba numba-scipy --verbose

- #pip install numba scipy --verbose

- fi

-

- if [ -f requirements.txt ]; then

- pip install -r requirements.txt --prefix=${HOME}/sandbox --verbose

- fi

-

- python -c "import sys;print(sys.path)"

- python -c "import numpy;print(numpy.__file__)"

-

- echo ${HOME}

- which swig # this default is 4.0.1

- pip install swig --prefix=${HOME}/sandbox

- export PATH=/usr/local/cuda-${CUDA}/bin:${HOME}/sandbox/bin:$PATH

- ls ${HOME}/sandbox/bin

- which swig

- swig -version

- ${HOME}/sandbox/bin/swig -version

-

- if [ "${USE_FLAGS:0:1}" = "1" ] ; then

- echo $cuda

- source ./ci_scripts/add_cuda_11_5.sh;

- fi

- if [ "${USE_FLAGS:1:1}" = "1" ] ; then

- sudo apt-get install mpich;

- sudo apt-get install libmpich-dev;

- pip install mpi4py

- #pip install mpi4py --no-cache-dir --prefix=${HOME}/sandbox;

- #python -m pip install git+https://github.com/mpi4py/mpi4py

- #python -c "import mpi4py;print(mpi4py.get_include())";

- fi

- ls -l $PYTHONLIB

-

- - name: Build

- run: |

- export PATH=/usr/local/cuda-${CUDA}/bin:${HOME}/sandbox/bin:$PATH

- PYTHONLIB=${HOME}/sandbox/lib/python${{ matrix.python-version }}/site-packages

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo $PATH

- echo $PYTHONPATH

- echo "SWIG exe"$(which swig)

-

- if [ "${USE_FLAGS}" = "000" ]; then

- python setup.py install --user --mfem-branch='master'

- fi

- if [ "${USE_FLAGS}" = "010" ]; then

- python setup.py install --with-parallel --prefix=${HOME}/sandbox --mfem-branch='master'

- fi

- if [ "${USE_FLAGS}" = "100" ]; then

- ls -l /usr/local/cuda-${CUDA}/include

- python setup.py install --with-cuda --prefix=${HOME}/sandbox --mfem-branch='master'

- fi

- if [ "${USE_FLAGS}" = "110" ]; then

- python setup.py install --with-cuda --with-parallel --mfem-branch='master'

- fi

- if [ "${USE_FLAGS}" = "001" ]; then

- python setup.py install --prefix=${HOME}/sandbox --with-libceed --mfem-branch='master'

- fi

-

- - name: RUN_EXAMPLES

- run: |

- export PATH=/usr/local/cuda-${CUDA}/bin:$PATH

- PYTHONLIB=${HOME}/sandbox/lib/python${{ matrix.python-version }}/site-packages

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo $PATH

- echo $PYTHONPATH

- cd test

- echo $PATH

- echo $PYTHONPATH

- if [ "${USE_FLAGS}" = "000" ]; then

- #python ../examples/ex1.py

- #python ../examples/ex9.py

- python run_examples.py -serial -verbose

- fi

- if [ "${USE_FLAGS}" = "010" ]; then

- which mpicc

- python run_examples.py -parallel -verbose -np 2

- fi

- if [ "${USE_FLAGS}" = "100" ]; then

- python ../examples/ex1.py --pa

- fi

- if [ "${USE_FLAGS}" = "110" ]; then

- mpirun -np 2 python ../examples/ex1.py --pa

- fi

- if [ "${USE_FLAGS}" = "001" ]; then

- python ../examples/ex1.py -d ceed-cpu

- python ../examples/ex9.py -d ceed-cpu

- fi

diff --git a/.github/workflows/test_with_MFEM_release.yml b/.github/workflows/test_with_MFEM_release.yml

deleted file mode 100644

index 9d5e6db1d..000000000

--- a/.github/workflows/test_with_MFEM_release.yml

+++ /dev/null

@@ -1,158 +0,0 @@

-name: Test_with_MFEM_release

-

-on:

- pull_request:

- types:

- - labeled

- #push:

- # branches: [ test ]

- #pull_request:

- # branches: [ test ]

- # types: [synchronize]

-

-jobs:

- build:

- if: contains(github.event.pull_request.labels.*.name, 'in-test-with-mfem-release')

- strategy:

- fail-fast: false

- matrix:

- python-version: ["3.7", "3.8", "3.9"]

- #python-version: ["3.10"]

- #os: [ubuntu-latest]

- os: [ubuntu-20.04]

- # USE_FLAGS : cuda, parallel, libceed

- env:

- - { USE_FLAGS: "000"}

- - { USE_FLAGS: "010"}

-

- include:

- - os: macos-latest

- python-version: 3.9

- env: {USE_FLAGS: "000"}

-

- runs-on: ${{ matrix.os }}

- #env: ${{ matrix.env }}

- env:

- USE_FLAGS: ${{ matrix.env.USE_FLAGS }}

- CUDA: "11.5"

- SANDBOX: ~/sandbox

-

- steps:

- - uses: actions/checkout@v3

-# with:

-# ref: master_tracking_branch

-

- - name: Set up Python ${{ matrix.python-version }}

- uses: actions/setup-python@v4

- with:

- python-version: ${{ matrix.python-version }}

-

- - name: Install dependencies

- run: |

- #python -m pip install --upgrade pip setuptools wheel

- python -c "import setuptools;print(setuptools.__version__)"

-

- PYTHONLIB=~/sandbox/lib/python${{ matrix.python-version }}/site-packages

- mkdir -p $PYTHONLIB

-

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo "PYTHONPATH:"$PYTHONPATH

-

- pip install six --verbose

- if [ "${{matrix.python-version}}" = "3.10" ] ; then

- pip install numba numba-scipy --verbose

- #pip install scipy

- else

- pip install numba numba-scipy --verbose

- fi

-

- if [ -f requirements.txt ]; then

- pip install -r requirements.txt --prefix=~/sandbox --verbose

- fi

-

- python -c "import sys;print(sys.path)"

- python -c "import numpy;print(numpy.__file__)"

-

- which swig # this default is 4.0.1

- pip install swig --prefix=~/sandbox

- export PATH=/usr/local/cuda-${CUDA}/bin:~/sandbox/bin:$PATH

- which swig

- swig -version

-

- if [ "${USE_FLAGS:0:1}" = "1" ] ; then

- echo $cuda

- source ./ci_scripts/add_cuda_11_5.sh;

- fi

- if [ "${USE_FLAGS:1:1}" = "1" ] ; then

- sudo apt-get install mpich;

- sudo apt-get install libmpich-dev;

- pip install mpi4py --prefix=~/sandbox

- #pip install mpi4py --no-cache-dir --prefix=~/sandbox;

- #python -m pip install git+https://github.com/mpi4py/mpi4py

- python -c "import mpi4py;print(mpi4py.get_include())";

- fi

- ls -l $PYTHONLIB

-

- - name: Build

- run: |

- export PATH=/usr/local/cuda-${CUDA}/bin:~/sandbox/bin:$PATH

- PYTHONLIB=~/sandbox/lib/python${{ matrix.python-version }}/site-packages

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo $PATH

- echo $PYTHONPATH

- echo $SANDBOX

- echo "SWIG exe"$(which swig)

-

- if [ "${USE_FLAGS}" = "000" ]; then

- # test workflow to manually run swig

- python setup.py clean --swig

- python setup.py install --ext-only --with-gslib --verbose

- python setup.py install --swig --with-gslib --verbose

- python setup.py install --skip-ext --skip-swig --with-gslib --verbose

- fi

- if [ "${USE_FLAGS}" = "010" ]; then

- python setup.py install --with-gslib --with-parallel --prefix=$SANDBOX

- fi

-

- - name: RUN_EXAMPLES

- run: |

-

- export PATH=/usr/local/cuda-${CUDA}/bin:$PATH

- PYTHONLIB=~/sandbox/lib/python${{ matrix.python-version }}/site-packages

- export PYTHONPATH=$PYTHONLIB:$PYTHONPATH

- echo $PATH

- echo $PYTHONPATH

- echo $SANDBOX

- cd test

- echo $PATH

- echo $PYTHONPATH

- if [ "${USE_FLAGS}" = "000" ]; then

- python run_examples.py -serial -verbose

- fi

- if [ "${USE_FLAGS}" = "010" ]; then

- which mpicc

- python run_examples.py -parallel -verbose -np 2

- fi

-

- - name: Generate Artifact

- if: always()

- run: |

- tar -cvzf sandbox.tar.gz test/sandbox

-

- - name: Generate artifact name

- if: always()

- id: generate-name

- run: |

- txt=$(python -c "import datetime;print(datetime.datetime.now().strftime('%H_%M_%S_%f'))")

- name="test_result_"${txt}"_"${{ github.run_id }}".tar.gz"

- echo $name

- # (deprecated) echo "::set-output name=artifact::${name}"

- echo "artifact=${name}" >> $GITHUB_OUTPUT

-

- - name: Upload Artifact

- uses: actions/upload-artifact@v3

- if: failure()

- with:

- name: ${{ steps.generate-name.outputs.artifact }}

- path: sandbox.tar.gz

- retention-days: 1

diff --git a/.github/workflows/testrelease_binary.yml b/.github/workflows/testrelease_binary.yml

index 15b099053..ae08741eb 100644

--- a/.github/workflows/testrelease_binary.yml

+++ b/.github/workflows/testrelease_binary.yml

@@ -36,11 +36,11 @@ jobs:

ls -l dist/

python3 -m twine upload --repository-url https://test.pypi.org/legacy/ --password ${{ secrets.TEST_PYPI_TOKEN }} --username __token__ --verbose dist/*

#python3 -m twine upload --password ${{ secrets.PYPI_TOKEN }} --username __token__ --verbose dist/*

- make_binary_3_7_8_9_10:

+ make_binary_3_8_9_10_11:

needs: make_sdist

strategy:

matrix:

- pythonpath: ["cp37-cp37m", "cp38-cp38", "cp39-cp39", "cp310-cp310"]

+ pythonpath: ["cp38-cp38", "cp39-cp39", "cp310-cp310", "cp311-cp311"]

runs-on: ubuntu-latest

container: quay.io/pypa/manylinux2014_x86_64

@@ -59,14 +59,11 @@ jobs:

#

ls -l /opt/python/

export PATH=/opt/python/${{ matrix.pythonpath }}/bin:$PATH

- pip3 install six auditwheel twine

- pip3 install numpy==1.21.6

+ pip3 install auditwheel twine

pip3 install urllib3==1.26.6 # use older version to avoid OpenSSL vesion issue

- pip3 install numba-scipy

- if [ -f requirements.txt ]; then

- pip3 install -r requirements.txt

- fi

+

+ pip3 install -r requirements.txt

CWD=$PWD

yum install -y zlib-devel

diff --git a/INSTALL.md b/INSTALL.md

new file mode 100644

index 000000000..dcd61d519

--- /dev/null

+++ b/INSTALL.md

@@ -0,0 +1,178 @@

+# Installation Guide

+

+## Basic install

+

+Most users will be fine using the binary bundled in the default `pip` install:

+

+```shell

+pip install mfem

+```

+The above installation will download and install a *serial* version of `MFEM`.

+

+## Building from source

+When building from source, PyMFEM offers many installation options, including:

+ - Serial and parallel (MPI) wrappers
+ - Using pre-built local dependencies
+ - Installing additional dependencies such as
+   - `hypre`
+   - `gslib`
+   - `libceed`
+   - `metis`

+

+Most PyMFEM options are passed directly to `python setup.py install`. A basic build from source looks like:

+```shell

+git clone git@github.com:mfem/PyMFEM.git

+cd PyMFEM

+python setup.py install --user

+```

+For example, parallel (MPI) support is built with the `--with-parallel` flag:

+```shell

+python setup.py install --with-parallel

+```

+

+Note: this option also builds `metis` and `hypre`.

+

+## Commonly used flags

+

+| Flag | Description |

+|------|-------------|

+| `--with-parallel` | Install both serial and parallel versions of `MFEM` and the wrapper (note: this option turns on building `metis` and `hypre`) |

+| `--mfem-branch=` | Download/install MFEM using a specific reference (`git` `branch`, `hash`, or `tag`) |

+| `--user` | Install into the user's site-packages directory |

+

+To see the full list of options, run:

+

+```shell

+python setup.py install --help

+```

+

+## Advanced options

+

+### Suitesparse

+`--with-suitesparse` : build MFEM with `suitesparse`, which must be installed separately.
+Point to its location with the `--suitesparse-prefix=` flag.

+

+Note: this option turns on building `metis` in serial

+

+### CUDA

+`--with-cuda` : build MFEM with CUDA. A CUDA build of hypre is also supported via
+`--with-cuda-hypre`. Use `--cuda-arch` to specify the CUDA compute capability
+(see the table in https://en.wikipedia.org/wiki/CUDA#Supported_GPUs).

+

+CUDA must be installed separately, and `nvcc` must be on your `PATH` ([Example](https://github.com/mfem/PyMFEM/blob/e1466a6a/.github/workflows/build-and-test-callable.yml#L111-L122)).

+

+(examples)

+```shell

+python setup.py install --with-cuda

+

+python setup.py install --with-cuda --with-cuda-hypre

+

+python setup.py install --with-cuda --with-cuda-hypre --cuda-arch=80  # A100
+
+python setup.py install --with-cuda --with-cuda-hypre --cuda-arch=75  # Turing

+```
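+
+`--cuda-arch` takes the compute capability with the dot removed, as in the examples above
+(compute capability 8.0 gives `80`, 7.5 gives `75`). The helper below is a hypothetical sketch,
+not part of PyMFEM, that performs this conversion; it assumes the capability is written as a
+`"major.minor"` string, as in the table linked above.
+
+```python
+def cuda_arch_flag(compute_capability: str) -> str:
+    """Turn a compute capability such as "8.0" into a --cuda-arch flag (hypothetical helper)."""
+    major, sep, minor = compute_capability.strip().partition(".")
+    if not (sep and major.isdigit() and minor.isdigit()):
+        raise ValueError(f"expected 'major.minor', got {compute_capability!r}")
+    # Drop the dot: 8.0 -> 80, 7.5 -> 75, matching the examples above.
+    return f"--cuda-arch={int(major)}{int(minor)}"
+
+
+print(cuda_arch_flag("8.0"))  # --cuda-arch=80 (A100)
+print(cuda_arch_flag("7.5"))  # --cuda-arch=75 (Turing)
+```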

+

+### gslib

+`--with-gslib` : build MFEM with [GSlib](https://github.com/Nek5000/gslib)

+

+Note: this option downloads and builds GSlib

+

+### libCEED

+`--with-libceed` : build MFEM with [libCEED](https://github.com/CEED/libCEED)

+

+Note: this option downloads and builds libCEED

+

+### Specifying compilers

+| Flag | Description |

+|------|--------|

+| `--CC` | C compiler |
+| `--CXX` | C++ compiler |
+| `--MPICC` | MPI C compiler |
+| `--MPICXX` | MPI C++ compiler |

+

+(example)

+Using the Intel compilers:

+```shell

+python setup.py install --with-parallel --CC=icc --CXX=icpc --MPICC=mpiicc --MPICXX=mpiicpc

+```

+

+### Building MFEM at a specific version
+By default, `setup.py` builds MFEM from a specific commit SHA (usually the latest release).
+To use the latest MFEM from GitHub instead, specify a branch name, tag, or commit SHA with the
+`--mfem-branch` option:
+
+`--mfem-branch=`

+

+(example)

+```shell

+python setup.py install --mfem-branch=master

+```
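+
+When scripting builds, it can help to omit `--mfem-branch` entirely to keep the pinned commit and
+pass it only when a different reference is wanted. The helper below is a hypothetical sketch of
+that logic (it mirrors how the CI workflow treats its `'default'` value); `install_command` is not
+part of PyMFEM.
+
+```python
+import shlex
+
+
+def install_command(mfem_branch=None, parallel=False):
+    """Build a `python setup.py install` command line (hypothetical helper).
+
+    mfem_branch=None keeps the MFEM commit pinned in setup.py; any other value
+    (branch name, tag, or commit SHA) is forwarded via --mfem-branch.
+    """
+    args = ["python", "setup.py", "install"]
+    if parallel:
+        args.append("--with-parallel")
+    if mfem_branch is not None:
+        args.append(f"--mfem-branch={mfem_branch}")
+    return args
+
+
+print(shlex.join(install_command()))
+print(shlex.join(install_command(mfem_branch="master", parallel=True)))
+```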

+

+### Using an externally built MFEM
+These options link the PyMFEM wrapper against an existing MFEM library. Both `--mfem-source`
+and `--mfem-prefix` are required.

+

+| Flag | Description |
+|------|-------------|
+| `--mfem-source` | The location of the MFEM source used to build MFEM |
+| `--mfem-prefix` | The location of the MFEM library; `libmfem.so` must be found in its `lib` subdirectory |
+| `--mfems-prefix` | (optional) Specify the serial MFEM location separately |
+| `--mfemp-prefix` | (optional) Specify the parallel MFEM location separately |
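+
+Before passing `--mfem-prefix`, you can check that the library is where the table above says it
+must be. This is a hypothetical pre-flight sketch (not part of PyMFEM); it looks only for the file
+name `libmfem.so`, so other library file names are not covered.
+
+```python
+from pathlib import Path
+
+
+def check_mfem_prefix(prefix):
+    """Return <prefix>/lib/libmfem.so, raising FileNotFoundError if it is missing."""
+    lib = Path(prefix).expanduser() / "lib" / "libmfem.so"
+    if not lib.is_file():
+        raise FileNotFoundError(f"{lib} not found; check the value of --mfem-prefix")
+    return lib
+
+
+# Example (hypothetical install location):
+# check_mfem_prefix("~/opt/mfem")
+```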

+

+

+### BLAS and LAPACK
+`--with-lapack` : build MFEM with LAPACK
+
+The values of the following flags are passed to the CMake call used to build MFEM:
+- `--blas-libraries=`
+- `--lapack-libraries=`

+

+### Options for development and testing

+| Flag | Description |
+|------|-------------|
+| `--swig` | Run SWIG only |
+| `--skip-swig` | Build without running SWIG |
+| `--skip-ext` | Skip building external libraries |
+| `--ext-only` | Build external libraries and exit |

+

+During development, we often update dependencies (such as MFEM) and edit `*.i` files. A typical workflow is as follows.

+

+

+First, clean everything:

+

+```shell

+python setup.py clean --all

+```

+

+Then, build the external libraries alone:

+```shell

+python setup.py install --with-parallel --ext-only --mfem-branch=master

+```

+

+Next, generate the SWIG wrappers:

+```shell

+python setup.py install --with-parallel --swig --mfem-branch=master

+```

+

+If you are not happy with the generated wrapper (`*.cxx` and `*.py`), edit the `*.i` files and
+repeat the previous step. `--swig` does not clean the existing wrapper, so it only regenerates the
+wrapper for the `*.i` files that were updated. Once you are satisfied, build the wrapper.

+

+When building the wrapper, you can use the `--skip-ext` option to skip rebuilding the external
+libraries. By default, this re-runs SWIG to regenerate the entire wrapper code.

+```shell

+python setup.py install --with-parallel --skip-ext --mfem-branch=master

+```

+

+If the wrapper code is already up to date, you can add the `--skip-swig` option so that the
+wrapper code is compiled without being regenerated.

+```shell

+python setup.py install --with-parallel --skip-ext --skip-swig --mfem-branch=master

+```
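+
+The build phases above can also be scripted. The sketch below is a hypothetical helper (not part
+of PyMFEM) that prints, and with `--run` executes, the same sequence the CI workflow uses after
+cleaning: `--ext-only`, then `--swig`, then `--skip-ext --skip-swig`. The shared flags are example
+values.
+
+```python
+import subprocess
+import sys
+
+# Flags shared by every phase (example values; adjust to your build).
+COMMON_FLAGS = ["--with-parallel", "--mfem-branch=master"]
+
+# Phase-specific flags, in the order described above.
+PHASES = [
+    ["--ext-only"],                 # 1. build external libraries and exit
+    ["--swig"],                     # 2. (re)generate the SWIG wrappers
+    ["--skip-ext", "--skip-swig"],  # 3. compile the wrapper code only
+]
+
+
+def phase_commands(common_flags=COMMON_FLAGS):
+    """Return the `setup.py install` command line for each phase."""
+    return [[sys.executable, "setup.py", "install", *phase, *common_flags]
+            for phase in PHASES]
+
+
+def run_phases(dry_run=True):
+    for cmd in phase_commands():
+        print(" ".join(cmd))
+        if not dry_run:
+            subprocess.run(cmd, check=True)  # stop at the first failing phase
+
+
+if __name__ == "__main__":
+    run_phases(dry_run="--run" not in sys.argv)
+```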

+

+### Other options

+`--unverifiedSSL` :

+ This works around errors related to SSL certificate verification. A typical error message is

+ ``

+

+

diff --git a/README.md b/README.md

index ef631a977..fe8806552 100644

--- a/README.md

+++ b/README.md

@@ -11,9 +11,8 @@ By default, "pip install mfem" downloads and builds the serial version of MFEM a

Additionally, the installer supports building MFEM with specific options together with other external libraries, including MPI version.

## Install

-```

+```shell

pip install mfem # binary install is available only on linux platforms (Py36-310)

-pip install mfem --no-binary mfem # install serial MFEM + wrapper from source

```

@@ -23,13 +22,9 @@ with pip, or download the package manually and run the script. For example, the

and build parallel version of MFEM library (linked with Metis and Hypre)

and installs under /mfem. See also, docs/install.txt

-### Using pip

-```

-$ pip install mfem --install-option="--with-parallel"

-```

### Build from local source file

-```

+```shell

# Download source and build

$ pip download mfem --no-binary mfem (expand tar.gz file and move to the downloaded directory)

or clone this repository

@@ -59,36 +54,42 @@ $ python setup.py install --help

```

## Usage

-Here is an example to solve div(alpha grad(u)) = f in a square and to plot the result

-with matplotlib (modified from ex1.cpp). Use the badge above to open this in Binder.

-```

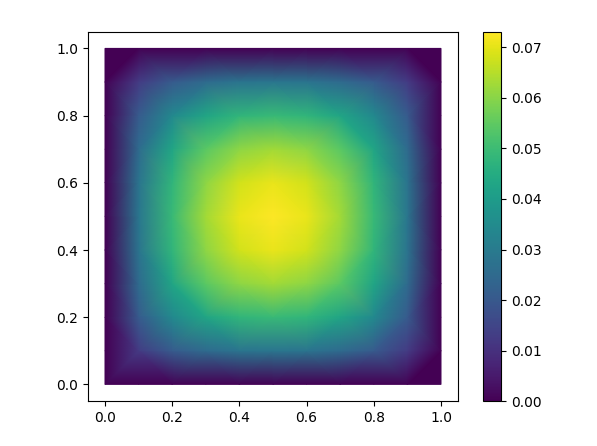

+This example (modified from `ex1.cpp`) solves the Poisson equation,

+$$-\nabla \cdot (\alpha \nabla u) = f$$

+in a square and plots the result using matplotlib.

+Use the badge above to open this in Binder.

+

+```python

import mfem.ser as mfem

-# create sample mesh for square shape

+# Create a square mesh

mesh = mfem.Mesh(10, 10, "TRIANGLE")

-# create finite element function space

+# Define the finite element function space

fec = mfem.H1_FECollection(1, mesh.Dimension()) # H1 order=1

fespace = mfem.FiniteElementSpace(mesh, fec)

-#

+# Define the essential (Dirichlet) boundary DOFs

ess_tdof_list = mfem.intArray()

ess_bdr = mfem.intArray([1]*mesh.bdr_attributes.Size())

fespace.GetEssentialTrueDofs(ess_bdr, ess_tdof_list)

-# constant coefficient (diffusion coefficient and RHS)

+# Define constants for alpha (diffusion coefficient) and f (RHS)

alpha = mfem.ConstantCoefficient(1.0)

rhs = mfem.ConstantCoefficient(1.0)

-# Note:

-# Diffusion coefficient can be variable. To use numba-JIT compiled

-# functio. Use the following, where x is numpy-like array.

-# @mfem.jit.scalar

-# def alpha(x):

-# return x+1.0

-#

+"""

+Note

+----

+A variable diffusion coefficient can be defined with a

+numba-JIT compiled function, where x is a numpy-like array:

-# define Bilinear and Linear operator

+>>> @mfem.jit.scalar

+... def alpha(x):

+...     return x[0] + 1.0

+"""

+

+# Define the bilinear and linear operators

a = mfem.BilinearForm(fespace)

a.AddDomainIntegrator(mfem.DiffusionIntegrator(alpha))

a.Assemble()

@@ -96,38 +97,37 @@ b = mfem.LinearForm(fespace)

b.AddDomainIntegrator(mfem.DomainLFIntegrator(rhs))

b.Assemble()

-# create gridfunction, which is where the solution vector is stored

-x = mfem.GridFunction(fespace);

+# Initialize a GridFunction to store the solution (zero, including the boundary values)

+x = mfem.GridFunction(fespace)

x.Assign(0.0)

-# form linear equation (AX=B)

+# Form the linear system of equations (AX=B)

A = mfem.OperatorPtr()

B = mfem.Vector()

X = mfem.Vector()

-a.FormLinearSystem(ess_tdof_list, x, b, A, X, B);

+a.FormLinearSystem(ess_tdof_list, x, b, A, X, B)

print("Size of linear system: " + str(A.Height()))

-# solve it using PCG solver and store the solution to x

+# Solve the linear system using PCG and store the solution in x

AA = mfem.OperatorHandle2SparseMatrix(A)

M = mfem.GSSmoother(AA)

mfem.PCG(AA, M, B, X, 1, 200, 1e-12, 0.0)

a.RecoverFEMSolution(X, b, x)

-# extract vertices and solution as numpy array

+# Extract vertices and solution as numpy arrays

verts = mesh.GetVertexArray()

sol = x.GetDataArray()

-# plot solution using Matplotlib

-

+# Plot the solution using matplotlib

import matplotlib.pyplot as plt

import matplotlib.tri as tri

triang = tri.Triangulation(verts[:,0], verts[:,1])

-fig1, ax1 = plt.subplots()

-ax1.set_aspect('equal')

-tpc = ax1.tripcolor(triang, sol, shading='gouraud')

-fig1.colorbar(tpc)

+fig, ax = plt.subplots()

+ax.set_aspect('equal')

+tpc = ax.tripcolor(triang, sol, shading='gouraud')

+fig.colorbar(tpc)

plt.show()

```
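To see what `DiffusionIntegrator`, `DomainLFIntegrator` and `FormLinearSystem` do in the example above, here is a minimal 1D analogue in plain NumPy (no PyMFEM required): linear elements on [0, 1] for -(alpha u')' = f with u = 0 at both ends. The function `solve_1d_poisson` is hypothetical; it is only a sketch of the same steps (element-by-element assembly, then removal of the essential DOFs), not how MFEM implements them internally.

```python
import numpy as np

def solve_1d_poisson(n_elems, alpha=1.0, f=1.0):
    """Solve -(alpha u')' = f on [0, 1], u(0) = u(1) = 0, with linear elements."""
    n_nodes = n_elems + 1
    x = np.linspace(0.0, 1.0, n_nodes)
    h = 1.0 / n_elems

    # Assemble the stiffness matrix (alpha grad u . grad v) and load vector (f v)
    K = np.zeros((n_nodes, n_nodes))
    b = np.zeros(n_nodes)
    k_el = (alpha / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    b_el = (f * h / 2.0) * np.ones(2)
    for e in range(n_elems):
        dofs = [e, e + 1]
        K[np.ix_(dofs, dofs)] += k_el
        b[dofs] += b_el

    # Essential (Dirichlet) DOFs are the two end points, where u = 0
    ess = np.array([0, n_nodes - 1])
    free = np.setdiff1d(np.arange(n_nodes), ess)

    # Solve the reduced system for the free DOFs; boundary values stay at 0
    u = np.zeros(n_nodes)
    u[free] = np.linalg.solve(K[np.ix_(free, free)], b[free])
    return x, u

x, u = solve_1d_poisson(10)
exact = x * (1.0 - x) / 2.0
print("max nodal error:", np.max(np.abs(u - exact)))
```

For constant f, the 1D linear-element solution coincides with the exact solution f x(1 - x)/(2 alpha) at the nodes, so the printed error is only round-off, which makes this a convenient sanity check.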

diff --git a/ci_scripts/add_cuda_10_1.sh b/ci_scripts/add_cuda_10_1.sh

deleted file mode 100755

index fbc8da44d..000000000

--- a/ci_scripts/add_cuda_10_1.sh

+++ /dev/null

@@ -1,19 +0,0 @@

-#!/bin/bash

-set -ev

-

-UBUNTU_VERSION=$(lsb_release -sr)

-UBUNTU_VERSION=ubuntu"${UBUNTU_VERSION//.}"

-CUDA=10.1.105-1

-CUDA_SHORT=10.1

-

-INSTALLER=cuda-repo-${UBUNTU_VERSION}_${CUDA}_amd64.deb

-wget http://developer.download.nvidia.com/compute/cuda/repos/${UBUNTU_VERSION}/x86_64/${INSTALLER}

-sudo dpkg -i ${INSTALLER}

-wget https://developer.download.nvidia.com/compute/cuda/repos/${UBUNTU_VERSION}/x86_64/7fa2af80.pub

-sudo apt-key add 7fa2af80.pub

-sudo apt update -qq

-sudo apt install -y cuda-core-${CUDA_SHORT/./-} cuda-cudart-dev-${CUDA_SHORT/./-} cuda-cufft-dev-${CUDA_SHORT/./-}

-sudo apt clean

-

-

-

diff --git a/ci_scripts/add_cuda_11_1.sh b/ci_scripts/add_cuda_11_1.sh

deleted file mode 100755

index c004d6dcd..000000000

--- a/ci_scripts/add_cuda_11_1.sh

+++ /dev/null

@@ -1,11 +0,0 @@

-#!/bin/bash

-set -ev

-

-wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

-sudo mv cuda-ubuntu2004.pin /etc/apt/preferences.d/cuda-repository-pin-600

-wget https://developer.download.nvidia.com/compute/cuda/11.1.1/local_installers/cuda-repo-ubuntu2004-11-1-local_11.1.1-455.32.00-1_amd64.deb

-sudo dpkg -i cuda-repo-ubuntu2004-11-1-local_11.1.1-455.32.00-1_amd64.deb

-sudo apt-key add /var/cuda-repo-ubuntu2004-11-1-local/7fa2af80.pub

-sudo apt-get update

-sudo apt-get -y install cuda

-

diff --git a/ci_scripts/add_cuda_11_5.sh b/ci_scripts/add_cuda_11_5.sh

deleted file mode 100644

index 0c3c23fad..000000000

--- a/ci_scripts/add_cuda_11_5.sh

+++ /dev/null

@@ -1,9 +0,0 @@

-#!/bin/bash

-set -ev

-wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

-sudo mv cuda-ubuntu2004.pin /etc/apt/preferences.d/cuda-repository-pin-600

-wget https://developer.download.nvidia.com/compute/cuda/11.5.0/local_installers/cuda-repo-ubuntu2004-11-5-local_11.5.0-495.29.05-1_amd64.deb

-sudo dpkg -i cuda-repo-ubuntu2004-11-5-local_11.5.0-495.29.05-1_amd64.deb

-sudo apt-key add /var/cuda-repo-ubuntu2004-11-5-local/7fa2af80.pub

-sudo apt-get update

-sudo apt-get -y install cuda

diff --git a/data/compass.geo b/data/compass.geo

new file mode 100644

index 000000000..90572084b

--- /dev/null

+++ b/data/compass.geo

@@ -0,0 +1,118 @@

+SetFactory("OpenCASCADE");

+

+order = 1;

+

+R = 1;

+r = 0.2;

+

+Point(1) = {0,0,0};

+

+Point(2) = {r/Sqrt(2),r/Sqrt(2),0};

+Point(3) = {-r/Sqrt(2),r/Sqrt(2),0};

+Point(4) = {-r/Sqrt(2),-r/Sqrt(2),0};

+Point(5) = {r/Sqrt(2),-r/Sqrt(2),0};

+

+Point(6) = {R,0,0};

+Point(7) = {R/Sqrt(2),R/Sqrt(2),0};

+Point(8) = {0,R,0};

+Point(9) = {-R/Sqrt(2),R/Sqrt(2),0};

+Point(10) = {-R,0,0};

+Point(11) = {-R/Sqrt(2),-R/Sqrt(2),0};

+Point(12) = {0,-R,0};

+Point(13) = {R/Sqrt(2),-R/Sqrt(2),0};

+

+Line(1) = {1,2};

+Line(2) = {1,3};

+Line(3) = {1,4};

+Line(4) = {1,5};

+

+Line(5) = {1,6};

+Line(6) = {1,8};

+Line(7) = {1,10};

+Line(8) = {1,12};

+

+Line(9) = {2,6};

+Line(10) = {2,8};

+Line(11) = {3,8};

+Line(12) = {3,10};

+Line(13) = {4,10};

+Line(14) = {4,12};

+Line(15) = {5,12};

+Line(16) = {5,6};

+

+Line(17) = {6,7};

+Line(18) = {7,8};

+Line(19) = {8,9};

+Line(20) = {9,10};

+Line(21) = {10,11};

+Line(22) = {11,12};

+Line(23) = {12,13};

+Line(24) = {13,6};

+

+Transfinite Curve{1:24} = 2;

+

+Physical Curve("ENE") = {17};

+Physical Curve("NNE") = {18};

+Physical Curve("NNW") = {19};

+Physical Curve("WNW") = {20};

+Physical Curve("WSW") = {21};

+Physical Curve("SSW") = {22};

+Physical Curve("SSE") = {23};

+Physical Curve("ESE") = {24};

+

+Curve Loop(1) = {9,17,18,-10};

+Curve Loop(2) = {11,19,20,-12};

+Curve Loop(3) = {13,21,22,-14};

+Curve Loop(4) = {15,23,24,-16};

+

+Plane Surface(1) = {1};

+Plane Surface(2) = {2};

+Plane Surface(3) = {3};

+Plane Surface(4) = {4};

+

+Transfinite Surface{1} = {2,6,7,8};

+Transfinite Surface{2} = {3,8,9,10};

+Transfinite Surface{3} = {4,10,11,12};

+Transfinite Surface{4} = {5,12,13,6};

+Recombine Surface{1:4};

+

+Physical Surface("Base") = {1,2,3,4};

+

+Curve Loop(5) = {1,10,-6};

+Plane Surface(5) = {5};

+Physical Surface("N Even") = {5};

+

+Curve Loop(6) = {6,-11,-2};

+Plane Surface(6) = {6};

+Physical Surface("N Odd") = {6};

+

+Curve Loop(7) = {2,12,-7};

+Plane Surface(7) = {7};

+Physical Surface("W Even") = {7};

+

+Curve Loop(8) = {7,-13,-3};

+Plane Surface(8) = {8};

+Physical Surface("W Odd") = {8};

+

+Curve Loop(9) = {3,14,-8};

+Plane Surface(9) = {9};

+Physical Surface("S Even") = {9};

+

+Curve Loop(10) = {8,-15,-4};

+Plane Surface(10) = {10};

+Physical Surface("S Odd") = {10};

+

+Curve Loop(11) = {4,16,-5};

+Plane Surface(11) = {11};

+Physical Surface("E Even") = {11};

+

+Curve Loop(12) = {5,-9,-1};

+Plane Surface(12) = {12};

+Physical Surface("E Odd") = {12};

+

+// Generate 2D mesh

+Mesh 2;

+SetOrder order;

+Mesh.MshFileVersion = 2.2;

+

+Save "compass.msh";
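The geometry script places an inner diamond of four points at distance `r` from the center on the diagonals, and eight outer points every 45 degrees on a circle of radius `R`. As a consistency check, the sketch below (the helper `compass_points` is hypothetical) recomputes those coordinates in Point(1)..Point(13) order; they should agree with the vertex list of `data/compass.mesh` to the 8 digits printed there.

```python
import math

def compass_points(R=1.0, r=0.2):
    """Coordinates of Point(1)..Point(13) from compass.geo, as (x, y) tuples."""
    s = 1.0 / math.sqrt(2.0)
    pts = [(0.0, 0.0)]  # Point(1): center
    # Points 2-5: inner diamond corners on the diagonals, at distance r from the center
    pts += [(r * s, r * s), (-r * s, r * s), (-r * s, -r * s), (r * s, -r * s)]
    # Points 6-13: outer circle of radius R, every 45 degrees counter-clockwise from +x
    pts += [(R * math.cos(k * math.pi / 4), R * math.sin(k * math.pi / 4))
            for k in range(8)]
    return pts

for i, (x, y) in enumerate(compass_points(), start=1):
    print(f"Point({i}) = {{{x:.8f}, {y:.8f}, 0}}")
```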

diff --git a/data/compass.mesh b/data/compass.mesh

new file mode 100644

index 000000000..8bff51ce5

--- /dev/null

+++ b/data/compass.mesh

@@ -0,0 +1,96 @@

+MFEM mesh v1.3

+

+#

+# MFEM Geometry Types (see mesh/geom.hpp):

+#

+# POINT = 0

+# SEGMENT = 1

+# TRIANGLE = 2

+# SQUARE = 3

+# TETRAHEDRON = 4

+# CUBE = 5

+# PRISM = 6

+#

+

+dimension

+2

+

+elements

+12

+10 2 7 0 1

+11 2 0 7 2

+12 2 9 0 2

+13 2 0 9 3

+14 2 11 0 3

+15 2 0 11 4

+16 2 5 0 4

+17 2 0 5 1

+9 3 1 5 6 7

+9 3 2 7 8 9

+9 3 3 9 10 11

+9 3 4 11 12 5

+

+attribute_sets

+16

+"Base" 1 9

+"E Even" 1 16

+"E Odd" 1 17

+"East" 2 16 17

+"N Even" 1 10

+"N Odd" 1 11

+"North" 2 10 11

+"Rose" 8 10 11 12 13 14 15 16 17

+"Rose Even" 4 10 12 14 16

+"Rose Odd" 4 11 13 15 17

+"S Even" 1 14

+"S Odd" 1 15

+"South" 2 14 15

+"W Even" 1 12

+"W Odd" 1 13

+"West" 2 12 13

+

+boundary

+8

+1 1 5 6

+2 1 6 7

+3 1 7 8

+4 1 8 9

+5 1 9 10

+6 1 10 11

+7 1 11 12

+8 1 12 5

+

+bdr_attribute_sets

+13

+"Boundary" 8 1 2 3 4 5 6 7 8

+"ENE" 1 1

+"ESE" 1 8

+"Eastern Boundary" 2 1 8

+"NNE" 1 2

+"NNW" 1 3

+"Northern Boundary" 2 2 3

+"SSE" 1 7

+"SSW" 1 6

+"Southern Boundary" 2 6 7

+"WNW" 1 4

+"WSW" 1 5

+"Western Boundary" 2 4 5

+

+vertices

+13

+2

+0 0

+0.14142136 0.14142136

+-0.14142136 0.14142136

+-0.14142136 -0.14142136

+0.14142136 -0.14142136

+1 0

+0.70710678 0.70710678

+0 1

+-0.70710678 0.70710678

+-1 0

+-0.70710678 -0.70710678

+0 -1

+0.70710678 -0.70710678

+

+mfem_mesh_end
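In the `attribute_sets` and `bdr_attribute_sets` sections above, each entry is a quoted name, a count, and that many attribute numbers. A minimal parser sketch (the helpers `read_section` and `parse_named_sets` are hypothetical, not part of PyMFEM) makes it easy to check that the compound sets are unions of the smaller ones, for example that `"North"` is the union of `"N Even"` and `"N Odd"`.

```python
import shlex

def read_section(text, header):
    """Return the entry lines that follow `header` and its count line."""
    lines = [ln.strip() for ln in text.splitlines()]
    start = lines.index(header)
    count = int(lines[start + 1])
    return lines[start + 2:start + 2 + count]

def parse_named_sets(entries):
    """Parse lines like '"N Even" 1 10' into {'N Even': [10]}."""
    sets = {}
    for entry in entries:
        tokens = shlex.split(entry)  # keeps quoted names with spaces together
        name, count = tokens[0], int(tokens[1])
        values = [int(t) for t in tokens[2:]]
        if len(values) != count:
            raise ValueError(f"{name!r}: expected {count} values, got {len(values)}")
        sets[name] = values
    return sets

# Excerpt of the attribute_sets section of compass.mesh
EXCERPT = """
attribute_sets
5
"N Even" 1 10
"N Odd" 1 11
"North" 2 10 11
"Rose Even" 4 10 12 14 16
"Rose Odd" 4 11 13 15 17
"""

sets = parse_named_sets(read_section(EXCERPT, "attribute_sets"))
assert sorted(sets["N Even"] + sets["N Odd"]) == sets["North"]
print(sorted(sets["Rose Even"] + sets["Rose Odd"]))
```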

diff --git a/data/compass.msh b/data/compass.msh

new file mode 100644

index 000000000..f9229490c

--- /dev/null

+++ b/data/compass.msh

@@ -0,0 +1,62 @@

+$MeshFormat

+2.2 0 8

+$EndMeshFormat

+$PhysicalNames

+17

+1 1 "ENE"

+1 2 "NNE"

+1 3 "NNW"

+1 4 "WNW"

+1 5 "WSW"

+1 6 "SSW"

+1 7 "SSE"

+1 8 "ESE"

+2 9 "Base"

+2 10 "N Even"

+2 11 "N Odd"

+2 12 "W Even"

+2 13 "W Odd"

+2 14 "S Even"

+2 15 "S Odd"

+2 16 "E Even"

+2 17 "E Odd"

+$EndPhysicalNames

+$Nodes

+13

+1 0 0 0

+2 0.1414213562373095 0.1414213562373095 0

+3 -0.1414213562373095 0.1414213562373095 0

+4 -0.1414213562373095 -0.1414213562373095 0

+5 0.1414213562373095 -0.1414213562373095 0

+6 1 0 0

+7 0.7071067811865475 0.7071067811865475 0

+8 0 1 0

+9 -0.7071067811865475 0.7071067811865475 0

+10 -1 0 0

+11 -0.7071067811865475 -0.7071067811865475 0

+12 0 -1 0

+13 0.7071067811865475 -0.7071067811865475 0

+$EndNodes

+$Elements

+20

+1 1 2 1 17 6 7

+2 1 2 2 18 7 8

+3 1 2 3 19 8 9

+4 1 2 4 20 9 10

+5 1 2 5 21 10 11

+6 1 2 6 22 11 12

+7 1 2 7 23 12 13

+8 1 2 8 24 13 6

+9 2 2 10 5 1 2 8

+10 2 2 11 6 1 8 3

+11 2 2 12 7 1 3 10

+12 2 2 13 8 1 10 4

+13 2 2 14 9 1 4 12

+14 2 2 15 10 1 12 5

+15 2 2 16 11 1 5 6

+16 2 2 17 12 1 6 2

+17 3 2 9 1 2 6 7 8

+18 3 2 9 2 3 8 9 10

+19 3 2 9 3 4 10 11 12

+20 3 2 9 4 5 12 13 6

+$EndElements
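In the ASCII MSH 2.2 format, each `$Elements` line lists the element number, the element type (1 = 2-node line, 2 = 3-node triangle, 3 = 4-node quadrangle), the number of tags, the tags themselves (physical group first, then the elementary entity), and finally the node numbers. The sketch below, built around a hypothetical `summarize_elements` helper, tallies elements by type and physical group; for this file it should report 8 boundary lines, 8 triangles (groups 10 to 17) and 4 quadrangles in group 9 ("Base"), consistent with the 12 elements and 8 boundary elements in `compass.mesh`.

```python
from collections import Counter

# MSH 2.2 element type code -> (name, number of nodes), for the types in compass.msh
GMSH_TYPES = {1: ("line", 2), 2: ("triangle", 3), 3: ("quadrangle", 4)}

def summarize_elements(msh_text):
    """Count elements per (type name, physical group) in an ASCII MSH 2.2 file."""
    lines = [ln.strip() for ln in msh_text.splitlines()]
    start = lines.index("$Elements")
    count = int(lines[start + 1])
    tally = Counter()
    for line in lines[start + 2:start + 2 + count]:
        fields = [int(v) for v in line.split()]
        elm_type, n_tags = fields[1], fields[2]
        tags = fields[3:3 + n_tags]
        nodes = fields[3 + n_tags:]
        name, n_nodes = GMSH_TYPES[elm_type]
        if len(nodes) != n_nodes:
            raise ValueError(f"element {fields[0]}: expected {n_nodes} nodes")
        physical = tags[0] if tags else None  # first tag is the physical group
        tally[(name, physical)] += 1
    return tally

SAMPLE = """$Elements
3
1 1 2 1 17 6 7
9 2 2 10 5 1 2 8
17 3 2 9 1 2 6 7 8
$EndElements"""

print(summarize_elements(SAMPLE))
```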

diff --git a/docs/changelog.txt b/docs/changelog.txt

index 07638f9f8..db4f8430f 100644

--- a/docs/changelog.txt

+++ b/docs/changelog.txt

@@ -1,5 +1,22 @@

<<< Change Log. >>>

+2024 08

+ * MFEM 4.7 support

+ - AttributeSets are supported. ex39 and ex39p are added to demonstrate how to use them from Python

+ - Hyperbolic conservation element/face form integrators (hyperbolic.hpp) are supported. ex18.py and

+ ex18p.py are updated to conform with the updated C++ examples.

+ - Update SWIG requirement to >= 4.2.1 (required to wrap MFEM routines which use recent C++ features)

+ - Building --with-libceed downloads libCEED 0.12.0, as required by MFEM 4.7

+ - Fixed eltrans::transformback

+ - Improved testing using Github actions

+ - New caller and dispatch YAML configurations allow running tests manually

+ - Tests run automatically when a PR is opened or updated

+ - Added tests using Python 3.11

+ - Refreshed the install instructions (Install.md)

+ - Python 3.7 has reached EOL and is no longer supported. This version supports Python 3.8 and above, and

+ will likely be the last version to support Python 3.8.

+

+

2023 11 - 2024 01

* MFEM 4.6 support

- Default MFEM SHA is updated to a version on 11/26/2023 (slightly newer than

diff --git a/docs/install.txt b/docs/install.txt

deleted file mode 100644

index c3a7224f1..000000000

--- a/docs/install.txt

+++ /dev/null

@@ -1,130 +0,0 @@

-# Install Guide

-

-PyMFEM wrapper provides MPI version and non-MPI version of wrapper.

-

-Default pip install installs serial MFEM + wrapper

-

-$ pip install mfem

-or

-$ pip install mfem --no-binary mfem

-

-For other configuration such as parallel version, one can either use --install-option

-flags with pip or download the package as follows and run setup script, manually.

-

-$ pip download mfem --no-binary mfem

-

-In order to see the full list of options, use

-

-$ python setup.py install --help

-

-In below, for the brevity, examples are mostly shown using "python setup.py install" convention.

-When using PIP, each option needs to be passed using --install-option.

-

-## Parallel MFEM

---with-parallel : build both serial and parallel version of MFEM and wrapper

-

-Note: this option turns on building metis and Hypre

-

-## Suitesparse

---with-suitesparse : build MFEM with suitesparse. SuiteSparse needs to be installed separately.

---suitesparse-prefix=

-

-Note: this option turns on building metis in serial

-

-## CUDA

---with-cuda option build MFEM with CUDA. Hypre cuda build is also supported using

---with-cuda-hypre. --cuda-arch can be used to specify cuda compute capablility.

-(See table in https://en.wikipedia.org/wiki/CUDA#Supported_GPUs)

-

--with-cuda : build MFEM using CUDA on

---cuda-arch= : specify cuda compute capability version

---with-cuda-hypre : build Hypre with cuda

-

-(example)

-$ python setup.py install --with-cuda

-$ python setup.py install --with-cuda --with-cuda-hypre

-$ python setup.py install --with-cuda --with-cuda-hypre --cuda-arch=80 (A100)

-$ python setup.py install --with-cuda --with-cuda-hypre --cuda-arch=75 (Turing)

-

-## gslib

---with-gslib : build MFEM with GSlib

-

-Note: this option builds GSlib

-

-## libceed

---with-libceed : build MFEM with libceed

-Note: this option builds libceed

-

-## Specify compilers

---CC c compiler

---CXX c++ compiler

---MPICC mpic compiler

---MPICXX mpic++ compiler

-

-(example)

-Using Intel compiler

-$ python setup.py install --with-parallel --CC=icc, --CXX=icpc, --MPICC=mpiicc, --MPICXX=mpiicpc

-

-## Building MFEM with specific version

-By default, setup.py build MFEM with specific SHA (which is usually the released latest version).

-In order to use the latest MFEM in Github. One can specify the branch name or SHA using mfem-branch

-option.

-

--mfem-branch =

-

-(example)

-$ python setup.py install --mfem-branch=master

-

-## Using MFEM build externally.

-These options are used to link PyMFEM wrapper with existing MFEM library. We need --mfem-source

-and --mfem-prefix

-

---mfem-source : : the location of MFEM source used to build MFEM

---mfem-prefix : : the location of MFEM library. libmfem.so needs to be found in /lib

---mfems-prefix : : (optional) specify serial MFEM location separately

---mfemp-prefix : : (ooptional)specify parallel MFEM location separately

-

-## Blas and Lapack

---with-lapack : build MFEM with lapack

-

- is used for CMAKE call to buid MFEM

---blas_-libraries=

---lapack-libraries=

-

-## Development and testing options

---swig : run swig only

---skip-swig : build without running swig

---skip-ext : skip building external libraries.

---ext-only : build exteranl libraries and exit.

-

-During the development, often we update depenencies (such as MFEM) and edit *.i file.

-

-First clean everything.

-

-$ python setup.py clean --all

-

-Then, build externals alone

-$ python setup.py install --with-parallel --ext-only --mfem-branch="master"

-

-Then, genrate swig wrappers.

-$ python setup.py install --with-parallel --swig --mfem-branch="master"

-

-If you are not happy with the wrapper (*.cxx and *.py), you edit *.i and redo

-the same. When you are happy, build the wrapper. --swig does not clean the

-existing wrapper. So, it will only update wrapper for updated *.i

-

-When building a wrapper, you can use --skip-ext option. By default, it will re-run

-swig to generate entire wrapper codes.

-$ python setup.py install --with-parallel --skip-ext --mfem-branch="master"

-

-If you are sure, you could use --skip-swig option, so that it compiles the wrapper

-codes without re-generating it.

-$ python setup.py install --with-parallel --skip-ext --skip-swig --mfem-branch="master"

-

-

-## Other options

---unverifiedSSL :

- This addresses error relating SSL certificate. Typical error message is

- ""

-

-

diff --git a/examples/ex18.py b/examples/ex18.py

index 772f8e966..1c089ceb5 100644

--- a/examples/ex18.py

+++ b/examples/ex18.py

@@ -3,8 +3,15 @@

This is a version of Example 18 with a simple adaptive mesh

refinement loop.

See c++ version in the MFEM library for more detail

+

+ Sample runs:

+

+ python ex18.py -p 1 -r 2 -o 1 -s 3

+ python ex18.py -p 1 -r 1 -o 3 -s 4

+ python ex18.py -p 1 -r 0 -o 5 -s 6

+ python ex18.py -p 2 -r 1 -o 1 -s 3 -mf

+ python ex18.py -p 2 -r 0 -o 3 -s 3 -mf

'''

-from ex18_common import FE_Evolution, InitialCondition, RiemannSolver, DomainIntegrator, FaceIntegrator

from mfem.common.arg_parser import ArgParser

import mfem.ser as mfem

from mfem.ser import intArray

@@ -13,32 +20,39 @@

from numpy import sqrt, pi, cos, sin, hypot, arctan2

from scipy.special import erfc

-# Equation constant parameters.(using globals to share them with ex18_common)

-import ex18_common

+from ex18_common import (EulerMesh,

+ EulerInitialCondition,

+ DGHyperbolicConservationLaws)

def run(problem=1,

ref_levels=1,

order=3,

ode_solver_type=4,

- t_final=0.5,

+ t_final=2.0,

dt=-0.01,

cfl=0.3,

visualization=True,

vis_steps=50,

+ preassembleWeakDiv=False,

meshfile=''):

- ex18_common.num_equation = 4

- ex18_common.specific_heat_ratio = 1.4

- ex18_common.gas_constant = 1.0

- ex18_common.problem = problem

- num_equation = ex18_common.num_equation

+ specific_heat_ratio = 1.4

+ gas_constant = 1.0

+ IntOrderOffset = 1

# 2. Read the mesh from the given mesh file. This example requires a 2D

# periodic mesh, such as ../data/periodic-square.mesh.

- meshfile = expanduser(join(dirname(__file__), '..', 'data', meshfile))

- mesh = mfem.Mesh(meshfile, 1, 1)

+

+ mesh = EulerMesh(meshfile, problem)

dim = mesh.Dimension()

+ num_equation = dim + 2

+

+ # Refine the mesh to increase the resolution. In this example we do

+ # 'ref_levels' of uniform refinement, where 'ref_levels' is a

+ # command-line parameter.

+ for lev in range(ref_levels):

+ mesh.UniformRefinement()

# 3. Define the ODE solver used for time integration. Several explicit

# Runge-Kutta methods are available.

@@ -48,7 +62,7 @@ def run(problem=1,

elif ode_solver_type == 2:

ode_solver = mfem.RK2Solver(1.0)

elif ode_solver_type == 3:

- ode_solver = mfem.RK3SSolver()

+ ode_solver = mfem.RK3SSPSolver()

elif ode_solver_type == 4:

ode_solver = mfem.RK4Solver()

elif ode_solver_type == 6:
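Option 3 now uses `mfem.RK3SSPSolver`, a third-order strong-stability-preserving (SSP) Runge-Kutta method. A plain-NumPy sketch of the classic three-stage Shu-Osher form of such a scheme, with a hypothetical `ssp_rk3_step` function, is shown below; it illustrates the scheme and is not meant to reproduce MFEM's implementation line for line.

```python
import numpy as np

def ssp_rk3_step(f, t, u, dt):
    """Advance du/dt = f(t, u) by one step of three-stage SSP-RK3 (Shu-Osher form)."""
    u1 = u + dt * f(t, u)
    u2 = 0.75 * u + 0.25 * (u1 + dt * f(t + dt, u1))
    return u / 3.0 + 2.0 / 3.0 * (u2 + dt * f(t + 0.5 * dt, u2))

def integrate(f, u0, t_final, n_steps):
    """Integrate from t = 0 to t_final with n_steps equal SSP-RK3 steps."""
    u, t = np.asarray(u0, dtype=float), 0.0
    dt = t_final / n_steps
    for _ in range(n_steps):
        u = ssp_rk3_step(f, t, u, dt)
        t += dt
    return u

# Third-order convergence check on du/dt = -u, u(0) = 1
decay = lambda t, u: -u
errors = [abs(integrate(decay, 1.0, 1.0, n) - np.exp(-1.0)) for n in (20, 40)]
print("error ratio when halving dt:", errors[0] / errors[1])  # should be near 2**3
```

Each stage is a convex combination of forward-Euler steps, which is what gives SSP methods their stability-preserving property for the DG discretization.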

@@ -57,13 +71,7 @@ def run(problem=1,

print("Unknown ODE solver type: " + str(ode_solver_type))

exit

- # 4. Refine the mesh to increase the resolution. In this example we do

- # 'ref_levels' of uniform refinement, where 'ref_levels' is a

- # command-line parameter.

- for lev in range(ref_levels):

- mesh.UniformRefinement()

-

- # 5. Define the discontinuous DG finite element space of the given

+ # 4. Define the discontinuous DG finite element space of the given

# polynomial order on the refined mesh.

fec = mfem.DG_FECollection(order, dim)

@@ -78,70 +86,70 @@ def run(problem=1,

assert fes.GetOrdering() == mfem.Ordering.byNODES, "Ordering must be byNODES"

print("Number of unknowns: " + str(vfes.GetVSize()))

- # 6. Define the initial conditions, save the corresponding mesh and grid

- # functions to a file. This can be opened with GLVis with the -gc option.

- # The solution u has components {density, x-momentum, y-momentum, energy}.

- # These are stored contiguously in the BlockVector u_block.

-

- offsets = [k*vfes.GetNDofs() for k in range(num_equation+1)]

- offsets = mfem.intArray(offsets)

- u_block = mfem.BlockVector(offsets)

- mom = mfem.GridFunction(dfes, u_block, offsets[1])

-

- #

- # Define coefficient using VecotrPyCoefficient and PyCoefficient

- # A user needs to define EvalValue method

- #

- u0 = InitialCondition(num_equation)

- sol = mfem.GridFunction(vfes, u_block.GetData())

+ # 5. Define the initial conditions, save the corresponding mesh and grid

+ # functions to files. These can be opened with GLVis using:

+ # "glvis -m euler-mesh.mesh -g euler-1-init.gf" (for x-momentum).

+ u0 = EulerInitialCondition(problem,

+ specific_heat_ratio,

+ gas_constant)

+ sol = mfem.GridFunction(vfes)

sol.ProjectCoefficient(u0)

- mesh.Print("vortex.mesh", 8)

+ # (Python note): mom is a GridFunction pointing to a subset of the vector FES data.

+ # sol is a Vector with num_equation*fes.GetNDofs() entries.

+ # Since sol.GetDataArray() returns a numpy array that shares sol's data, we build a

+ # Vector from a slice of that array and pass it to the GridFunction

+ # constructor.

+

+ mom = mfem.GridFunction(dfes, mfem.Vector(

+ sol.GetDataArray()[fes.GetNDofs():]))

+ mesh.Print("euler-mesh.mesh", 8)

+

for k in range(num_equation):

- uk = mfem.GridFunction(fes, u_block.GetBlock(k).GetData())

- sol_name = "vortex-" + str(k) + "-init.gf"

+ uk = mfem.GridFunction(fes, mfem.Vector(

+ sol.GetDataArray()[k*fes.GetNDofs():]))

+ sol_name = "euler-" + str(k) + "-init.gf"

uk.Save(sol_name, 8)

- # 7. Set up the nonlinear form corresponding to the DG discretization of the

- # flux divergence, and assemble the corresponding mass matrix.

- Aflux = mfem.MixedBilinearForm(dfes, fes)

- Aflux.AddDomainIntegrator(DomainIntegrator(dim))

- Aflux.Assemble()

+ # 6. Set up the nonlinear form with the Euler flux and a Rusanov numerical flux

+ flux = mfem.EulerFlux(dim, specific_heat_ratio)

+ numericalFlux = mfem.RusanovFlux(flux)

+ formIntegrator = mfem.HyperbolicFormIntegrator(

+ numericalFlux, IntOrderOffset)

- A = mfem.NonlinearForm(vfes)

- rsolver = RiemannSolver()

- ii = FaceIntegrator(rsolver, dim)

- A.AddInteriorFaceIntegrator(ii)

-

- # 8. Define the time-dependent evolution operator describing the ODE

- # right-hand side, and perform time-integration (looping over the time

- # iterations, ti, with a time-step dt).

- euler = FE_Evolution(vfes, A, Aflux.SpMat())

+ euler = DGHyperbolicConservationLaws(vfes, formIntegrator,

+ preassembleWeakDivergence=preassembleWeakDiv)

+ # 7. Visualize momentum with its magnitude

if (visualization):

sout = mfem.socketstream("localhost", 19916)

sout.precision(8)

sout << "solution\n" << mesh << mom

+ sout << "window_title 'momentum, t = 0'\n"

+ sout << "view 0 0\n" # view from top

+ sout << "keys jlm\n" # turn off perspective and light, show mesh

sout << "pause\n"

sout.flush()

print("GLVis visualization paused.")

print(" Press space (in the GLVis window) to resume it.")

- # Determine the minimum element size.

- hmin = 0

+ # 8. Time integration

+ hmin = np.inf

if (cfl > 0):

hmin = min([mesh.GetElementSize(i, 1) for i in range(mesh.GetNE())])

+ # Find a safe dt, using a temporary vector. Calling Mult() computes the

+ # maximum char speed at all quadrature points on all faces (and all

+ # elements with -mf).

+ z = mfem.Vector(sol.Size())

+ euler.Mult(sol, z)

+

+ max_char_speed = euler.GetMaxCharSpeed()

+ dt = cfl * hmin / max_char_speed / (2 * order + 1)

+

t = 0.0

euler.SetTime(t)

ode_solver.Init(euler)

- if (cfl > 0):

- # Find a safe dt, using a temporary vector. Calling Mult() computes the

- # maximum char speed at all quadrature points on all faces.

- z = mfem.Vector(A.Width())

- A.Mult(sol, z)

-

- dt = cfl * hmin / ex18_common.max_char_speed / (2*order+1)

# Integrate in time.

done = False
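Step 6 replaces the hand-written `RiemannSolver`/`FaceIntegrator` pair with `mfem.EulerFlux` wrapped in `mfem.RusanovFlux`. The Rusanov (local Lax-Friedrichs) numerical flux averages the physical fluxes of the two face states and adds dissipation scaled by the largest characteristic speed: `F* = 0.5 * (F(uL) + F(uR)) . n - 0.5 * lam_max * (uR - uL)`, with `lam_max = max(|v . n| + c)` over both states. The NumPy sketch below spells this out for 2D Euler with a unit normal; the function names are hypothetical, and MFEM's own implementation may differ in details such as how the normal is scaled.

```python
import numpy as np

GAMMA = 1.4  # specific_heat_ratio in ex18.py

def pressure(u):
    """Ideal-gas pressure for a state u = [rho, rho*vx, rho*vy, E]."""
    rho, mx, my, E = u
    return (GAMMA - 1.0) * (E - 0.5 * (mx**2 + my**2) / rho)

def euler_flux(u):
    """Physical 2D Euler flux as a (4, 2) array: column d is the flux in direction d."""
    rho, mx, my, E = u
    vx, vy, p = mx / rho, my / rho, pressure(u)
    return np.array([[mx, my],
                     [mx * vx + p, mx * vy],
                     [my * vx, my * vy + p],
                     [(E + p) * vx, (E + p) * vy]])

def char_speed(u, n):
    """|v . n| + c for a unit normal n, where c = sqrt(gamma * p / rho)."""
    rho = u[0]
    vn = (u[1] * n[0] + u[2] * n[1]) / rho
    return abs(vn) + np.sqrt(GAMMA * pressure(u) / rho)

def rusanov_flux(u_left, u_right, n):
    """Rusanov numerical flux across a face with unit normal n (pointing left to right)."""
    u_left, u_right, n = (np.asarray(a, dtype=float) for a in (u_left, u_right, n))
    lam = max(char_speed(u_left, n), char_speed(u_right, n))
    central = 0.5 * (euler_flux(u_left) + euler_flux(u_right)) @ n
    return central - 0.5 * lam * (u_right - u_left)

# Gas at rest (rho = 1, p = 1) on both sides: only the pressure term p*n survives
at_rest = [1.0, 0.0, 0.0, 1.0 / (GAMMA - 1.0)]
print(rusanov_flux(at_rest, at_rest, [1.0, 0.0]))
```

The flux is consistent (equal states give the physical flux) and conservative (swapping the states and flipping the normal negates it), which are the two properties a DG face flux needs.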

@@ -152,23 +160,29 @@ def run(problem=1,

t, dt_real = ode_solver.Step(sol, t, dt_real)

if (cfl > 0):

- dt = cfl * hmin / ex18_common.max_char_speed / (2*order+1)

+ max_char_speed = euler.GetMaxCharSpeed()

+ dt = cfl * hmin / max_char_speed / (2*order+1)

ti = ti+1

done = (t >= t_final - 1e-8*dt)

if (done or ti % vis_steps == 0):

print("time step: " + str(ti) + ", time: " + "{:g}".format(t))

if (visualization):

- sout << "solution\n" << mesh << mom << flush

-

- # 9. Save the final solution. This output can be viewed later using GLVis:

- # "glvis -m vortex.mesh -g vortex-1-final.gf".

+ sout << "window_title 'momentum, t = " << "{:g}".format(

+ t) << "'\n"

+ sout << "solution\n" << mesh << mom

+ sout.flush()

+

+ # 9. Save the final solution. This output can be viewed later using GLVis:

+ # "glvis -m euler-mesh-final.mesh -g euler-1-final.gf".

+ mesh.Print("euler-mesh-final.mesh", 8)

for k in range(num_equation):

- uk = mfem.GridFunction(fes, u_block.GetBlock(k).GetData())

- sol_name = "vortex-" + str(k) + "-final.gf"

+ uk = mfem.GridFunction(fes, mfem.Vector(

+ sol.GetDataArray()[k*fes.GetNDofs():]))

+ sol_name = "euler-" + str(k) + "-final.gf"

uk.Save(sol_name, 8)

print(" done")

- # 10. Compute the L2 solution error summed for all components.

+ # 10. Compute the L2 solution error summed for all components.

if (t_final == 2.0):

error = sol.ComputeLpError(2., u0)

print("Solution error: " + "{:g}".format(error))
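Inside the time loop, `dt` is recomputed from the CFL condition `dt = cfl * hmin / max_char_speed / (2*order + 1)`, where `max_char_speed` is retrieved with `euler.GetMaxCharSpeed()` after the operator has been applied. A NumPy sketch of that formula is below; the helper names are hypothetical, and evaluating `|v| + c` at a few sample states stands in for the per-quadrature-point maximum that the operator computes. The `2*order + 1` factor shrinks the step as the DG polynomial order grows.

```python
import numpy as np

GAMMA = 1.4  # specific_heat_ratio in ex18.py

def max_char_speed(states):
    """Largest |v| + c over an (N, 4) array of [rho, rho*vx, rho*vy, E] states."""
    states = np.atleast_2d(np.asarray(states, dtype=float))
    rho = states[:, 0]
    speed = np.linalg.norm(states[:, 1:3], axis=1) / rho
    p = (GAMMA - 1.0) * (states[:, 3] - 0.5 * rho * speed**2)
    return float(np.max(speed + np.sqrt(GAMMA * p / rho)))

def cfl_time_step(cfl, hmin, char_speed, order):
    """Explicit DG time step, following the formula used in ex18.py."""
    return cfl * hmin / char_speed / (2 * order + 1)

states = [[1.0, 0.5, 0.0, 2.625],  # moving gas: rho = 1, vx = 0.5, p = 1
          [1.0, 0.0, 0.0, 2.5]]    # gas at rest: rho = 1, p = 1
dt = cfl_time_step(cfl=0.3, hmin=0.1, char_speed=max_char_speed(states), order=3)
print(f"dt = {dt:.3e}")
```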

@@ -178,7 +192,7 @@ def run(problem=1,

parser = ArgParser(description='Ex18')

parser.add_argument('-m', '--mesh',

- default='periodic-square.mesh',

+ default='',

action='store', type=str,

help='Mesh file to use.')

parser.add_argument('-p', '--problem',

@@ -203,15 +217,39 @@ def run(problem=1,

parser.add_argument('-c', '--cfl_number',

action='store', default=0.3, type=float,

help="CFL number for timestep calculation.")

- parser.add_argument('-vis', '--visualization',

- action='store_true',

- help='Enable GLVis visualization')

+ parser.add_argument('-novis', '--no_visualization',

+ action='store_true', default=False,

+ help='Disable GLVis visualization')

+ parser.add_argument("-ea", "--element-assembly-divergence",

+ action='store_true', default=False,

+ help="Weak divergence assembly level\n" +

+ " ea - Element assembly with interpolated")

+ parser.add_argument("-mf", "--matrix-free-divergence",

+ action='store_true', default=False,

+ help="Weak divergence assembly level\n" +

+ " mf - Nonlinear assembly in matrix-free manner")

parser.add_argument('-vs', '--visualization-steps',

action='store', default=50, type=float,

help="Visualize every n-th timestep.")

args = parser.parse_args()

+ visualization = not args.no_visualization

+

+ if (not args.matrix_free_divergence and

+ not args.element_assembly_divergence):

+ args.element_assembly_divergence = True

+ args.matrix_free_divergence = False

+ preassembleWeakDiv = True

+

+ elif args.element_assembly_divergence:

+ args.matrix_free_divergence = False

+ preassembleWeakDiv = True

+

+ elif args.matrix_free_divergence:

+ args.element_assembly_divergence = False

+ preassembleWeakDiv = False

+

parser.print_options(args)

run(problem=args.problem,

@@ -221,6 +259,7 @@ def run(problem=1,

t_final=args.t_final,

dt=args.time_step,

cfl=args.cfl_number,

- visualization=args.visualization,

+ visualization=visualization,

vis_steps=args.visualization_steps,

+ preassembleWeakDiv=preassembleWeakDiv,

meshfile=args.mesh)
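Because the vector finite element space uses `Ordering.byNODES`, component `k` of the solution (density, x-momentum, y-momentum and energy in 2D) occupies the contiguous block `[k*ndofs, (k+1)*ndofs)` of `sol`, which is why ex18.py can build the per-component and momentum `GridFunction`s from slices of `sol.GetDataArray()`. The NumPy sketch below shows the same layout with a hypothetical `component_views` helper; basic slices and this reshape are views, so writes through them change the underlying array, which is the memory sharing the Python note in step 5 relies on.

```python
import numpy as np

def component_views(flat, num_equation):
    """View a byNODES-ordered vector as a (num_equation, ndofs) array without copying."""
    ndofs, rem = divmod(flat.size, num_equation)
    if rem:
        raise ValueError("vector length is not a multiple of num_equation")
    return flat.reshape(num_equation, ndofs)  # row k == flat[k*ndofs:(k+1)*ndofs]

ndofs, num_equation = 5, 4           # 2D Euler: dim + 2 equations
sol = np.arange(num_equation * ndofs, dtype=float)

comps = component_views(sol, num_equation)
momentum = sol[1 * ndofs:3 * ndofs]  # x- and y-momentum blocks, like `mom` in ex18.py

comps[1, 0] = -1.0                   # writes through to sol and to momentum
print(sol[ndofs], momentum[0])
```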

diff --git a/examples/ex18_common.py b/examples/ex18_common.py

index 9664f718b..5c6fe1ed7 100644

--- a/examples/ex18_common.py

+++ b/examples/ex18_common.py

@@ -3,12 +3,6 @@

This is a python translation of ex18.hpp

- note: following variabls are set from ex18 or ex18p

- problem

- num_equation

- max_char_speed

- specific_heat_ratio;

- gas_constant;

'''

import numpy as np

@@ -19,332 +13,200 @@

else:

import mfem.par as mfem

-num_equation = 0

-specific_heat_ratio = 0

-gas_constant = 0

-problem = 0

-max_char_speed = 0

-

-

-class FE_Evolution(mfem.TimeDependentOperator):

- def __init__(self, vfes, A, A_flux):

- self.dim = vfes.GetFE(0).GetDim()

- self.vfes = vfes

- self.A = A

- self.Aflux = A_flux

- self.Me_inv = mfem.DenseTensor(vfes.GetFE(0).GetDof(),

- vfes.GetFE(0).GetDof(),

- vfes.GetNE())

-

- self.state = mfem.Vector(num_equation)

- self.f = mfem.DenseMatrix(num_equation, self.dim)

- self.flux = mfem.DenseTensor(vfes.GetNDofs(), self.dim, num_equation)

- self.z = mfem.Vector(A.Height())

-

- dof = vfes.GetFE(0).GetDof()

- Me = mfem.DenseMatrix(dof)

- inv = mfem.DenseMatrixInverse(Me)

- mi = mfem.MassIntegrator()

- for i in range(vfes.GetNE()):

- mi.AssembleElementMatrix(vfes.GetFE(

- i), vfes.GetElementTransformation(i), Me)

- inv.Factor()

- inv.GetInverseMatrix(self.Me_inv(i))

- super(FE_Evolution, self).__init__(A.Height())

-

- def GetFlux(self, x, flux):

- state = self.state

- dof = self.flux.SizeI()

- dim = self.flux.SizeJ()

-

- flux_data = []

- for i in range(dof):

- for k in range(num_equation):

- self.state[k] = x[i, k]

- ComputeFlux(state, dim, self.f)

-

- flux_data.append(self.f.GetDataArray().transpose().copy())

- # flux[i].Print()

- # print(self.f.GetDataArray())

- # for d in range(dim):

- # for k in range(num_equation):

- # flux[i, d, k] = self.f[k, d]

-

- mcs = ComputeMaxCharSpeed(state, dim)

- if (mcs > globals()['max_char_speed']):

- globals()['max_char_speed'] = mcs

-

- flux.Assign(np.stack(flux_data))

- #print("max char speed", globals()['max_char_speed'])

+from os.path import expanduser, join, dirname

- def Mult(self, x, y):

- globals()['max_char_speed'] = 0.

- num_equation = globals()['num_equation']

- # 1. Create the vector z with the face terms -.

- self.A.Mult(x, self.z)

-

- # 2. Add the element terms.

- # i. computing the flux approximately as a grid function by interpolating

- # at the solution nodes.

- # ii. multiplying this grid function by a (constant) mixed bilinear form for

- # each of the num_equation, computing (F(u), grad(w)) for each equation.

-

- xmat = mfem.DenseMatrix(

- x.GetData(), self.vfes.GetNDofs(), num_equation)

- self.GetFlux(xmat, self.flux)

-

- for k in range(num_equation):

- fk = mfem.Vector(self.flux[k].GetData(),

- self.dim * self.vfes.GetNDofs())

- o = k * self.vfes.GetNDofs()

- zk = self.z[o: o+self.vfes.GetNDofs()]

- self.Aflux.AddMult(fk, zk)

-

- # 3. Multiply element-wise by the inverse mass matrices.

- zval = mfem.Vector()

- vdofs = mfem.intArray()

- dof = self.vfes.GetFE(0).GetDof()

- zmat = mfem.DenseMatrix()

- ymat = mfem.DenseMatrix(dof, num_equation)

- for i in range(self.vfes.GetNE()):

- # Return the vdofs ordered byNODES

- vdofs = mfem.intArray(self.vfes.GetElementVDofs(i))

- self.z.GetSubVector(vdofs, zval)

- zmat.UseExternalData(zval.GetData(), dof, num_equation)

- mfem.Mult(self.Me_inv[i], zmat, ymat)

- y.SetSubVector(vdofs, ymat.GetData())

-

-

-class DomainIntegrator(mfem.PyBilinearFormIntegrator):

- def __init__(self, dim):

- num_equation = globals()['num_equation']

- self.flux = mfem.DenseMatrix(num_equation, dim)

- self.shape = mfem.Vector()

- self.dshapedr = mfem.DenseMatrix()

- self.dshapedx = mfem.DenseMatrix()

- super(DomainIntegrator, self).__init__()

-

- def AssembleElementMatrix2(self, trial_fe, test_fe, Tr, elmat):

- # Assemble the form (vec(v), grad(w))

-

- # Trial space = vector L2 space (mesh dim)

- # Test space = scalar L2 space

-

- dof_trial = trial_fe.GetDof()

- dof_test = test_fe.GetDof()

- dim = trial_fe.GetDim()

-

- self.shape.SetSize(dof_trial)

- self.dshapedr.SetSize(dof_test, dim)

- self.dshapedx.SetSize(dof_test, dim)

-

- elmat.SetSize(dof_test, dof_trial * dim)

- elmat.Assign(0.0)

-

- maxorder = max(trial_fe.GetOrder(), test_fe.GetOrder())

- intorder = 2 * maxorder

- ir = mfem.IntRules.Get(trial_fe.GetGeomType(), intorder)

-

- for i in range(ir.GetNPoints()):

- ip = ir.IntPoint(i)

-

- # Calculate the shape functions

- trial_fe.CalcShape(ip, self.shape)

- self.shape *= ip.weight

-

- # Compute the physical gradients of the test functions

- Tr.SetIntPoint(ip)

- test_fe.CalcDShape(ip, self.dshapedr)

- mfem.Mult(self.dshapedr, Tr.AdjugateJacobian(), self.dshapedx)

-

- for d in range(dim):

- for j in range(dof_test):

- for k in range(dof_trial):

- elmat[j, k + d * dof_trial] += self.shape[k] * \

- self.dshapedx[j, d]

-

-

-class FaceIntegrator(mfem.PyNonlinearFormIntegrator):

- def __init__(self, rsolver, dim):

- self.rsolver = rsolver

- self.shape1 = mfem.Vector()

- self.shape2 = mfem.Vector()

- self.funval1 = mfem.Vector(num_equation)

- self.funval2 = mfem.Vector(num_equation)

- self.nor = mfem.Vector(dim)

- self.fluxN = mfem.Vector(num_equation)

- self.eip1 = mfem.IntegrationPoint()

- self.eip2 = mfem.IntegrationPoint()

- super(FaceIntegrator, self).__init__()

-

- self.fluxNA = np.atleast_2d(self.fluxN.GetDataArray())

-

- def AssembleFaceVector(self, el1, el2, Tr, elfun, elvect):

- num_equation = globals()['num_equation']

- # Compute the term on the interior faces.

- dof1 = el1.GetDof()

- dof2 = el2.GetDof()

-

- self.shape1.SetSize(dof1)

- self.shape2.SetSize(dof2)

-

- elvect.SetSize((dof1 + dof2) * num_equation)

- elvect.Assign(0.0)

-

- elfun1_mat = mfem.DenseMatrix(elfun.GetData(), dof1, num_equation)

- elfun2_mat = mfem.DenseMatrix(

- elfun[dof1*num_equation:].GetData(), dof2, num_equation)

-

- elvect1_mat = mfem.DenseMatrix(elvect.GetData(), dof1, num_equation)

- elvect2_mat = mfem.DenseMatrix(

- elvect[dof1*num_equation:].GetData(), dof2, num_equation)

-

- # Integration order calculation from DGTraceIntegrator

- if (Tr.Elem2No >= 0):

- intorder = (min(Tr.Elem1.OrderW(), Tr.Elem2.OrderW()) +

- 2*max(el1.GetOrder(), el2.GetOrder()))

+class DGHyperbolicConservationLaws(mfem.TimeDependentOperator):

+ def __init__(self, vfes_, formIntegrator_, preassembleWeakDivergence=True):

+

+ super(DGHyperbolicConservationLaws, self).__init__(

+ vfes_.GetTrueVSize())

+ self.num_equations = formIntegrator_.num_equations

+ self.vfes = vfes_

+ self.dim = vfes_.GetMesh().SpaceDimension()

+ self.formIntegrator = formIntegrator_

+

+ self.z = mfem.Vector(vfes_.GetTrueVSize())

+

+ self.weakdiv = None

+ self.max_char_speed = None

+

+ self.ComputeInvMass()

+

+ if mfem_mode == 'serial':

+ self.nonlinearForm = mfem.NonlinearForm(self.vfes)

+ else:

+ if isinstance(self.vfes, mfem.ParFiniteElementSpace):

+ self.nonlinearForm = mfem.ParNonlinearForm(self.vfes)

+ else:

+ self.nonlinearForm = mfem.NonlinearForm(self.vfes)

+

+ if preassembleWeakDivergence:

+ self.ComputeWeakDivergence()

else:

- intorder = Tr.Elem1.OrderW() + 2*el1.GetOrder()

+ self.nonlinearForm.AddDomainIntegrator(self.formIntegrator)

+

+ self.nonlinearForm.AddInteriorFaceIntegrator(self.formIntegrator)

+ self.nonlinearForm.UseExternalIntegrators()

+

+ def GetMaxCharSpeed(self):

+ return self.max_char_speed

- if (el1.Space() == mfem.FunctionSpace().Pk):

- intorder += 1

+ def ComputeInvMass(self):

+ inv_mass = mfem.InverseIntegrator(mfem.MassIntegrator())

- ir = mfem.IntRules.Get(Tr.GetGeometryType(), int(intorder))

+ self.invmass = [None]*self.vfes.GetNE()

+ for i in range(self.vfes.GetNE()):

+ dof = self.vfes.GetFE(i).GetDof()

+ self.invmass[i] = mfem.DenseMatrix(dof)

+ inv_mass.AssembleElementMatrix(self.vfes.GetFE(i),

+ self.vfes.GetElementTransformation(i),

+ self.invmass[i])
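`ComputeInvMass` stores one dense inverse mass matrix per element instead of one global matrix. This works because the mass matrix of a discontinuous (L2) space is block-diagonal, so its inverse can be applied element by element. The NumPy sketch below assumes 1D linear (P1) elements of varying width and element-contiguous degrees of freedom. The helpers `element_mass_p1` and `apply_blockwise_inverse` are hypothetical illustrations, not PyMFEM API. The sketch checks the blockwise inverse against a global solve.

```python
import numpy as np


def element_mass_p1(h):
    """Exact mass matrix of a 1D linear (P1) element of width h."""
    return h / 6.0 * np.array([[2.0, 1.0], [1.0, 2.0]])


def apply_blockwise_inverse(inv_blocks, r):
    """Apply a block-diagonal inverse mass matrix element by element.

    inv_blocks: list of (dof, dof) inverse element mass matrices.
    r: global vector whose DG dofs are stored element-contiguously
       (an assumption made for this sketch).
    """
    out = np.empty_like(r)
    offset = 0
    for inv in inv_blocks:
        dof = inv.shape[0]
        out[offset:offset + dof] = inv @ r[offset:offset + dof]
        offset += dof
    return out


# Three DG elements of different widths; DG elements share no dofs.
widths = [0.5, 0.25, 0.25]
blocks = [element_mass_p1(h) for h in widths]
inv_blocks = [np.linalg.inv(M) for M in blocks]

# Reference: assemble the full block-diagonal matrix and solve globally.
n = 2 * len(widths)
M_global = np.zeros((n, n))
for e, M in enumerate(blocks):
    M_global[2 * e:2 * e + 2, 2 * e:2 * e + 2] = M

rng = np.random.default_rng(0)
r = rng.standard_normal(n)
y_local = apply_blockwise_inverse(inv_blocks, r)
y_global = np.linalg.solve(M_global, r)
print(np.allclose(y_local, y_global))  # True
```

Each element inverse is computed once at setup. Every operator application then needs only small dense matrix-vector products, with no global linear solve.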

+

+ def ComputeWeakDivergence(self):

+ weak_div = mfem.TransposeIntegrator(mfem.GradientIntegrator())

+

+ weakdiv_bynodes = mfem.DenseMatrix()

- mat1A = elvect1_mat.GetDataArray()

- mat2A = elvect2_mat.GetDataArray()

- shape1A = np.atleast_2d(self.shape1.GetDataArray())

- shape2A = np.atleast_2d(self.shape2.GetDataArray())

+ self.weakdiv = [None]*self.vfes.GetNE()

- for i in range(ir.GetNPoints()):

- ip = ir.IntPoint(i)

- Tr.Loc1.Transform(ip, self.eip1)

- Tr.Loc2.Transform(ip, self.eip2)

+ for i in range(self.vfes.GetNE()):

+ dof = self.vfes.GetFE(i).GetDof()

+ weakdiv_bynodes.SetSize(dof, dof*self.dim)

+ weak_div.AssembleElementMatrix2(self.vfes.GetFE(i),

+ self.vfes.GetFE(i),

+ self.vfes.GetElementTransformation(i),

+ weakdiv_bynodes)

+ self.weakdiv[i] = mfem.DenseMatrix()

+ self.weakdiv[i].SetSize(dof, dof*self.dim)

+

+ # Reorder so that trial space is ByDim.

+ # This makes applying weak divergence to flux value simpler.

+ for j in range(dof):

+ for d in range(self.dim):

+ self.weakdiv[i].SetCol(

+ j*self.dim + d, weakdiv_bynodes.GetColumn(d*dof + j))
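The reordering loop copies column `d*dof + j` of `weakdiv_bynodes` into column `j*dim + d` of `self.weakdiv[i]`. This converts the trial-space columns from ByNodes order (all first components, then all second components, ...) to ByDim order (components interleaved per node). The NumPy sketch below shows the same permutation as a reshape/transpose. A random matrix stands in for the assembled element matrix, and `reorder_loop`/`reorder_vectorized` are hypothetical helpers. It assumes a per-node flux stored as a `dof x dim` array and illustrates why ByDim columns let that array be multiplied after a plain row-major flatten.

```python
import numpy as np


def reorder_loop(w_bynodes, dof, dim):
    """Mirror of the loop above: column d*dof + j -> column j*dim + d."""
    w_bydim = np.empty_like(w_bynodes)
    for j in range(dof):
        for d in range(dim):
            w_bydim[:, j * dim + d] = w_bynodes[:, d * dof + j]
    return w_bydim


def reorder_vectorized(w_bynodes, dof, dim):
    """Same column permutation via reshape/transpose (no Python loops)."""
    rows = w_bynodes.shape[0]
    return (w_bynodes.reshape(rows, dim, dof)
            .transpose(0, 2, 1)
            .reshape(rows, dof * dim))


dof, dim = 4, 2
rng = np.random.default_rng(1)
w_bynodes = rng.standard_normal((dof, dof * dim))

w_bydim = reorder_loop(w_bynodes, dof, dim)
print(np.array_equal(w_bydim, reorder_vectorized(w_bynodes, dof, dim)))  # True

# Assumed layout: flux[j, d] holds component d of the flux at node j.
flux = rng.standard_normal((dof, dim))
# ByDim columns pair with a row-major flatten (index j*dim + d) ...
lhs = w_bydim @ flux.reshape(-1)
# ... while ByNodes columns need a column-major flatten (index d*dof + j).
rhs = w_bynodes @ flux.reshape(-1, order="F")
print(np.allclose(lhs, rhs))  # True
```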

- # Calculate basis functions on both elements at the face

- el1.CalcShape(self.eip1, self.shape1)

- el2.CalcShape(self.eip2, self.shape2)

+ def Mult(self, x, y):

+ # 0. Reset wavespeed computation before operator application.

+ self.formIntegrator.ResetMaxCharSpeed()

+

+ # 1. Apply Nonlinear form to obtain an auxiliary result

+ # z = - <F̂(u_h,n), [[v]]>_e

+ # If weak-divergence is not preassembled, we also have weak-divergence

+ # z = - <F̂(u_h,n), [[v]]>_e + (F(u_h), ∇v)

+ self.nonlinearForm.Mult(x, self.z)

+ if self.weakdiv is not None: # if weak divergence is pre-assembled

+ # Apply weak divergence to F(u_h), and inverse mass to z_loc + weakdiv_loc

+