diff --git a/docs/source/en/api/pipelines/marigold.md b/docs/source/en/api/pipelines/marigold.md

index 93ca39e77b9c..e9ca0df067ba 100644

--- a/docs/source/en/api/pipelines/marigold.md

+++ b/docs/source/en/api/pipelines/marigold.md

@@ -1,4 +1,6 @@

-

-# Marigold Pipelines for Computer Vision Tasks

+# Marigold Computer Vision

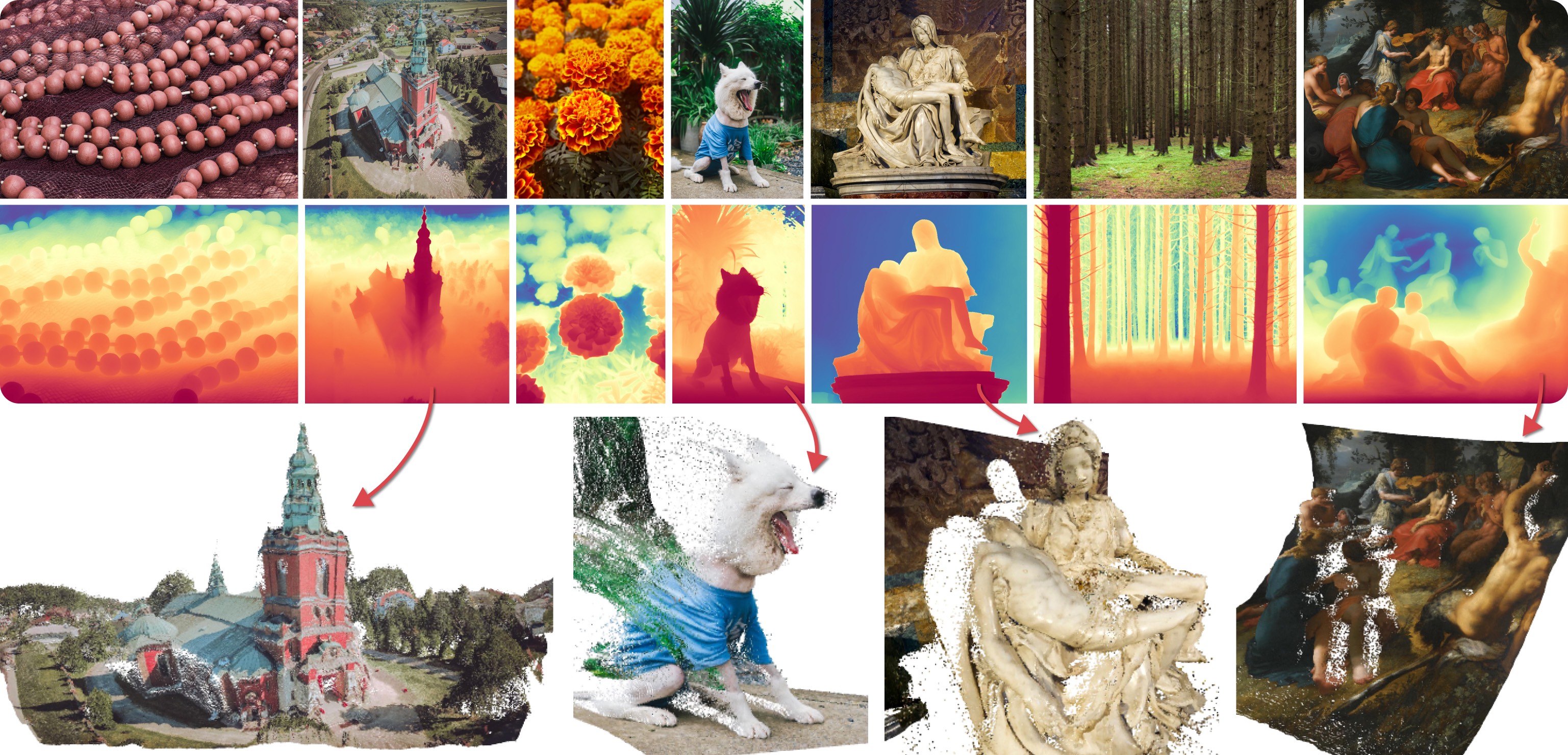

-Marigold was proposed in [Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation](https://huggingface.co/papers/2312.02145), a CVPR 2024 Oral paper by [Bingxin Ke](http://www.kebingxin.com/), [Anton Obukhov](https://www.obukhov.ai/), [Shengyu Huang](https://shengyuh.github.io/), [Nando Metzger](https://nandometzger.github.io/), [Rodrigo Caye Daudt](https://rcdaudt.github.io/), and [Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

-The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks.

-Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above.

-Later,

-- [Tianfu Wang](https://tianfwang.github.io/) trained the first Latent Consistency Model (LCM) of Marigold, which unlocked fast single-step inference;

-- [Kevin Qu](https://www.linkedin.com/in/kevin-qu-b3417621b/?locale=en_US) extended the approach to Surface Normals Estimation;

-- [Anton Obukhov](https://www.obukhov.ai/) contributed the pipelines and documentation into diffusers (enabled and supported by [YiYi Xu](https://yiyixuxu.github.io/) and [Sayak Paul](https://sayak.dev/)).

+Marigold was proposed in

+[Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation](https://huggingface.co/papers/2312.02145),

+a CVPR 2024 Oral paper by

+[Bingxin Ke](http://www.kebingxin.com/),

+[Anton Obukhov](https://www.obukhov.ai/),

+[Shengyu Huang](https://shengyuh.github.io/),

+[Nando Metzger](https://nandometzger.github.io/),

+[Rodrigo Caye Daudt](https://rcdaudt.github.io/), and

+[Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

+The core idea is to **repurpose the generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional

+computer vision tasks**.

+This approach was explored by fine-tuning Stable Diffusion for **Monocular Depth Estimation**, as demonstrated in the

+teaser above.

+

+Marigold was later extended in the follow-up paper,

+[Marigold: Affordable Adaptation of Diffusion-Based Image Generators for Image Analysis](https://huggingface.co/papers/2312.02145),

+authored by

+[Bingxin Ke](http://www.kebingxin.com/),

+[Kevin Qu](https://www.linkedin.com/in/kevin-qu-b3417621b/?locale=en_US),

+[Tianfu Wang](https://tianfwang.github.io/),

+[Nando Metzger](https://nandometzger.github.io/),

+[Shengyu Huang](https://shengyuh.github.io/),

+[Bo Li](https://www.linkedin.com/in/bobboli0202/),

+[Anton Obukhov](https://www.obukhov.ai/), and

+[Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

+This work expanded Marigold to support new modalities such as **Surface Normals** and **Intrinsic Image Decomposition**

+(IID), introduced a training protocol for **Latent Consistency Models** (LCM), and demonstrated **High-Resolution** (HR)

+processing capability.

-The abstract from the paper is:

+

-*Monocular depth estimation is a fundamental computer vision task. Recovering 3D depth from a single image is geometrically ill-posed and requires scene understanding, so it is not surprising that the rise of deep learning has led to a breakthrough. The impressive progress of monocular depth estimators has mirrored the growth in model capacity, from relatively modest CNNs to large Transformer architectures. Still, monocular depth estimators tend to struggle when presented with images with unfamiliar content and layout, since their knowledge of the visual world is restricted by the data seen during training, and challenged by zero-shot generalization to new domains. This motivates us to explore whether the extensive priors captured in recent generative diffusion models can enable better, more generalizable depth estimation. We introduce Marigold, a method for affine-invariant monocular depth estimation that is derived from Stable Diffusion and retains its rich prior knowledge. The estimator can be fine-tuned in a couple of days on a single GPU using only synthetic training data. It delivers state-of-the-art performance across a wide range of datasets, including over 20% performance gains in specific cases. Project page: https://marigoldmonodepth.github.io.*

+The early Marigold models (`v1-0` and earlier) were optimized for best results with at least 10 inference steps.

+LCM models were later developed to enable high-quality inference in just 1 to 4 steps.

+Marigold models `v1-1` and later use the DDIM scheduler to achieve optimal

+results in as few as 1 to 4 steps.

-## Available Pipelines

+

-Each pipeline supports one Computer Vision task, which takes an input RGB image as input and produces a *prediction* of the modality of interest, such as a depth map of the input image.

-Currently, the following tasks are implemented:

+## Available Pipelines

-| Pipeline | Predicted Modalities | Demos |

-|---------------------------------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------|:--------------------------------------------------------------------------------------------------------------------------------------------------:|

-| [MarigoldDepthPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_depth.py) | [Depth](https://en.wikipedia.org/wiki/Depth_map), [Disparity](https://en.wikipedia.org/wiki/Binocular_disparity) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-lcm), [Slow Original Demo (DDIM)](https://huggingface.co/spaces/prs-eth/marigold) |

-| [MarigoldNormalsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_normals.py) | [Surface normals](https://en.wikipedia.org/wiki/Normal_mapping) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-normals-lcm) |

+Each pipeline is tailored for a specific computer vision task, processing an input RGB image and generating a

+corresponding prediction.

+Currently, the following computer vision tasks are implemented:

+| Pipeline | Recommended Model Checkpoints | Spaces (Interactive Apps) | Predicted Modalities |

+|---------------------------------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------:|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [MarigoldDepthPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_depth.py) | [prs-eth/marigold-depth-v1-1](https://huggingface.co/prs-eth/marigold-depth-v1-1) | [Depth Estimation](https://huggingface.co/spaces/prs-eth/marigold) | [Depth](https://en.wikipedia.org/wiki/Depth_map), [Disparity](https://en.wikipedia.org/wiki/Binocular_disparity) |

+| [MarigoldNormalsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_normals.py) | [prs-eth/marigold-normals-v1-1](https://huggingface.co/prs-eth/marigold-normals-v1-1) | [Surface Normals Estimation](https://huggingface.co/spaces/prs-eth/marigold-normals) | [Surface normals](https://en.wikipedia.org/wiki/Normal_mapping) |

+| [MarigoldIntrinsicsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_intrinsics.py) | [prs-eth/marigold-iid-appearance-v1-1](https://huggingface.co/prs-eth/marigold-iid-appearance-v1-1), [prs-eth/marigold-iid-lighting-v1-1](https://huggingface.co/prs-eth/marigold-iid-lighting-v1-1) | [Intrinsic Image Decomposition](https://huggingface.co/spaces/prs-eth/marigold-iid) | [Albedo](https://en.wikipedia.org/wiki/Albedo), [Materials](https://www.n.aiq3d.com/wiki/roughnessmetalnessao-map), [Lighting](https://en.wikipedia.org/wiki/Diffuse_reflection) |

## Available Checkpoints

-The original checkpoints can be found under the [PRS-ETH](https://huggingface.co/prs-eth/) Hugging Face organization.

+All original checkpoints are available under the [PRS-ETH](https://huggingface.co/prs-eth/) organization on Hugging Face.

+They are designed for use with diffusers pipelines and the [original codebase](https://github.com/prs-eth/marigold), which can also be used to train

+new model checkpoints.

+The following is a summary of the recommended checkpoints, all of which produce reliable results with 1 to 4 steps.

+

+| Checkpoint | Modality | Comment |

+|-----------------------------------------------------------------------------------------------------|--------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [prs-eth/marigold-depth-v1-1](https://huggingface.co/prs-eth/marigold-depth-v1-1) | Depth | Affine-invariant depth prediction assigns each pixel a value between 0 (near plane) and 1 (far plane), with both planes determined by the model during inference. |

+| [prs-eth/marigold-normals-v1-1](https://huggingface.co/prs-eth/marigold-normals-v1-1) | Normals | The surface normals predictions are unit-length 3D vectors expressed in the screen-space camera frame, with values in the range from -1 to 1. |

+| [prs-eth/marigold-iid-appearance-v1-1](https://huggingface.co/prs-eth/marigold-iid-appearance-v1-1) | Intrinsics | InteriorVerse decomposition is comprised of Albedo and two BRDF material properties: Roughness and Metallicity. |

+| [prs-eth/marigold-iid-lighting-v1-1](https://huggingface.co/prs-eth/marigold-iid-lighting-v1-1) | Intrinsics | HyperSim decomposition of an image \\(I\\) is comprised of Albedo \\(A\\), Diffuse shading \\(S\\), and Non-diffuse residual \\(R\\): \\(I = A*S+R\\). |

-Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines. Also, to know more about reducing the memory usage of this pipeline, refer to the ["Reduce memory usage"] section [here](../../using-diffusers/svd#reduce-memory-usage).

+Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff

+between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to

+efficiently load the same components into multiple pipelines.

+To learn more about reducing the memory usage of this pipeline, refer to the

+[Reduce memory usage](../../using-diffusers/svd#reduce-memory-usage) section.

-Marigold pipelines were designed and tested only with `DDIMScheduler` and `LCMScheduler`.

-Depending on the scheduler, the number of inference steps required to get reliable predictions varies, and there is no universal value that works best across schedulers.

-Because of that, the default value of `num_inference_steps` in the `__call__` method of the pipeline is set to `None` (see the API reference).

-Unless set explicitly, its value will be taken from the checkpoint configuration `model_index.json`.

-This is done to ensure high-quality predictions when calling the pipeline with just the `image` argument.

+Marigold pipelines were designed and tested with the scheduler embedded in the model checkpoint.

+The optimal number of inference steps varies by scheduler, with no universal value that works best across all cases.

+To accommodate this, the `num_inference_steps` parameter in the pipeline's `__call__` method defaults to `None` (see the

+API reference).

+Unless set explicitly, it inherits the value from the `default_denoising_steps` field in the checkpoint configuration

+file (`model_index.json`).

+This ensures high-quality predictions when invoking the pipeline with only the `image` argument.
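The fallback described above can be illustrated with a minimal sketch. The helper name `resolve_num_inference_steps` and the dictionary standing in for `model_index.json` are hypothetical and are not part of the diffusers API; only the `num_inference_steps` argument and the `default_denoising_steps` field come from the text above.

```python
from typing import Optional


def resolve_num_inference_steps(requested: Optional[int], checkpoint_config: dict) -> int:
    """Hypothetical helper: an explicit argument wins, otherwise use the checkpoint default."""
    if requested is not None:
        if requested < 1:
            raise ValueError("num_inference_steps must be a positive integer")
        return requested
    default = checkpoint_config.get("default_denoising_steps")
    if default is None:
        raise ValueError("checkpoint config has no default_denoising_steps; pass a value explicitly")
    return default


# Illustrative stand-in for the relevant part of model_index.json; the value 4 is made up.
config = {"default_denoising_steps": 4}

print(resolve_num_inference_steps(None, config))  # falls back to the checkpoint default
print(resolve_num_inference_steps(1, config))     # an explicit value takes precedence
```

In practice this means that `pipe(image)` and `pipe(image, num_inference_steps=...)` may run a different number of steps, depending on what the loaded checkpoint declares.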

-See also Marigold [usage examples](marigold_usage).

+See also Marigold [usage examples](../../using-diffusers/marigold_usage).

+

+## Marigold Depth Prediction API

-## MarigoldDepthPipeline

[[autodoc]] MarigoldDepthPipeline

- - all

- __call__

-## MarigoldNormalsPipeline

+[[autodoc]] pipelines.marigold.pipeline_marigold_depth.MarigoldDepthOutput

+

+[[autodoc]] pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_depth

+

+## Marigold Normals Estimation API

[[autodoc]] MarigoldNormalsPipeline

- - all

- __call__

-## MarigoldDepthOutput

-[[autodoc]] pipelines.marigold.pipeline_marigold_depth.MarigoldDepthOutput

+[[autodoc]] pipelines.marigold.pipeline_marigold_normals.MarigoldNormalsOutput

+

+[[autodoc]] pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_normals

+

+## Marigold Intrinsic Image Decomposition API

+

+[[autodoc]] MarigoldIntrinsicsPipeline

+ - __call__

+

+[[autodoc]] pipelines.marigold.pipeline_marigold_intrinsics.MarigoldIntrinsicsOutput

-## MarigoldNormalsOutput

-[[autodoc]] pipelines.marigold.pipeline_marigold_normals.MarigoldNormalsOutput

\ No newline at end of file

+[[autodoc]] pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_intrinsics

diff --git a/docs/source/en/api/pipelines/overview.md b/docs/source/en/api/pipelines/overview.md

index ece3ebb4c340..6a8e82a692e0 100644

--- a/docs/source/en/api/pipelines/overview.md

+++ b/docs/source/en/api/pipelines/overview.md

@@ -65,7 +65,7 @@ The table below lists all the pipelines currently available in 🤗 Diffusers an

| [Latte](latte) | text2image |

| [LEDITS++](ledits_pp) | image editing |

| [Lumina-T2X](lumina) | text2image |

-| [Marigold](marigold) | depth |

+| [Marigold](marigold) | depth-estimation, normals-estimation, intrinsic-decomposition |

| [MultiDiffusion](panorama) | text2image |

| [MusicLDM](musicldm) | text2audio |

| [PAG](pag) | text2image |

diff --git a/docs/source/en/using-diffusers/marigold_usage.md b/docs/source/en/using-diffusers/marigold_usage.md

index e9756b7f1c8e..b8e9a5838e8d 100644

--- a/docs/source/en/using-diffusers/marigold_usage.md

+++ b/docs/source/en/using-diffusers/marigold_usage.md

@@ -1,4 +1,6 @@

-

-# Marigold Pipelines for Computer Vision Tasks

+# Marigold Computer Vision

-[Marigold](../api/pipelines/marigold) is a novel diffusion-based dense prediction approach, and a set of pipelines for various computer vision tasks, such as monocular depth estimation.

+**Marigold** is a diffusion-based [method](https://huggingface.co/papers/2312.02145) and a collection of [pipelines](../api/pipelines/marigold) designed for

+dense computer vision tasks, including **monocular depth prediction**, **surface normals estimation**, and **intrinsic

+image decomposition**.

-This guide will show you how to use Marigold to obtain fast and high-quality predictions for images and videos.

+This guide will walk you through using Marigold to generate fast and high-quality predictions for images and videos.

-Each pipeline supports one Computer Vision task, which takes an input RGB image as input and produces a *prediction* of the modality of interest, such as a depth map of the input image.

-Currently, the following tasks are implemented:

+Each pipeline is tailored for a specific computer vision task, processing an input RGB image and generating a

+corresponding prediction.

+Currently, the following computer vision tasks are implemented:

-| Pipeline | Predicted Modalities | Demos |

-|---------------------------------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------|:--------------------------------------------------------------------------------------------------------------------------------------------------:|

-| [MarigoldDepthPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_depth.py) | [Depth](https://en.wikipedia.org/wiki/Depth_map), [Disparity](https://en.wikipedia.org/wiki/Binocular_disparity) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-lcm), [Slow Original Demo (DDIM)](https://huggingface.co/spaces/prs-eth/marigold) |

-| [MarigoldNormalsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_normals.py) | [Surface normals](https://en.wikipedia.org/wiki/Normal_mapping) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-normals-lcm) |

+| Pipeline | Recommended Model Checkpoints | Spaces (Interactive Apps) | Predicted Modalities |

+|---------------------------------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------------------------------------------------:|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [MarigoldDepthPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_depth.py) | [prs-eth/marigold-depth-v1-1](https://huggingface.co/prs-eth/marigold-depth-v1-1) | [Depth Estimation](https://huggingface.co/spaces/prs-eth/marigold) | [Depth](https://en.wikipedia.org/wiki/Depth_map), [Disparity](https://en.wikipedia.org/wiki/Binocular_disparity) |

+| [MarigoldNormalsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_normals.py) | [prs-eth/marigold-normals-v1-1](https://huggingface.co/prs-eth/marigold-normals-v1-1) | [Surface Normals Estimation](https://huggingface.co/spaces/prs-eth/marigold-normals) | [Surface normals](https://en.wikipedia.org/wiki/Normal_mapping) |

+| [MarigoldIntrinsicsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_intrinsics.py) | [prs-eth/marigold-iid-appearance-v1-1](https://huggingface.co/prs-eth/marigold-iid-appearance-v1-1), [prs-eth/marigold-iid-lighting-v1-1](https://huggingface.co/prs-eth/marigold-iid-lighting-v1-1) | [Intrinsic Image Decomposition](https://huggingface.co/spaces/prs-eth/marigold-iid) | [Albedo](https://en.wikipedia.org/wiki/Albedo), [Materials](https://www.n.aiq3d.com/wiki/roughnessmetalnessao-map), [Lighting](https://en.wikipedia.org/wiki/Diffuse_reflection) |

-The original checkpoints can be found under the [PRS-ETH](https://huggingface.co/prs-eth/) Hugging Face organization.

-These checkpoints are meant to work with diffusers pipelines and the [original codebase](https://github.com/prs-eth/marigold).

-The original code can also be used to train new checkpoints.

+All original checkpoints are available under the [PRS-ETH](https://huggingface.co/prs-eth/) organization on Hugging Face.

+They are designed for use with diffusers pipelines and the [original codebase](https://github.com/prs-eth/marigold), which can also be used to train

+new model checkpoints.

+The following is a summary of the recommended checkpoints, all of which produce reliable results with 1 to 4 steps.

-| Checkpoint | Modality | Comment |

-|-----------------------------------------------------------------------------------------------|----------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| [prs-eth/marigold-v1-0](https://huggingface.co/prs-eth/marigold-v1-0) | Depth | The first Marigold Depth checkpoint, which predicts *affine-invariant depth* maps. The performance of this checkpoint in benchmarks was studied in the original [paper](https://huggingface.co/papers/2312.02145). Designed to be used with the `DDIMScheduler` at inference, it requires at least 10 steps to get reliable predictions. Affine-invariant depth prediction has a range of values in each pixel between 0 (near plane) and 1 (far plane); both planes are chosen by the model as part of the inference process. See the `MarigoldImageProcessor` reference for visualization utilities. |

-| [prs-eth/marigold-depth-lcm-v1-0](https://huggingface.co/prs-eth/marigold-depth-lcm-v1-0) | Depth | The fast Marigold Depth checkpoint, fine-tuned from `prs-eth/marigold-v1-0`. Designed to be used with the `LCMScheduler` at inference, it requires as little as 1 step to get reliable predictions. The prediction reliability saturates at 4 steps and declines after that. |

-| [prs-eth/marigold-normals-v0-1](https://huggingface.co/prs-eth/marigold-normals-v0-1) | Normals | A preview checkpoint for the Marigold Normals pipeline. Designed to be used with the `DDIMScheduler` at inference, it requires at least 10 steps to get reliable predictions. The surface normals predictions are unit-length 3D vectors with values in the range from -1 to 1. *This checkpoint will be phased out after the release of `v1-0` version.* |

-| [prs-eth/marigold-normals-lcm-v0-1](https://huggingface.co/prs-eth/marigold-normals-lcm-v0-1) | Normals | The fast Marigold Normals checkpoint, fine-tuned from `prs-eth/marigold-normals-v0-1`. Designed to be used with the `LCMScheduler` at inference, it requires as little as 1 step to get reliable predictions. The prediction reliability saturates at 4 steps and declines after that. *This checkpoint will be phased out after the release of `v1-0` version.* |

-The examples below are mostly given for depth prediction, but they can be universally applied with other supported modalities.

+| Checkpoint | Modality | Comment |

+|-----------------------------------------------------------------------------------------------------|--------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [prs-eth/marigold-depth-v1-1](https://huggingface.co/prs-eth/marigold-depth-v1-1) | Depth | Affine-invariant depth prediction assigns each pixel a value between 0 (near plane) and 1 (far plane), with both planes determined by the model during inference. |

+| [prs-eth/marigold-normals-v1-1](https://huggingface.co/prs-eth/marigold-normals-v1-1) | Normals | The surface normals predictions are unit-length 3D vectors expressed in the screen-space camera frame, with values in the range from -1 to 1. |

+| [prs-eth/marigold-iid-appearance-v1-1](https://huggingface.co/prs-eth/marigold-iid-appearance-v1-1) | Intrinsics | InteriorVerse decomposition is comprised of Albedo and two BRDF material properties: Roughness and Metallicity. |

+| [prs-eth/marigold-iid-lighting-v1-1](https://huggingface.co/prs-eth/marigold-iid-lighting-v1-1) | Intrinsics | HyperSim decomposition of an image \\(I\\) is comprised of Albedo \\(A\\), Diffuse shading \\(S\\), and Non-diffuse residual \\(R\\): \\(I = A*S+R\\). |

+

+The examples below are mostly given for depth prediction, but they can be universally applied to other supported

+modalities.

We showcase the predictions using the same input image of Albert Einstein generated by Midjourney.

This makes it easier to compare visualizations of the predictions across various modalities and checkpoints.

@@ -47,19 +56,21 @@ This makes it easier to compare visualizations of the predictions across various

-### Depth Prediction Quick Start

+## Depth Prediction

-To get the first depth prediction, load `prs-eth/marigold-depth-lcm-v1-0` checkpoint into `MarigoldDepthPipeline` pipeline, put the image through the pipeline, and save the predictions:

+To get a depth prediction, load the `prs-eth/marigold-depth-v1-1` checkpoint into [`MarigoldDepthPipeline`],

+put the image through the pipeline, and save the predictions:

```python

import diffusers

import torch

pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

- "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16

+ "prs-eth/marigold-depth-v1-1", variant="fp16", torch_dtype=torch.float16

).to("cuda")

image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

depth = pipe(image)

vis = pipe.image_processor.visualize_depth(depth.prediction)

@@ -69,10 +80,13 @@ depth_16bit = pipe.image_processor.export_depth_to_16bit_png(depth.prediction)

depth_16bit[0].save("einstein_depth_16bit.png")

```

-The visualization function for depth [`~pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_depth`] applies one of [matplotlib's colormaps](https://matplotlib.org/stable/users/explain/colors/colormaps.html) (`Spectral` by default) to map the predicted pixel values from a single-channel `[0, 1]` depth range into an RGB image.

-With the `Spectral` colormap, pixels with near depth are painted red, and far pixels are assigned blue color.

+The [`~pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_depth`] function applies one of

+[matplotlib's colormaps](https://matplotlib.org/stable/users/explain/colors/colormaps.html) (`Spectral` by default) to map the predicted pixel values from a single-channel `[0, 1]`

+depth range into an RGB image.

+With the `Spectral` colormap, pixels with near depth are painted red, and far pixels are blue.

The 16-bit PNG file stores the single channel values mapped linearly from the `[0, 1]` range into `[0, 65535]`.
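As a rough illustration of the linear `[0, 1]` to `[0, 65535]` mapping, here is a pure-Python sketch. The function `depth_to_uint16` is hypothetical; the clamping and round-to-nearest behavior are assumptions and may differ from what `export_depth_to_16bit_png` does internally.

```python
def depth_to_uint16(value: float) -> int:
    """Map an affine-invariant depth value in [0, 1] to a 16-bit integer code."""
    # Assumption: out-of-range values are clamped rather than rejected.
    clamped = min(max(value, 0.0), 1.0)
    # Linear scaling to [0, 65535], rounded to the nearest integer.
    return int(clamped * 65535 + 0.5)


# The near plane (0.0) maps to 0 and the far plane (1.0) maps to 65535.
for depth in (0.0, 0.25, 1.0):
    print(depth, depth_to_uint16(depth))
```

Sixteen bits give 65536 distinct levels, which preserves far more depth detail than an 8-bit grayscale image could.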

-Below are the raw and the visualized predictions; as can be seen, dark areas (mustache) are easier to distinguish in the visualization:

+Below are the raw and the visualized predictions. The darker and closer areas (mustache) are easier to distinguish in

+the visualization.

@@ -89,28 +103,33 @@ Below are the raw and the visualized predictions; as can be seen, dark areas (mu

-### Surface Normals Prediction Quick Start

+## Surface Normals Estimation

-Load `prs-eth/marigold-normals-lcm-v0-1` checkpoint into `MarigoldNormalsPipeline` pipeline, put the image through the pipeline, and save the predictions:

+Load the `prs-eth/marigold-normals-v1-1` checkpoint into [`MarigoldNormalsPipeline`], put the image through the

+pipeline, and save the predictions:

```python

import diffusers

import torch

pipe = diffusers.MarigoldNormalsPipeline.from_pretrained(

- "prs-eth/marigold-normals-lcm-v0-1", variant="fp16", torch_dtype=torch.float16

+ "prs-eth/marigold-normals-v1-1", variant="fp16", torch_dtype=torch.float16

).to("cuda")

image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

normals = pipe(image)

vis = pipe.image_processor.visualize_normals(normals.prediction)

vis[0].save("einstein_normals.png")

```

-The visualization function for normals [`~pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_normals`] maps the three-dimensional prediction with pixel values in the range `[-1, 1]` into an RGB image.

-The visualization function supports flipping surface normals axes to make the visualization compatible with other choices of the frame of reference.

-Conceptually, each pixel is painted according to the surface normal vector in the frame of reference, where `X` axis points right, `Y` axis points up, and `Z` axis points at the viewer.

+The [`~pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_normals`] function maps the three-dimensional

+prediction with pixel values in the range `[-1, 1]` into an RGB image.

+The visualization function supports flipping surface normals axes to make the visualization compatible with other

+choices of the frame of reference.

+Conceptually, each pixel is painted according to the surface normal vector in the frame of reference, where the `X` axis

+points right, the `Y` axis points up, and the `Z` axis points at the viewer.

Below is the visualized prediction:

@@ -122,208 +141,226 @@ Below is the visualized prediction:

-In this example, the nose tip almost certainly has a point on the surface, in which the surface normal vector points straight at the viewer, meaning that its coordinates are `[0, 0, 1]`.

+In this example, the nose tip almost certainly has a point on the surface at which the surface normal vector points

+straight at the viewer, meaning that its coordinates are `[0, 0, 1]`.

This vector maps to the RGB `[128, 128, 255]`, which corresponds to the violet-blue color.

-Similarly, a surface normal on the cheek in the right part of the image has a large `X` component, which increases the red hue.

+Similarly, a surface normal on the cheek in the right part of the image has a large `X` component, which increases the

+red hue.

Points on the shoulders pointing up with a large `Y` promote green color.
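The color reasoning above can be checked with a small sketch that maps each normal component from `[-1, 1]` to `[0, 255]`. The function `normal_to_rgb` is hypothetical, and the exact rounding used by `visualize_normals` is an assumption; the sketch only reproduces the `[0, 0, 1]` to `[128, 128, 255]` example from the text.

```python
def normal_to_rgb(normal):
    """Map a unit-length normal (x, y, z) with components in [-1, 1] to an 8-bit RGB triple."""
    rgb = []
    for component in normal:
        # Assumption: components are clamped, shifted to [0, 1], scaled, and rounded to nearest.
        clamped = min(max(component, -1.0), 1.0)
        rgb.append(int((clamped + 1.0) * 0.5 * 255 + 0.5))
    return tuple(rgb)


print(normal_to_rgb((0.0, 0.0, 1.0)))  # normal pointing at the viewer: violet-blue
print(normal_to_rgb((1.0, 0.0, 0.0)))  # normal pointing right: strong red channel
print(normal_to_rgb((0.0, 1.0, 0.0)))  # normal pointing up: strong green channel
```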

-### Speeding up inference

+## Intrinsic Image Decomposition

-The above quick start snippets are already optimized for speed: they load the LCM checkpoint, use the `fp16` variant of weights and computation, and perform just one denoising diffusion step.

-The `pipe(image)` call completes in 280ms on RTX 3090 GPU.

-Internally, the input image is encoded with the Stable Diffusion VAE encoder, then the U-Net performs one denoising step, and finally, the prediction latent is decoded with the VAE decoder into pixel space.

-In this case, two out of three module calls are dedicated to converting between pixel and latent space of LDM.

-Because Marigold's latent space is compatible with the base Stable Diffusion, it is possible to speed up the pipeline call by more than 3x (85ms on RTX 3090) by using a [lightweight replacement of the SD VAE](../api/models/autoencoder_tiny):

+Marigold provides two models for Intrinsic Image Decomposition (IID): "Appearance" and "Lighting".

+Both models produce Albedo maps, derived from InteriorVerse and Hypersim annotations, respectively.

-```diff

- import diffusers

- import torch

+- The "Appearance" model also estimates Material properties: Roughness and Metallicity.

+- The "Lighting" model generates Diffuse Shading and Non-diffuse Residual.

- pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

- "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16

- ).to("cuda")

+Here is the sample code saving predictions made by the "Appearance" model:

-+ pipe.vae = diffusers.AutoencoderTiny.from_pretrained(

-+ "madebyollin/taesd", torch_dtype=torch.float16

-+ ).cuda()

+```python

+import diffusers

+import torch

- image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

- depth = pipe(image)

+pipe = diffusers.MarigoldIntrinsicsPipeline.from_pretrained(

+ "prs-eth/marigold-iid-appearance-v1-1", variant="fp16", torch_dtype=torch.float16

+).to("cuda")

+

+image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

+intrinsics = pipe(image)

+

+vis = pipe.image_processor.visualize_intrinsics(intrinsics.prediction, pipe.target_properties)

+vis[0]["albedo"].save("einstein_albedo.png")

+vis[0]["roughness"].save("einstein_roughness.png")

+vis[0]["metallicity"].save("einstein_metallicity.png")

```

-As suggested in [Optimizations](../optimization/torch2.0#torch.compile), adding `torch.compile` may squeeze extra performance depending on the target hardware:

+Another example demonstrating the predictions made by the "Lighting" model:

-```diff

- import diffusers

- import torch

+```python

+import diffusers

+import torch

- pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

- "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16

- ).to("cuda")

+pipe = diffusers.MarigoldIntrinsicsPipeline.from_pretrained(

+ "prs-eth/marigold-iid-lighting-v1-1", variant="fp16", torch_dtype=torch.float16

+).to("cuda")

-+ pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

+image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

- image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

- depth = pipe(image)

+intrinsics = pipe(image)

+

+vis = pipe.image_processor.visualize_intrinsics(intrinsics.prediction, pipe.target_properties)

+vis[0]["albedo"].save("einstein_albedo.png")

+vis[0]["shading"].save("einstein_shading.png")

+vis[0]["residual"].save("einstein_residual.png")

```

-## Qualitative Comparison with Depth Anything

+Both models share the same pipeline while supporting different decomposition types.

+The exact decomposition parameterization (e.g., sRGB vs. linear space) is stored in the

+`pipe.target_properties` dictionary, which is passed into the

+[`~pipelines.marigold.marigold_image_processing.MarigoldImageProcessor.visualize_intrinsics`] function.

-With the above speed optimizations, Marigold delivers predictions with more details and faster than [Depth Anything](https://huggingface.co/docs/transformers/main/en/model_doc/depth_anything) with the largest checkpoint [LiheYoung/depth-anything-large-hf](https://huggingface.co/LiheYoung/depth-anything-large-hf):

+Below are some examples showcasing the predicted decomposition outputs.

+All modalities can be inspected in the

+[Intrinsic Image Decomposition](https://huggingface.co/spaces/prs-eth/marigold-iid) Space.

-

+

- Marigold LCM fp16 with Tiny AutoEncoder

+ Predicted albedo ("Appearance" model)

- Marigold LCM fp16 with Tiny AutoEncoder

+ Predicted albedo ("Appearance" model)

-

+

- Depth Anything Large

+ Predicted diffuse shading ("Lighting" model)

- Depth Anything Large

+ Predicted diffuse shading ("Lighting" model)

-## Maximizing Precision and Ensembling

+## Speeding up inference

-Marigold pipelines have a built-in ensembling mechanism combining multiple predictions from different random latents.

-This is a brute-force way of improving the precision of predictions, capitalizing on the generative nature of diffusion.

-The ensembling path is activated automatically when the `ensemble_size` argument is set greater than `1`.

-When aiming for maximum precision, it makes sense to adjust `num_inference_steps` simultaneously with `ensemble_size`.

-The recommended values vary across checkpoints but primarily depend on the scheduler type.

-The effect of ensembling is particularly well-seen with surface normals:

+The above quick start snippets are already optimized for quality and speed, loading the checkpoint, utilizing the

+`fp16` variant of weights and computation, and performing the default number (4) of denoising diffusion steps.

+The first step to accelerate inference, at the expense of prediction quality, is to reduce the denoising diffusion

+steps to the minimum:

-```python

-import diffusers

+```diff

+ import diffusers

+ import torch

-model_path = "prs-eth/marigold-normals-v1-0"

+ pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

+ "prs-eth/marigold-depth-v1-1", variant="fp16", torch_dtype=torch.float16

+ ).to("cuda")

-model_paper_kwargs = {

- diffusers.schedulers.DDIMScheduler: {

- "num_inference_steps": 10,

- "ensemble_size": 10,

- },

- diffusers.schedulers.LCMScheduler: {

- "num_inference_steps": 4,

- "ensemble_size": 5,

- },

-}

+ image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

+- depth = pipe(image)

++ depth = pipe(image, num_inference_steps=1)

+```

-image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+With this change, the `pipe` call completes in 280ms on an RTX 3090 GPU.

+Internally, the input image is first encoded using the Stable Diffusion VAE encoder, followed by a single denoising

+step performed by the U-Net.

+Finally, the prediction latent is decoded with the VAE decoder into pixel space.

+In this setup, two out of three module calls are dedicated to converting between the pixel and latent spaces of the LDM.

+Since Marigold's latent space is compatible with Stable Diffusion 2.0, inference can be accelerated by more than 3x,

+reducing the call time to 85ms on an RTX 3090, by using a [lightweight replacement of the SD VAE](../api/models/autoencoder_tiny).

+Note that using a lightweight VAE may slightly reduce the visual quality of the predictions.

-pipe = diffusers.MarigoldNormalsPipeline.from_pretrained(model_path).to("cuda")

-pipe_kwargs = model_paper_kwargs[type(pipe.scheduler)]

+```diff

+ import diffusers

+ import torch

-depth = pipe(image, **pipe_kwargs)

+ pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

+ "prs-eth/marigold-depth-v1-1", variant="fp16", torch_dtype=torch.float16

+ ).to("cuda")

-vis = pipe.image_processor.visualize_normals(depth.prediction)

-vis[0].save("einstein_normals.png")

++ pipe.vae = diffusers.AutoencoderTiny.from_pretrained(

++ "madebyollin/taesd", torch_dtype=torch.float16

++ ).cuda()

+

+ image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

+ depth = pipe(image, num_inference_steps=1)

```
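
To check latency figures like the ones quoted above on your own hardware, a small timing helper can be used. This is a sketch, not part of diffusers: `measure_latency_ms` is a hypothetical name, and when timing GPU work the timed callable would additionally need to call `torch.cuda.synchronize()` so that asynchronously launched kernels are included in the measurement.

```python
import statistics
import time


def measure_latency_ms(fn, warmup=1, repeats=5):
    """Return the median wall-clock time of `fn()` in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed (e.g. lazy initialization, compilation)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


# Stand-in workload; with a pipeline one would pass something like
# `lambda: pipe(image, num_inference_steps=1)` instead.
latency_ms = measure_latency_ms(lambda: sum(range(10_000)))
```

Reporting the median rather than the mean keeps a single slow run (for example, one that triggers compilation) from skewing the result.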

-

-

-

-

- Surface normals, no ensembling

-

-

-

-

-

- Surface normals, with ensembling

-

-

-

-

+

- Depth uncertainty

+ Surface normals, no ensembling

- Depth uncertainty

+ Surface normals, no ensembling

-

+

- Surface normals uncertainty

+ Surface normals, with ensembling

- Surface normals uncertainty

+ Surface normals, with ensembling

-The interpretation of uncertainty is easy: higher values (white) correspond to pixels, where the model struggles to make consistent predictions.

-Evidently, the depth model is the least confident around edges with discontinuity, where the object depth changes drastically.

-The surface normals model is the least confident in fine-grained structures, such as hair, and dark areas, such as the collar.

+As can be seen, all areas with fine-grained structures, such as hair, received more conservative and, on average, more

+correct predictions.

+Such a result is more suitable for precision-sensitive downstream tasks, such as 3D reconstruction.

## Frame-by-frame Video Processing with Temporal Consistency

-Due to Marigold's generative nature, each prediction is unique and defined by the random noise sampled for the latent initialization.

-This becomes an obvious drawback compared to traditional end-to-end dense regression networks, as exemplified in the following videos:

+Due to Marigold's generative nature, each prediction is unique and defined by the random noise sampled for the latent

+initialization.

+This becomes an obvious drawback compared to traditional end-to-end dense regression networks, as exemplified in the

+following videos:

@@ -336,26 +373,32 @@ This becomes an obvious drawback compared to traditional end-to-end dense regres

-To address this issue, it is possible to pass `latents` argument to the pipelines, which defines the starting point of diffusion.

-Empirically, we found that a convex combination of the very same starting point noise latent and the latent corresponding to the previous frame prediction give sufficiently smooth results, as implemented in the snippet below:

+To address this issue, it is possible to pass the `latents` argument to the pipelines, which defines the starting point

+of diffusion.

+Empirically, we found that a convex combination of the very same starting point noise latent and the latent

+corresponding to the previous frame prediction gives sufficiently smooth results, as implemented in the snippet below:

```python

import imageio

-from PIL import Image

-from tqdm import tqdm

import diffusers

import torch

+from diffusers.models.attention_processor import AttnProcessor2_0

+from PIL import Image

+from tqdm import tqdm

device = "cuda"

-path_in = "obama.mp4"

+path_in = "https://huggingface.co/spaces/prs-eth/marigold-lcm/resolve/c7adb5427947d2680944f898cd91d386bf0d4924/files/video/obama.mp4"

path_out = "obama_depth.gif"

pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

- "prs-eth/marigold-depth-lcm-v1-0", variant="fp16", torch_dtype=torch.float16

+ "prs-eth/marigold-depth-v1-1", variant="fp16", torch_dtype=torch.float16

).to(device)

pipe.vae = diffusers.AutoencoderTiny.from_pretrained(

"madebyollin/taesd", torch_dtype=torch.float16

).to(device)

+pipe.unet.set_attn_processor(AttnProcessor2_0())

+pipe.vae = torch.compile(pipe.vae, mode="reduce-overhead", fullgraph=True)

+pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

pipe.set_progress_bar_config(disable=True)

with imageio.get_reader(path_in) as reader:

@@ -373,7 +416,11 @@ with imageio.get_reader(path_in) as reader:

latents = 0.9 * latents + 0.1 * last_frame_latent

depth = pipe(

- frame, match_input_resolution=False, latents=latents, output_latent=True

+ frame,

+ num_inference_steps=1,

+ match_input_resolution=False,

+ latents=latents,

+ output_latent=True,

)

last_frame_latent = depth.latent

out.append(pipe.image_processor.visualize_depth(depth.prediction)[0])

@@ -382,7 +429,8 @@ with imageio.get_reader(path_in) as reader:

```

Here, the diffusion process starts from the given computed latent.

-The pipeline sets `output_latent=True` to access `out.latent` and computes its contribution to the next frame's latent initialization.

+The pipeline sets `output_latent=True` to access `depth.latent` and computes its contribution to the next frame's latent

+initialization.

The result is much more stable now:

@@ -414,7 +462,7 @@ image = diffusers.utils.load_image(

)

pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

- "prs-eth/marigold-depth-lcm-v1-0", torch_dtype=torch.float16, variant="fp16"

+ "prs-eth/marigold-depth-v1-1", torch_dtype=torch.float16, variant="fp16"

).to(device)

depth_image = pipe(image, generator=generator).prediction

@@ -463,4 +511,95 @@ controlnet_out[0].save("motorcycle_controlnet_out.png")

-Hopefully, you will find Marigold useful for solving your downstream tasks, be it a part of a more broad generative workflow, or a perception task, such as 3D reconstruction.

+## Quantitative Evaluation

+

+To evaluate Marigold quantitatively in standard leaderboards and benchmarks (such as NYU, KITTI, and other datasets),

+follow the evaluation protocol outlined in the paper: load the full precision fp32 model and use appropriate values

+for `num_inference_steps` and `ensemble_size`.

+Optionally seed randomness to ensure reproducibility.

+Maximizing `batch_size` will deliver maximum device utilization.

+

+```python

+import diffusers

+import torch

+

+device = "cuda"

+seed = 2024

+

+generator = torch.Generator(device=device).manual_seed(seed)

+pipe = diffusers.MarigoldDepthPipeline.from_pretrained("prs-eth/marigold-depth-v1-1").to(device)

+

+image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

+depth = pipe(

+ image,

+ num_inference_steps=4, # set according to the evaluation protocol from the paper

+ ensemble_size=10, # set according to the evaluation protocol from the paper

+ generator=generator,

+)

+

+# evaluate metrics

+```
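
What goes in place of the `# evaluate metrics` comment depends on the benchmark. As a rough sketch, two metrics commonly reported for monocular depth, the absolute relative error (AbsRel) and the δ1 accuracy (the fraction of pixels with `max(pred/gt, gt/pred) < 1.25`), can be computed as shown below. The function names are hypothetical, depth maps are flattened to plain lists for brevity, and any alignment of the prediction to the ground-truth scale that the evaluation protocol calls for is assumed to have been done beforehand.

```python
def abs_rel(pred, gt):
    """Mean absolute relative error over pixels with valid (positive) ground truth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


def delta1(pred, gt, threshold=1.25):
    """Fraction of valid pixels where max(pred/gt, gt/pred) is below the threshold."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    # A non-positive prediction cannot satisfy the ratio test and counts as a miss.
    hits = sum(1 for p, g in pairs if p > 0 and max(p / g, g / p) < threshold)
    return hits / len(pairs)


# Toy example with four pixels; the last one has no valid ground truth and is skipped.
pred = [1.0, 2.2, 3.0, 5.0]
gt = [1.0, 2.0, 4.0, 0.0]
print(abs_rel(pred, gt), delta1(pred, gt))
```

In practice these would be computed with tensor operations over whole images and averaged over the dataset; the list-based version only makes the definitions explicit.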

+

+## Using Predictive Uncertainty

+

+The ensembling mechanism built into Marigold pipelines combines multiple predictions obtained from different random

+latents.

+As a side effect, it can be used to quantify epistemic (model) uncertainty; simply specify `ensemble_size` greater

+than or equal to 3 and set `output_uncertainty=True`.

+The resulting uncertainty will be available in the `uncertainty` field of the output.

+It can be visualized as follows:

+

+```python

+import diffusers

+import torch

+

+pipe = diffusers.MarigoldDepthPipeline.from_pretrained(

+ "prs-eth/marigold-depth-v1-1", variant="fp16", torch_dtype=torch.float16

+).to("cuda")

+

+image = diffusers.utils.load_image("https://marigoldmonodepth.github.io/images/einstein.jpg")

+

+depth = pipe(

+ image,

+ ensemble_size=10, # any number >= 3

+ output_uncertainty=True,

+)

+

+uncertainty = pipe.image_processor.visualize_uncertainty(depth.uncertainty)

+uncertainty[0].save("einstein_depth_uncertainty.png")

+```
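
To build intuition for what the uncertainty map represents, the sketch below computes a per-pixel spread across ensemble members using only the standard library. It is an illustration under stated assumptions: the population standard deviation is used as the spread measure, the helper name `ensemble_mean_and_spread` is hypothetical, and the pipeline's own ensembling (which may align and reduce predictions differently) is not reproduced here.

```python
import statistics


def ensemble_mean_and_spread(predictions):
    """Combine per-pixel predictions from several ensemble members.

    `predictions` is a list of equally long lists, one per ensemble member.
    Returns (mean, spread), where spread is the per-pixel standard deviation.
    """
    per_pixel = list(zip(*predictions))  # regroup values pixel by pixel
    mean = [statistics.fmean(values) for values in per_pixel]
    spread = [statistics.pstdev(values) for values in per_pixel]
    return mean, spread


# Three ensemble members, three pixels: the members agree on the first pixel
# and disagree most on the last one, so its spread is the largest.
members = [
    [0.5, 0.2, 0.1],
    [0.5, 0.3, 0.5],
    [0.5, 0.4, 0.9],
]
mean, spread = ensemble_mean_and_spread(members)
```

Pixels where the members disagree end up with a larger spread, which is the kind of region that shows up bright in an uncertainty visualization.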

+

+

+

+

+

+ Depth uncertainty

+

+

+

+

+

+ Surface normals uncertainty

+

+

+

+

+

+ Albedo uncertainty

+

+

+

+

+

+

+

+

+

+

+

-

-  -

-  +

+  +

+  +

+